I know, this topic has already been addressed by quite a lot of people. Chris Webb blogged about it here(PowerPivot/DAX) and here(SSAS/MDX), Javier Guillén here, Alberto Ferrari mentions it in his video here and also PowerPivotPro blogged about it here. Still I think that there are some more things to say about it. In this post I will review the whole problem and come up with a new approach on how to solve this issue for both, tabular and multidimensional models with the best possible performance I could think of (hope I am not exaggerating here 🙂 )

OK, lets face the problem of calculating new customers first and define what a new customer for a given period actually is:

A new customer in Period X is a customer that has sales in Period X but did not have any other sales ever before. If Period X spans several smaller time periods

(e.g. Period X=January contains 31 days) then there must not be any sales before the earliest smaller time period (before 1st of January) for this customer to be counted as a new customer.

According to this definition the common approach can be divided into 2 steps:

1) find all customers that have sales till the last day in the selected period

2) subtract the number of customers that have sales till the day before the first day in the

selected period

First of all we need to create a measure that calculates our distinct customers.

For tabular it may be a simple calculated measure on your fact-table:

Total Customers:=DISTINCTCOUNT(‘Internet Sales’[CustomerKey])

For multidimensional models it should be a physical distinct count measure in your fact-table, ideally in a separate measure group.

How to solve 1) in tabular models

This is also straight forward as DAX has built-in functions that can do aggregation from the beginning of time. We use MAX(‘Date’[Date]) to get the last day in the current filter context:

Customers Till Now:=CALCULATE(

[Total Customers],

DATESBETWEEN(

‘Date’[Date],

BLANK(),

MAX(‘Date’[Date])))

How to solve 2) in tabular models

This is actually the same calculation as above, we only use MIN to get the first day in the current filter context and also subtractt “1” to get the day before the first day.

Previous Customers:=CALCULATE(

[Total Customers],

DATESBETWEEN(

‘Date’[Date],

BLANK(),

MIN(‘Date’[Date])-1))

To calculate our new customers we can simply subtract those two values:

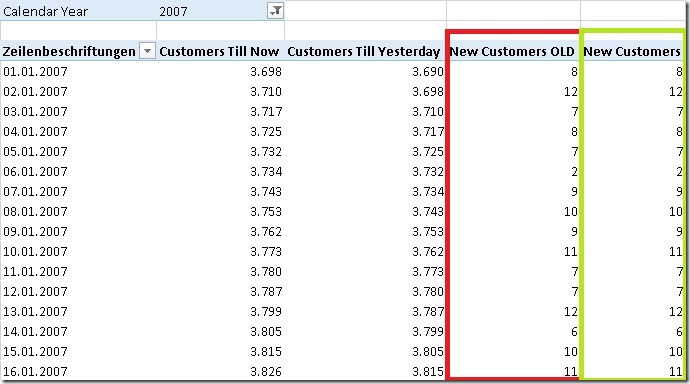

New Customers OLD:=[Customers Till Now]-[Previous Customers]

How to solve 1) + 2) in multidimensional models

Please refer to Chris Webb’s blog here. The solution is pure MDX and is based on a combination of the range-operator “{null:[Date].[Calendar].currentmember}”, NONEMPTY() and COUNT().

Well, so far nothing new.

So lets describe the solution that I came up with. It is based on a different approach. To make the approach easily understandable, we have to rephrase the answer to our original question “What are new customers”?”:

A new customer in Period X is a customer that has his first sales in Period X.

According to this new definition we again have 2 steps:

1) Find the first date with sales for each customer

2) count the customers that had their first sales in the selected period

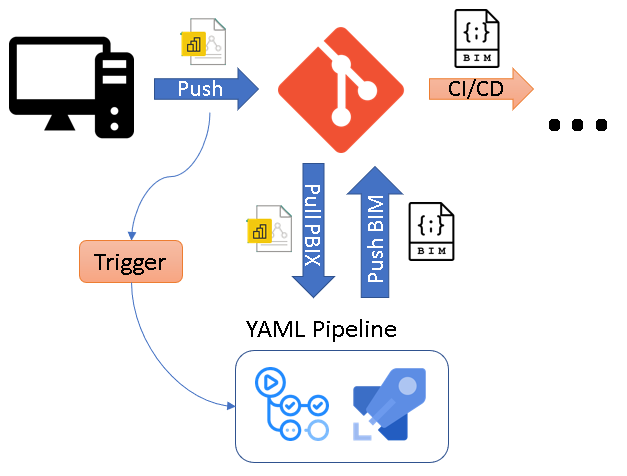

I will focus on tabular models. For multidimensional models most of the following steps have to be solved during ETL.

How to solve 1) in tabular models

This is pretty easy, we can simply create a calculated column in our Customer-table and get the first date on which the customer had sales:

=CALCULATE(MIN(‘Internet Sales’[Order Date]))

How to solve 2) in tabular models

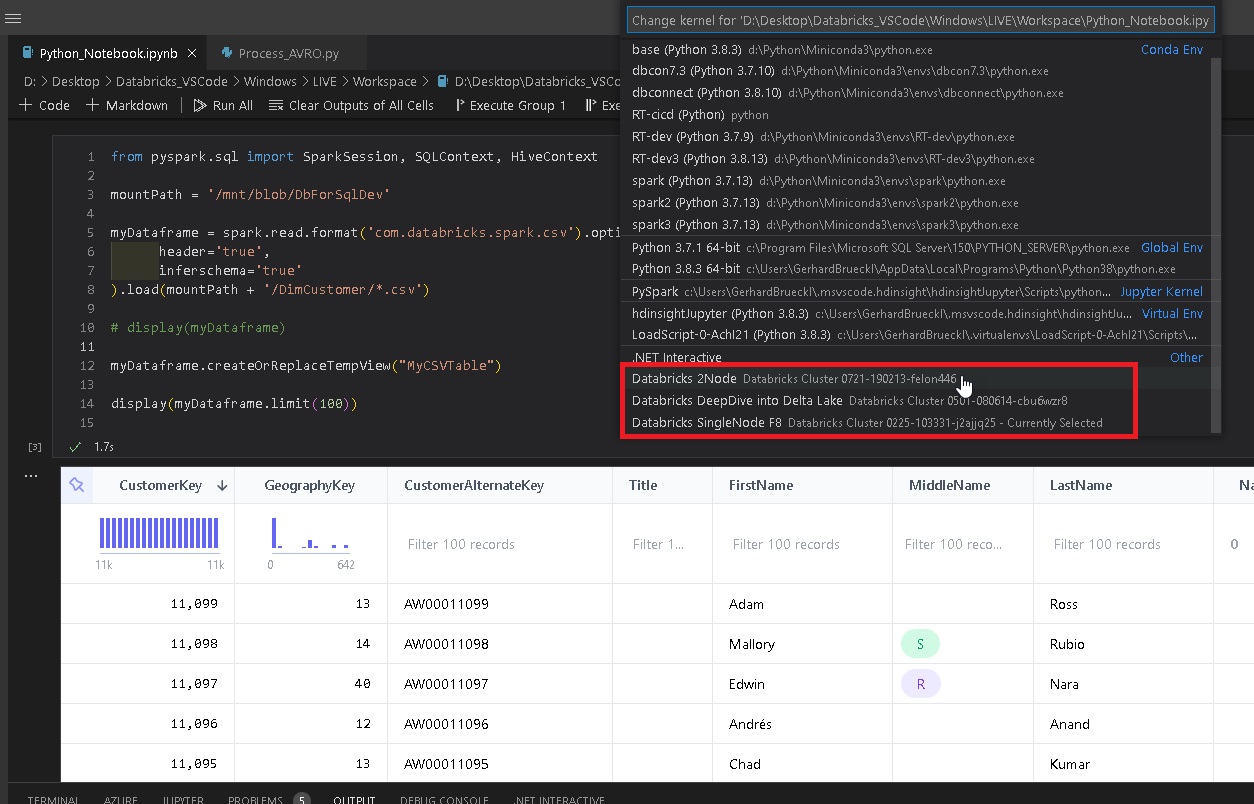

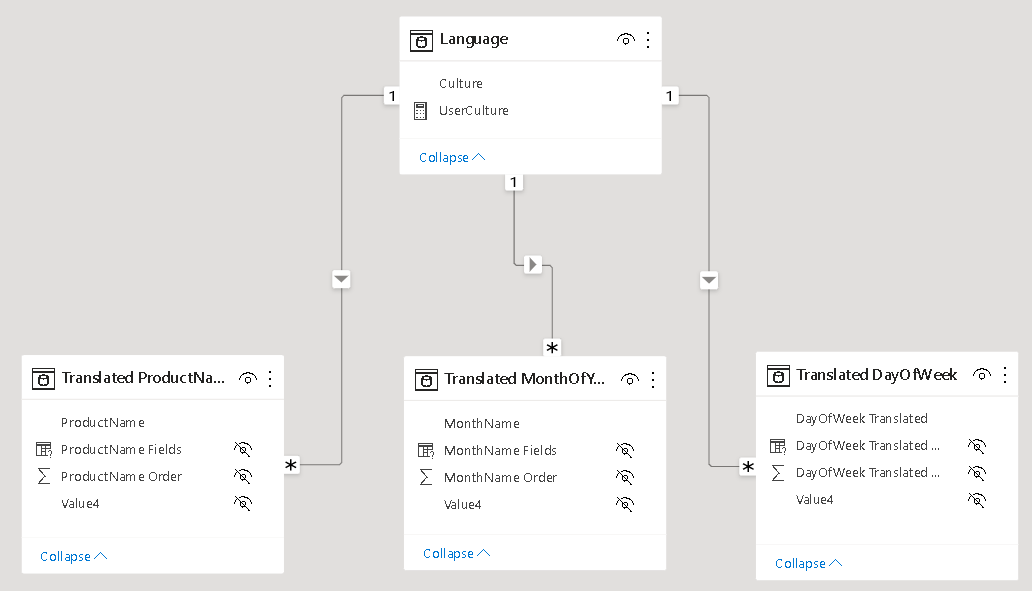

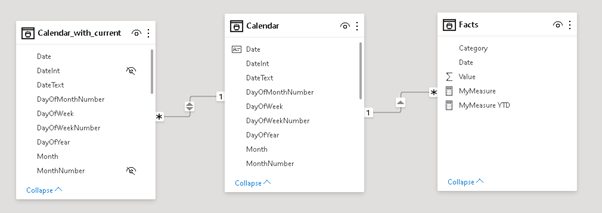

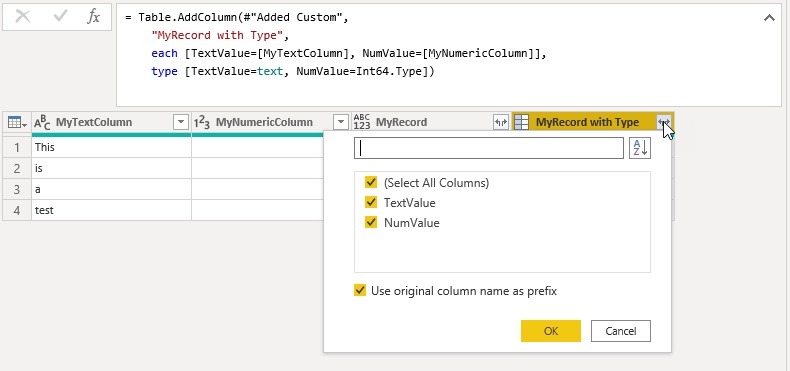

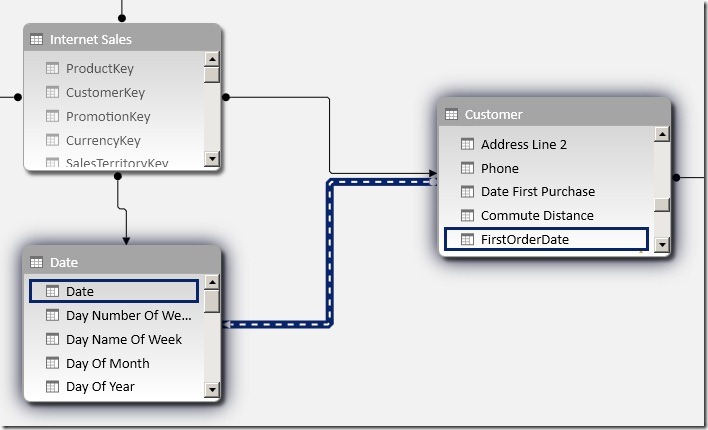

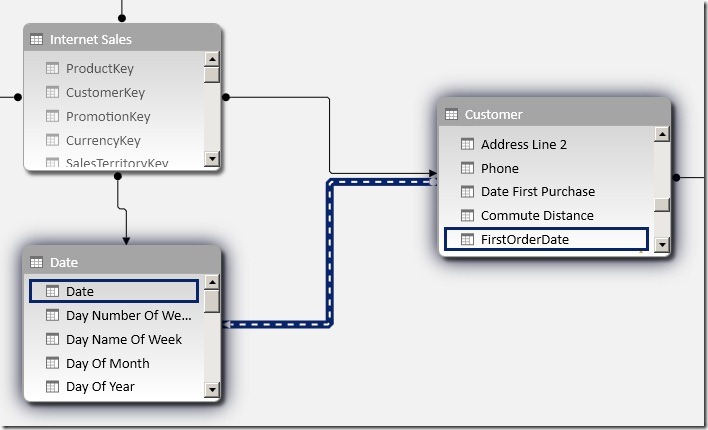

The above create calculated column allows us to relate our ‘Date’-table directly to our ‘Customer’-table. As there is already an existing relationship between those tables via ‘Internet Sales’ we have to create an inactive relationship at this point:

Using this new relationship we can very easy calculate customers that had their first sales in the selected period:

New Customers:=CALCULATE(

COUNTROWS(Customer),

USERELATIONSHIP(Customer[FirstOrderDate], ‘Date’[Date]))

Pretty neat, isn’t it?

We can use COUNTROWS() opposed to a distinct count measure as our ‘Customer’-table only contains unique customers – so we can count each row in the current filter context.

Another nice thing is that we do not have to use any Time-Intelligence function like DATESBETWEEN which are usually resolved using FILTER that would iterate over the whole table. Further it also works with all columns of our ‘Date’-table, no matter whether it is [Calendar Year], [Fiscal Semester] or [Day Name of Week]. (Have you ever wondered how many new customers you acquired on Tuesdays? 🙂 ) And finally, using USERELATIONSHIP allows us to use the full power of xVelocity as native relationships are resolved there.

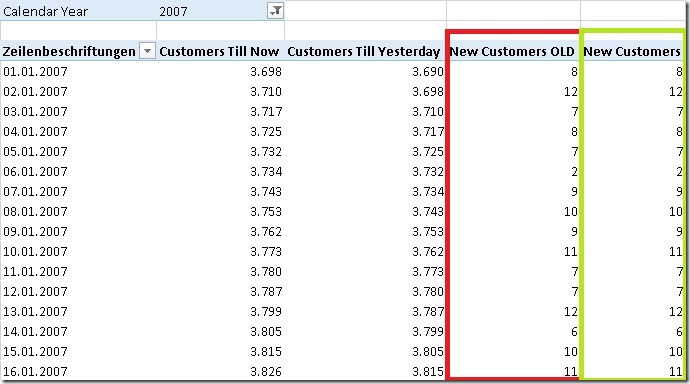

The results are of course the same as for [New Customers OLD]:

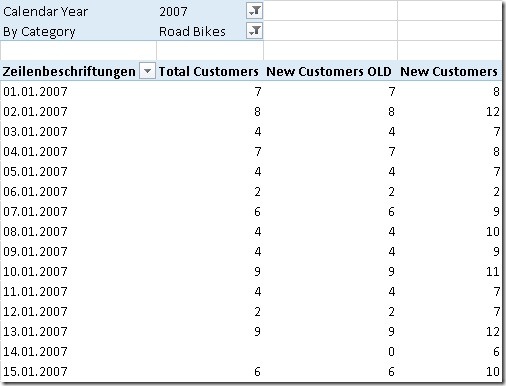

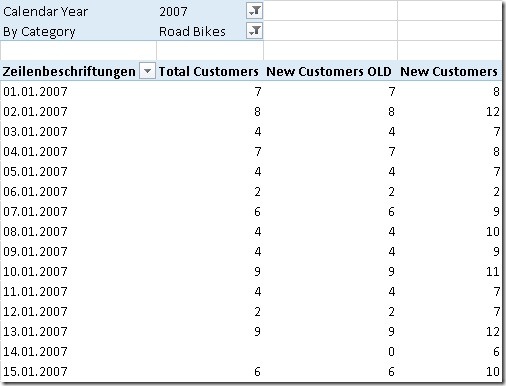

Though, there are still some issues with this calculation if there are filters on other tables:

As you can see, our new [New Customers] measure does not work in this situation as it is only related to our ‘Date’-table but not to ‘Product’.

I will address this issue in a follow-up post where I will also show how the final solution can be used for multidimensional models – Stay tuned!

Download Final Model (Office 2013!)

UPDATE: Part2 can be found here