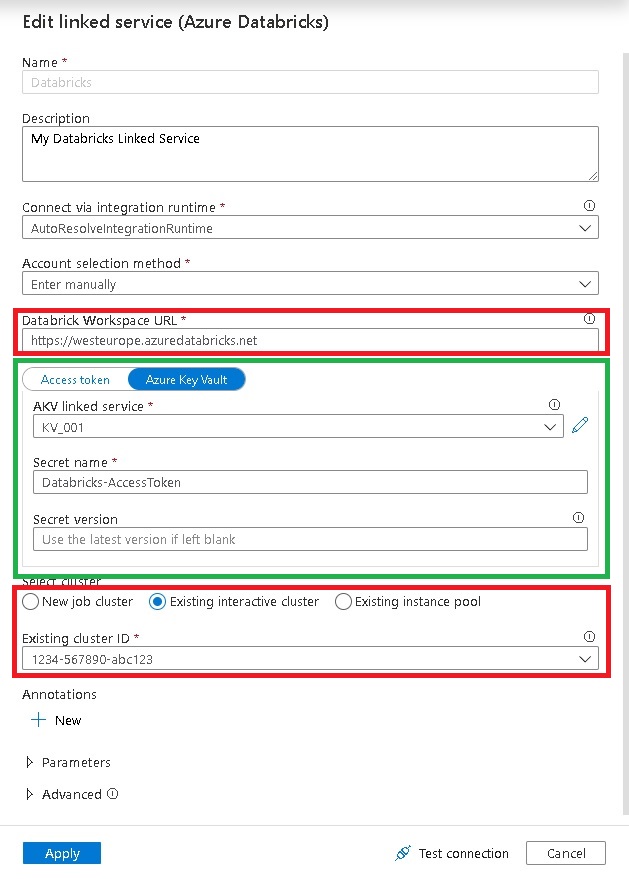

Paul Andrews (b, t) recently blogged about How to Use ‘Specify dynamic contents in JSON format’ in Azure Data Factory Linked Services. He shows how you can modify the JSON of a given Azure Data Factory linked service and inject parameters into settings which do not support dynamic content in the GUI. What he shows with linked services and parameters also applies to Key Vault references: sometimes the GUI allows you to reference a value from the Key Vault instead of hard-coding it, but for other settings the GUI only offers a simple text box:

As you can see, the setting “AccessToken” can use a Key Vault reference, whereas settings like “Databricks Workspace URL” and “Cluster” do not support it. This is usually fine because the guys at Microsoft also thought about this and support Key Vault references for the settings that are actually security-relevant or sensitive. Also, providing the option to use Key Vault references everywhere would flood the GUI. So this is just fine.

But there can be good reasons to get values from the Key Vault for non-sensitive settings as well, especially when it comes to CI/CD and multiple environments. In my experience, when you implement a bigger ADF project, you will probably have a Key Vault for your sensitive settings, while all other values are provided during the deployment via ARM parameters.

So you will end up with a mix of Key Vault references and ARM template parameters, and the latter will very likely be derived from the Key Vault at some point anyway. To solve this, you can modify the JSON of an ADF linked service directly and inject Key Vault references into almost every property!

Let's have a look at the JSON of the Databricks linked service from above:

    {
        "name": "Databricks",
        "properties": {
            "annotations": [],
            "type": "AzureDatabricks",
            "typeProperties": {
                "domain": "https://westeurope.azuredatabricks.net",
                "accessToken": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": "KV_001",
                        "type": "LinkedServiceReference"
                    },
                    "secretName": "Databricks-AccessToken"
                },
                "existingClusterId": "0717-094253-sir805"
            },
            "description": "My Databricks Linked Service"
        },
        "type": "Microsoft.DataFactory/factories/linkedservices"
    }

As you can see in lines 8-15, the property “accessToken” references the secret “Databricks-AccessToken” from the Key Vault linked service “KV_001”, and the actual value is populated at runtime.

After reading all this, you can probably guess what we are going to do next: we also replace the other properties with Key Vault references:

    {
        "name": "Databricks",
        "properties": {
            "type": "AzureDatabricks",
            "annotations": [],
            "typeProperties": {
                "domain": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": "KV_001",
                        "type": "LinkedServiceReference"
                    },
                    "secretName": "Databricks-Workspace-URL"
                },
                "accessToken": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": "KV_001",
                        "type": "LinkedServiceReference"
                    },
                    "secretName": "Databricks-AccessToken"
                },
                "existingClusterId": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": "KV_001",
                        "type": "LinkedServiceReference"
                    },
                    "secretName": "Databricks-ClusterID"
                }
            }
        }
    }

You now have a linked service that is configured solely by the Key Vault. If you think one step further, you can replace all values which are usually sourced from ARM parameters with Key Vault references instead, and you will end up with an ARM template that only has two parameters: the name of the Data Factory and the URI of the Key Vault linked service! (You may even be able to derive the Key Vault's URI from the Data Factory name if the names are aligned!)
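If many linked services need the same treatment, the manual JSON edit can also be scripted. The following is only a minimal Python sketch of that idea, not an official tool: the function names `key_vault_reference` and `inject_key_vault_references` are hypothetical, and the linked service dictionary is an abbreviated copy of the Databricks example.

```python
import copy
import json


def key_vault_reference(store_name: str, secret_name: str) -> dict:
    """Build an AzureKeyVaultSecret object in the shape used by the linked service JSON."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": store_name,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


def inject_key_vault_references(linked_service: dict, store_name: str, secrets: dict) -> dict:
    """Return a copy in which each listed typeProperty is a Key Vault reference.

    `secrets` maps a key of typeProperties to the name of the secret to use.
    """
    result = copy.deepcopy(linked_service)
    type_properties = result["properties"]["typeProperties"]
    for prop, secret_name in secrets.items():
        if prop not in type_properties:
            raise KeyError(f"typeProperties has no property named {prop!r}")
        type_properties[prop] = key_vault_reference(store_name, secret_name)
    return result


# Abbreviated copy of the Databricks linked service with hard-coded values.
databricks = {
    "name": "Databricks",
    "properties": {
        "annotations": [],
        "type": "AzureDatabricks",
        "typeProperties": {
            "domain": "https://westeurope.azuredatabricks.net",
            "accessToken": key_vault_reference("KV_001", "Databricks-AccessToken"),
            "existingClusterId": "0717-094253-sir805",
        },
    },
}

converted = inject_key_vault_references(
    databricks,
    store_name="KV_001",
    secrets={
        "domain": "Databricks-Workspace-URL",
        "existingClusterId": "Databricks-ClusterID",
    },
)
print(json.dumps(converted, indent=2))
```

The function works on a deep copy, so the original definition stays untouched and both versions can be compared before anything is published to the Data Factory.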

The only drawback I could find so far is that you cannot use the GUI anymore but need to work with the JSON from now on, or at least until you remove the Key Vault references so that the GUI can display the linked service properly again. But this is just a minor thing, as linked services usually do not change very often.

I also tried using the same approach to inject Key Vault references into pipelines and datasets, but unfortunately this did not work 🙁

This is probably because Pipelines and Datasets are evaluated at a different stage and hence cannot dynamically reference the Key Vault.


I have done this once for SQL Server with windows authentication, and parameterized the user name with a keyvault value like this. However we’ve had a lot of credential caching issues, where old user name values would remain cached on the IRT even though the key vault secret was updated.

Nice catch! Need to check that.

Do you remember whether this applied to all values from key vault or only those “hacked in” manually?

The issue was only with the hacked user name value, we tried to change it but it kept trying to log in with the old value. Only way to resolve it was to configure a new keyvault secret. This was last year however, maybe things have changed since then.

interesting, it *should* at least reload the secret from KeyVault if you do a “Test Connection” or a full deployment

Hence it is fine for most of my scenarios where those values do not change frequently but only as part of a (full) deployment

for using data from azure key vault in pipelines I use this:

https://docs.microsoft.com/en-us/azure/data-factory/how-to-use-azure-key-vault-secrets-pipeline-activities

A web activity pulls the data for the entries, then you could use their output in any activity.
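As a rough illustration of what such a Web activity looks like, here is a hedged Python sketch that only builds and prints its JSON. The activity name `GetSecret`, the vault URL `https://my-keyvault.vault.azure.net/` and the `api-version` value are placeholders or assumptions, so please check them against the linked documentation.

```python
import json


def get_secret_activity(vault_url: str, secret_name: str, name: str = "GetSecret") -> dict:
    """Build a Web activity that reads one secret using the factory's managed identity."""
    return {
        "name": name,
        "type": "WebActivity",
        # Keep the secret value out of the activity output shown in monitoring.
        "policy": {"secureOutput": True},
        "typeProperties": {
            "url": f"{vault_url.rstrip('/')}/secrets/{secret_name}?api-version=7.0",
            "method": "GET",
            "authentication": {"type": "MSI", "resource": "https://vault.azure.net"},
        },
    }


def secret_value_expression(activity_name: str) -> str:
    """ADF expression that a later activity can use to read the secret value."""
    return f"@activity('{activity_name}').output.value"


activity = get_secret_activity("https://my-keyvault.vault.azure.net/", "Databricks-ClusterID")
expression = secret_value_expression(activity["name"])
print(json.dumps(activity, indent=2))
print(expression)
```

The managed identity of the Data Factory needs permission to read secrets from the vault for this call to succeed.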

yes, this also works fine when used inside of pipelines.

For Linked Services and Datasets this only works indirectly and is more complicated, as you would need to pass all Key Vault values via a parameter to each and every single Dataset/Linked Service

Bit late, but would this also work for global parameters? It would be quite nice to define a global parameter via a KV reference, so that I can afterwards use the global parameter for an HTTP call. It also makes things way easier for different environments (dev, prod)

I tried it out in a test environment, but ADF basically took the JSON as a literal string.

Any idea if this is possible?

if I am not mistaken, global parameters can only be used in activities but not in e.g. Linked Services where it would make sense

so you would need to pass the global parameter from the activity down to the linked service – each and every time!

in general I try to package all configurations (sensitive and non-sensitive) into a keyvault and reference everything from there. so for the deployment the only thing I have to change is the URL of the KeyVault linked service
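A minimal Python sketch of that deployment step could look like the following. It assumes a purely hypothetical naming convention in which a factory called `adf-<project>-<env>` has a matching vault called `kv-<project>-<env>`; the function names are made up for illustration as well.

```python
import json


def key_vault_url_for(factory_name: str) -> str:
    """Derive the vault URL from the factory name (assumed convention: adf-* maps to kv-*)."""
    prefix = "adf-"
    if not factory_name.startswith(prefix):
        raise ValueError(f"unexpected factory name: {factory_name!r}")
    vault_name = "kv-" + factory_name[len(prefix):]
    return f"https://{vault_name}.vault.azure.net/"


def key_vault_linked_service(factory_name: str, name: str = "KV_001") -> dict:
    """Build the Key Vault linked service, the only definition that differs per environment."""
    return {
        "name": name,
        "properties": {
            "type": "AzureKeyVault",
            "typeProperties": {"baseUrl": key_vault_url_for(factory_name)},
        },
    }


for factory in ("adf-sales-dev", "adf-sales-prod"):
    print(json.dumps(key_vault_linked_service(factory), indent=2))
```

All other linked services keep referencing `KV_001` by name, so they can stay identical across environments.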

Your approach to packaging everything in a key vault sounds incredibly efficient.

However, I’m facing a specific challenge where the global parameter needs to vary across different environments. Here’s the scenario:

1. Objective: I need to make an API request to Power BI to initiate the refresh of a semantic model.

2. Requirements: This request requires a Bearer token for my Service Principal, which I obtain using the client secret, client ID, and tenant ID. Additionally, the workspace ID and dataset ID of Power BI are necessary.

3. Current Process: I currently acquire these values through an HTTP request to Azure Key Vault. In my pipeline, I parametrize the secret names and set the key vault URL as a global parameter. The crucial aspect here is the need to alter this global parameter for each environment (development, acceptance, production, etc.), which I do via an Azure DevOps Pipelines job.

My question is: While I don’t need the Key Vault Linked Service for setting other linked service properties, I do need to use a secret as a global parameter. Do you think integrating Key Vault in this manner for environment-specific global parameters is feasible?

If so, a working example would be nice 🙂

in that case I would simply use the ADF Managed Service Identity (MSI) for authentication and grant it access to your Power BI workspace

then you don't need to worry about secrets and key vaults at all
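A hedged sketch of such a Web activity, again written as Python that only prints the JSON: the activity name, the all-zero workspace and dataset IDs, the request body and the `resource` value are assumptions for illustration and should be verified against the Power BI REST API documentation.

```python
import json


def refresh_dataset_activity(workspace_id: str, dataset_id: str) -> dict:
    """Build a Web activity that triggers a dataset refresh using the factory's MSI."""
    return {
        "name": "RefreshSemanticModel",
        "type": "WebActivity",
        "typeProperties": {
            "url": (
                "https://api.powerbi.com/v1.0/myorg/"
                f"groups/{workspace_id}/datasets/{dataset_id}/refreshes"
            ),
            "method": "POST",
            # A POST needs a body; this one asks Power BI not to send notifications.
            "body": {"notifyOption": "NoNotification"},
            # No client secret involved: the token is requested for the managed identity.
            "authentication": {
                "type": "MSI",
                "resource": "https://analysis.windows.net/powerbi/api",
            },
        },
    }


# Placeholder IDs; in a pipeline these would typically come from parameters.
activity = refresh_dataset_activity(
    workspace_id="00000000-0000-0000-0000-000000000000",
    dataset_id="11111111-1111-1111-1111-111111111111",
)
print(json.dumps(activity, indent=2))
```

With this approach, only the workspace ID and dataset ID differ between environments, and neither of them is a secret.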