One of the most requested features when it comes to Azure ML is and has always been the integration into PowerBI. By now we are still lacking a native connector in PowerBI which would allow us to query a published Azure ML web service directly and score our datasets. Reason enough for me to dig into this issue and create some Power Query M scripts to do this. But lets first start off with the basics of Azure ML Web Services.

Every Azure ML project can be published as a Web Service with just a single click. Once its published, it can be used like any other Web Service. Usually we would send a record or a whole dataset to the Web Service, the Azure ML models does some scoring (or any other operation within Azure ML) and then sends the scored result back to the client. This is straight forward and Microsoft even supplies samples for the most common programming languages. The Web Service relies on a standardized REST API which can basically be called by any client. Yes, in our case this client will be PowerBI using Power Query.

Rui Quintino has already written an article on AzureML Web Service Scoring with Excel and Power Query and also Chris Webb wrote a more generic one on POST Request in Power Query in general Web Service and POST requests in Power Query. Even Microsoft recently published an article how you can use the R Integration of Power Query to call a Azure ML Web Service here.

Having tried these solutions, I have to admit that they have some major issues:

1) very static / hard coded

2) complex to write

3) operate on row-by-row basis and might run into the API Call Limits as discussed here.

4) need a local R installation

As Azure ML usually deal with tables, which are basically Power Query DataSets, a requirement would be to directly use a Power Query DataSet. The DataSet has to be converted dynamically into the required JSON structure to be POSTed to Azure ML. The returned result, usually a table again, should be converted back to a Power Query DataSet. And that’s what I did, I wrote a function that does all this for you. All information that you have to supply can be found in the configuration of your Azure ML Web Service:

– Request URI of your Web Service

– API Key

– the [Table to Score]

the [Table to Score] can be any Power Query table but of course has to have the very same structure (including column names and data types) as expected by the Web Service Input. Then you can simply call my function:

The whole process involves a lot of JSON conversions and is kind of complex but as I encapsulated everything into M functions it should be quite easy to use by simply calling the CallAzureMLService-function.

However, here is a little description of the used functions and the actual code:

ToAzureMLJson – converts any object that is passed in as an argument to a JSON element. If you pass in a table, it is converted to a JSON-array. Dates and Numbers are formatted correctly, etc. so the result can the be passed directly to Azure ML.

let

ToAzureMLJson= (input as any) as text =>

let

transformationList = {

[Type = type time, Transformation = (value_in as time) as text => """" & Time.ToText(value_in, "hh:mm:ss.sss") & """"],

[Type = type date, Transformation = (value_in as date) as text => """" & Date.ToText(value_in, "yyyy-MM-dd") & """"],

[Type = type datetime, Transformation = (value_in as datetime) as text => """" & DateTime.ToText(value_in, "yyyy-MM-ddThh:mm:ss.sss" & """")],

[Type = type datetimezone, Transformation = (value_in as datetimezone) as text => """" & DateTimeZone.ToText(value_in, "yyyy-MM-ddThh:mm:ss.sss") & """"],

[Type = type duration, Transformation = (value_in as duration) as text => ToAzureMLJson(Duration.TotalSeconds(value_in))],

[Type = type number, Transformation = (value_in as number) as text => Number.ToText(value_in, "G", "en-US")],

[Type = type logical, Transformation = (value_in as logical) as text => Logical.ToText(value_in)],

[Type = type text, Transformation = (value_in as text) as text => """" & value_in & """"],

[Type = type record, Transformation = (value_in as record) as text =>

let

GetFields = Record.FieldNames(value_in),

FieldsAsTable = Table.FromList(GetFields, Splitter.SplitByNothing(), {"FieldName"}, null, ExtraValues.Error),

AddFieldValue = Table.AddColumn(FieldsAsTable, "FieldValue", each Record.Field(value_in, [FieldName])),

AddJson = Table.AddColumn(AddFieldValue, "__JSON", each ToAzureMLJson([FieldValue])),

jsonOutput = "[" & Text.Combine(AddJson[__JSON], ",") & "]"

in

jsonOutput

],

[Type = type table, Transformation = (value_in as table) as text =>

let

BufferedInput = Table.Buffer(value_in),

GetColumnNames = Table.ColumnNames(BufferedInput),

ColumnNamesAsTable = Table.FromList(GetColumnNames , Splitter.SplitByNothing(), {"FieldName"}, null, ExtraValues.Error),

ColumnNamesJson = """ColumnNames"": [""" & Text.Combine(ColumnNamesAsTable[FieldName], """, """) & """]",

AddJson = Table.AddColumn(value_in, "__JSON", each ToAzureMLJson(_)),

ValuesJson = """Values"": [" & Text.Combine(AddJson[__JSON], ",#(lf)") & "]",

jsonOutput = "{""Inputs"": { ""input1"": {" & ColumnNamesJson & "," & ValuesJson & "} }, ""GlobalParameters"": {} }"

in

jsonOutput

],

[Type = type list, Transformation = (value_in as list) as text => ToAzureMLJson(Table.FromList(value_in, Splitter.SplitByNothing(), {"ListValue"}, null, ExtraValues.Error))],

[Type = type binary, Transformation = (value_in as binary) as text => """0x" & Binary.ToText(value_in, 1) & """"],

[Type = type any, Transformation = (value_in as any) as text => if value_in = null then "null" else """" & value_in & """"]

},

transformation = List.First(List.Select(transformationList , each Value.Is(input, _[Type]) or _[Type] = type any))[Transformation],

result = transformation(input)

in

result

in

ToAzureMLJson

AzureMLJsonToTable – converts the returned JSON back to a Power Query Table. It obeys column names and also data types as defined in the Azure ML Web Service output. If the output changes (e.g. new columns are added) this will be taken care of dynamically!

let

AzureMLJsonToTable = (azureMLResponse as binary) as any =>

let

WebResponseJson = Json.Document(azureMLResponse ,1252),

Results = WebResponseJson[Results],

output1 = Results[output1],

value = output1[value],

BufferedValues = Table.Buffer(Table.FromRows(value[Values])),

ColumnNameTable = Table.AddIndexColumn(Table.FromList(value[ColumnNames], Splitter.SplitByNothing(), {"NewColumnName"}, null, ExtraValues.Error), "Index", 0, 1),

ColumnNameTable_Values = Table.AddIndexColumn(Table.FromList(Table.ColumnNames(BufferedValues), null, {"ColumnName"}), "Index", 0, 1),

RenameList = Table.ToRows(Table.RemoveColumns(Table.Join(ColumnNameTable_Values, "Index", ColumnNameTable, "Index"),{"Index"})),

RenamedValues = Table.RenameColumns(BufferedValues, RenameList),

ColumnTypeTextTable = Table.AddIndexColumn(Table.FromList(value[ColumnTypes], Splitter.SplitByNothing(), {"NewColumnType_Text"}, null, ExtraValues.Error), "Index", 0, 1),

ColumnTypeText2Table = Table.AddColumn(ColumnTypeTextTable, "NewColumnType", each

if Text.Contains([NewColumnType_Text], "Int") then type number

else if Text.Contains([NewColumnType_Text], "DateTime") then type datetime

else if [NewColumnType_Text] = "String" then type text

else if [NewColumnType_Text] = "Boolean" then type logical

else if [NewColumnType_Text] = "Double" or [NewColumnType_Text] = "Single" then type number

else if [NewColumnType_Text] = "datetime" then type datetime

else if [NewColumnType_Text] = "DateTimeOffset" then type datetimezone

else type any),

ColumnTypeTable = Table.RemoveColumns(ColumnTypeText2Table ,{"NewColumnType_Text"}),

DatatypeList = Table.ToRows(Table.RemoveColumns(Table.Join(ColumnNameTable, "Index", ColumnTypeTable, "Index"),{"Index"})),

RetypedValues = Table.TransformColumnTypes(RenamedValues, DatatypeList, "en-US"),

output = RetypedValues

in

output

in

AzureMLJsonToTable

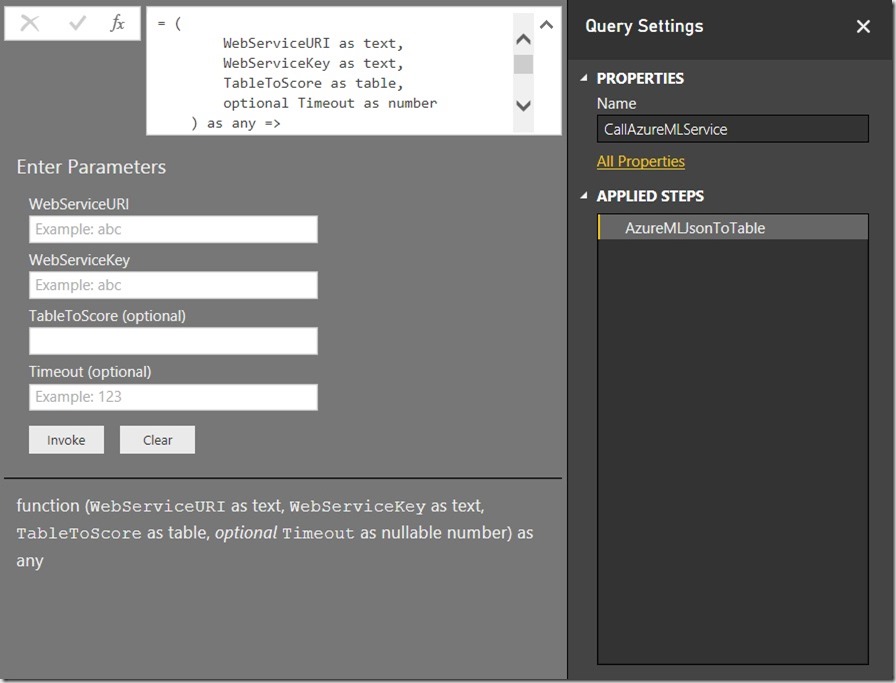

CallAzureMLService – uses the two function from above to convert a table to JSON, POST the JSON to Azure ML and convert the result back to a Power Query Table.

let

CallAzureMLService = (

WebServiceURI as text,

WebServiceKey as text,

TableToScore as table,

optional Timeout as number

) as any =>

let

WebTimeout = if Timeout = null then #duration(0,0,0,100) else #duration(0,0,0,Timeout) ,

WebServiceContent = ToAzureMLJson(TableToScore),

WebResponse = Web.Contents(WebServiceURI,

[Content = Text.ToBinary(WebServiceContent),

Headers = [Authorization="Bearer " & WebServiceKey,

#"Content-Type"="application/json",

Accept="application/json"],

Timeout = WebTimeout]),

output = AzureMLJsonToTable(WebResponse)

in

output

in

CallAzureMLService

Known Issues:

As the [Table to Score] will probably come from a SQL DB or somewhere else, you may run into issues with Privacy Levels/Settings and the Formula Firewall. In this case make sure to enable Fast Combine for your workbook as described here.

The maximum timeout of a Request/Response call to an Azure ML Web Service is 100 seconds. If your call exceeds this limit, you might get an error message returned.I ran a test and tried to score 60k rows (with 2 numeric columns) at once and it worked just fine, but I would assume that you can run into some Azure ML limits here very easily with bigger data sets. As far as I know, these 100 seconds are for the Azure ML itself only. If it takes several minutes to upload your dataset in the POST request, than this is not part of this 100 seconds. If you are still hitting this issue, you could further try to split your table into different batches, score them separately and combine the results again afterwards.

So these are the steps that you need to do in order to use your Azure ML Web Service together with PowerBI:

1) Create an Azure ML Experiment (or use an existing)

2) Publish the Experiment as a Web Service

3) note the URL and the API Key of your Web Service

4) run PowerBI and load the data that you want to score

5) make sure that the dataset created in 4) has the exact same structure as expected by Azure ML (column names, data types, …)

6) call the function “CallAzureMLWebService” with the parameters from 3) and 5)

7) wait for the Web Service to return the result set

8) load the final table into PowerBI (or do some further transformations before)

And that’s it!

Download:

You can find a PowerBI workbook which contains all the functions and code here: CallAzureMLWebService.pbix

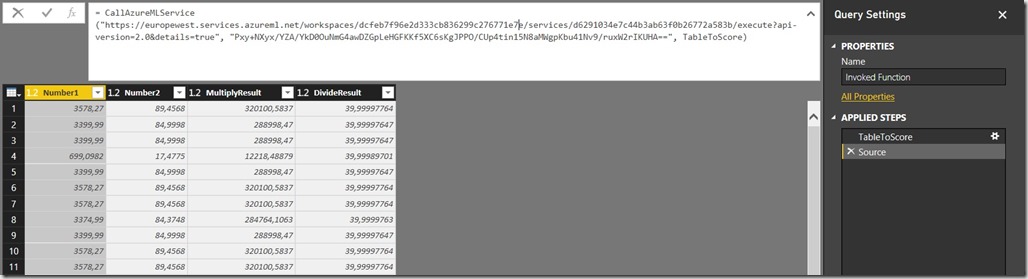

I used a simple Web Service which takes 2 numeric columns (“Number1” and “Number2”) and returns the [Number1] * [Number2] and [Number1] / [Number2]

PS: you will not be able to run the sample as it is as I changed the API Key and also the URL of my original Azure ML Web Service

Hi there

Ive been looking for exactly this! So glad Ive found this.

One question tho, what if I subscribe Cluster API via data.marketplace, how do I consume that API via PowerBI? In effect I wont have API Key and URL.

Hope you can advice.

Thanks

Peddie

Hi Ped,

I have not worked with the Azure Marketplace API yet but I wonder why you would need to send/push data to them?

There is a pre-built Connector for Microsoft Azure Marketplace, why does this one not work?

or are you talking about a published Azure ML webservice on the marketplace which you can use to score your own data?

-gerhard

Thanks for this Gerhard. I have an interesting problem with my implementation of this. First, I am using a custom query to call the Twitter API based on a search term that is a parameter. Once I have transformed the response into the Power Query table with the correct labels/data types, I am using your code above to call my Azure ML text sentiment analysis service. Everything works fine in the query editor. However, when I change the search term or try to close and apply, I get a 400 Bad Request error. At first I thought maybe there was some non-English text, but that doesn’t seem to be the issue. What is really bizarre is that my results load into query editor (I can see the returned table with my experiment output columns as they should be), but fail to load to the dashboard. Any thoughts? I am happy to share my PBIX with you.

In general there should be no difference whether you run it at design time or when you actually load the data into the datamodel.

just send me/share the pbix file so i can take a closer look and investigate into the issue.

Kind regards,

-gerhard

I’ve found that if I import the Tweets from an actual file, rather than pull them into PBI directly via API, the Azure ML web call works fine. However, if I pull the tweets in via API and then send them to Cognitive Services, it works fine.

Another interesting problem that I can’t figure out (but will try to add in the Wait function you reference) is that my Azure ML web call seems to be limited to 2199 tweets being sent. If I keep top rows at 2199, I get a successful web call with my sentiment scores, but if I change that Keep Top Rows to 2200+, I get a 400 error.

I will also try batching my web calls using a technique from Gil at DataChant: http://datachant.com/2016/11/16/improve-refresh-time-batching-web-api-calls-part-2/

I figured it out after examining tweet #2200 and some others that seemed to be causing the error. I had tried to clean the tweets using the built in Clean function, but I discovered that some tweets had a “\” symbol, and when removed, the tweets are successfully analyzed by the ML model. Perhaps the back slash impacts the feature hashing in the model.

Hi David,

thanks for the Feedback!

what you are saying is that the issue relates to Azure ML and not PowerBI – right?

Have you had success with the batching? I was thinking of this also but did not have any time yet to do some more investigation

-gerhard

Hi Gerhad,

I am able to get the Azure ML data in the invoked function table but I dont see that table in the Relationships tab. I would like to join my Azure ML data with other tables.

Your response will be appreciated.

Ignore my previous comment. I was able to find the invoked function table. It was all the way at the right side of the screen. I had to scroll a lot to get it.

Hi Gerhard,

This is exactly the kind of solution I have been looking for, for a long time!

I have a doc file that I have converted to csv to run a text mining experiment on it (through AzureML). However, when I call the function “CallAzureMLWebService”, I am getting the following expression error:

Expression.Error: We cannot convert a value of type Record to type Text.

Details:

Value=Record

Type=Type

I understand that Record is JSON object and that the function is failing when it is trying to convert JSON back to Table, but beyond that I do not know how to work with JSON. Would you know why this is happening and what I can do to get rid of it? Any help is greatly appreciated!

Thanks,

Priyal Turakhia

hmm, thats pretty hard to debug without having the actual code

can you send me the workbook (in case it does not contain any sensitive data)

or at least some sample of the input (PQ Dataset) and the output (Azure ML expected format)

-gerhard

HI, I have been looking for this for quite a time. Thanks for sharing this. I have made a logistic regression model in azure ml studio and i am using your codes to score the database, but i am getting this error – Formula.Firewall: Query ‘Invoked Function (2)’ (step ‘Source’) references other queries or steps, so it may not directly access a data source. Please rebuild this data combination, even though i have change the privacy settings you have mentioned in the article. Please help me with this.

well, the error is not directly related to my code

seems like there is still something wrong with the privacy levels. Did you change them for all sources? the should all be the same type. Also ensure that you change privacy settings of the workbook to “Ignore”

Chris Webb wrote several articles about this topic and how to solve it:

https://blog.crossjoin.co.uk/2017/06/26/data-privacy-settings-in-power-bipower-query-part-3-the-formula-firewall-error/

I am sure you will find the answer there

-gerhard

Hi Gerhard

That’s exactly what I was looking for!

However, the link to the pbix file containing the function seems to be broken.

Could you please let me know where I find the functions?

Thanks!

Julia

Hi Julia,

I just checked the download of the .pbix and it worked fine for me.

However, I will also past the M-code directly in the blog post so it is easier to re-use it

kind regards,

-gerhard

Hi Gerhard

Great, thank you so much!

Can you explain the process of publishing an Azure ML project as a Web Service and how it can be utilized like any other Web Service? How does the interaction between the client and the Web Service work, and in the context of your use case, how does PowerBI using Power Query play a role in this process?

I think this is very outdated and Azure ML services dont work the way they used to work in the past

still, the blog illustrates very well how you can send data from Power BI to external services and process the response within Power BI again