With the recent public release of Power BI, it is finally possible to refresh Power Pivot workbooks online. Once a workbook is uploaded to SharePoint Online and “Enabled” for Power BI, you can schedule an automatic data refresh for the Power Pivot model. However, at the moment only a very limited number of data sources are supported:

- Windows Azure SQL Database data

- Open Data protocol (OData) feeds

- On premises data sources that are enabled for access in Power BI for Office 365

OData feeds are especially popular for publicly available data, for example from Wikipedia. Those public data feeds usually do not require any authentication, so one would expect them to work flawlessly with a scheduled data refresh in Power BI. Well, you would be wrong!

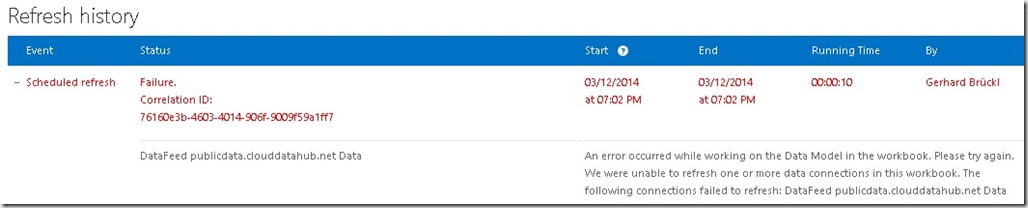

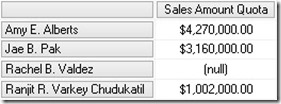

When you create a simple Power Pivot model with one OData source (e.g. Wikipedia), publish it and set up a scheduled data refresh, you will receive the following error:

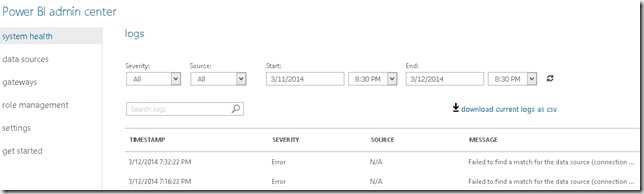

The error message itself does not reveal any insight into the actual error. If you are in the (more or less) lucky situation of also being the owner of the site, you can go to the Power BI Admin Center to get further details on the error.

By downloading the CSV or hovering over a single message, you can see the details:

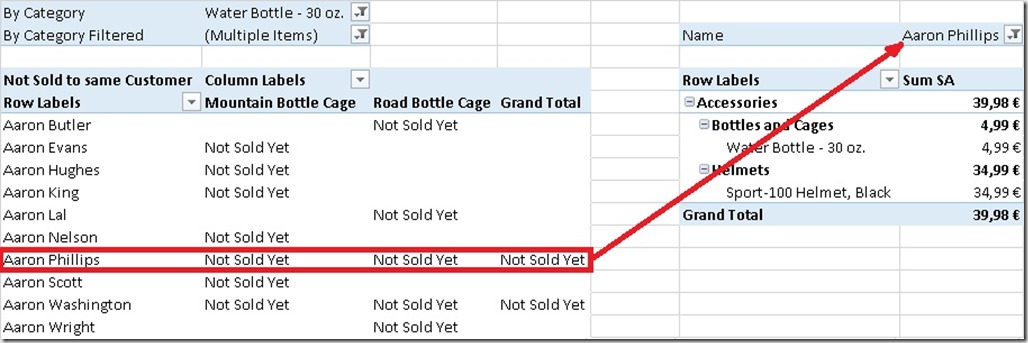

- "Failed to find a match for the data source (connection string: data source=http://publicdata.clouddatahub.net/Web/Tables/fa9af6681cd64206b3aafe6d12408117/V1/Data;include atom elements=Auto;include expanded entities=False;integrated security=SSPI;persist security info=false;time out=600;schema sample size=25;retry count=5;retry sleep=100;keep alive=False;max received message size=4398046511104;base url=http://publicdata.clouddatahub.net/Web/Tables/fa9af6681cd64206b3aafe6d12408117/V1/Data) for the user 'Gerhard.Brueckl@XYZ.onmicrosoft.com'. The user is unauthorized or, the corresponding data source is not created. Check the user's permission to the data source or create a data source for the connection string. Tracing ID: 23f244ad-7921-48f7-b13a-ef68e8cf5503"

It states that either no corresponding data source exists for my user or I do not have permission to access it.

In our case, the reason is that our user is unauthorized. The data source that was actually used does not necessarily have to exist in the user’s data sources (those can be found via “My Power BI”) for scheduled data refresh to work.

So you may ask yourself what could possibly go wrong here, since you are just accessing a public OData feed?!?

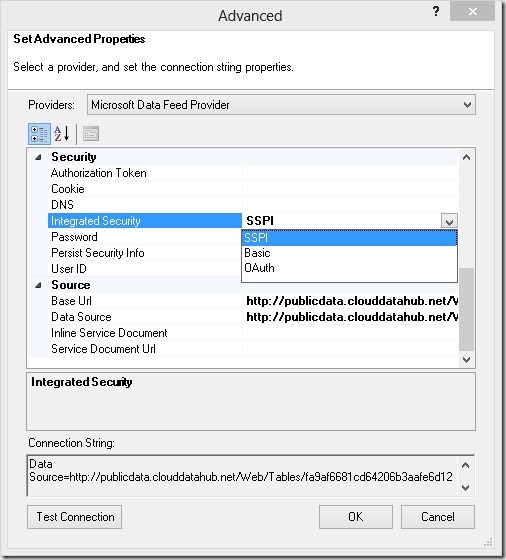

The reason is that the “Integrated Security” property in the connection string is set to SSPI by default. In your local Excel/Power Pivot model this works just fine, as the SSPI context can be resolved and sent to the OData feed. Sure, the OData feed ignores this information because it is public, but from an authentication point of view everything works correctly!
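To see which authentication mode a model is using, you can look at the connection string, for example the one quoted in the Admin Center error message. The following is a minimal Python sketch, assuming the string is a plain `key=value;key=value` list like the one above; `parse_connection_string` and `authentication_mode` are hypothetical helpers for illustration, not part of any Power Pivot or Power BI API.

```python
from __future__ import annotations


def parse_connection_string(conn: str) -> dict[str, str]:
    """Split a 'key=value;key=value' string into a dict with lower-cased keys.

    Only the first '=' in each part separates key from value, so URLs in
    values survive. Quoted values containing ';' are NOT handled (assumption:
    the connection strings inspected here contain none).
    """
    props: dict[str, str] = {}
    for part in conn.split(";"):
        if not part.strip():
            continue  # skip empty segments, e.g. from a trailing ';'
        key, _, value = part.partition("=")
        props[key.strip().lower()] = value.strip()
    return props


def authentication_mode(conn: str) -> str:
    """Return the 'integrated security' value, or '' if the key is absent."""
    return parse_connection_string(conn).get("integrated security", "")


# Shortened version of the connection string from the error message above.
conn = ("data source=http://publicdata.clouddatahub.net/Web/Tables/"
        "fa9af6681cd64206b3aafe6d12408117/V1/Data;"
        "integrated security=SSPI;persist security info=false")
print(authentication_mode(conn))  # prints SSPI
```

A value of `SSPI` here is exactly the setting that cannot be resolved during a scheduled refresh in Power BI.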

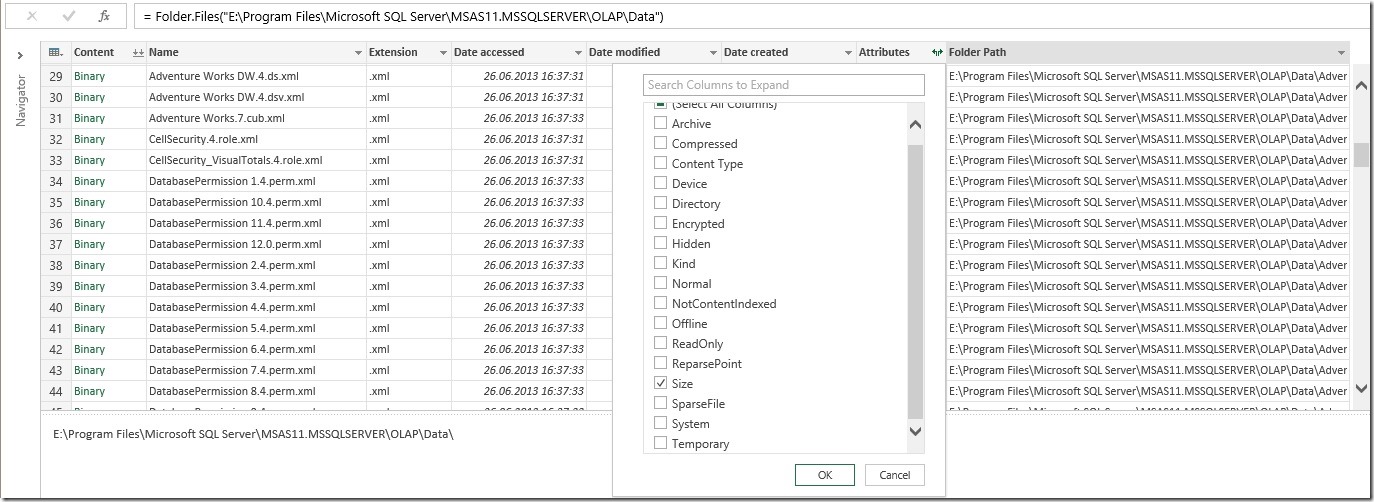

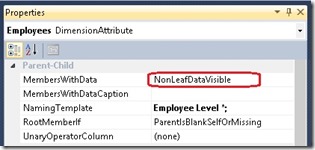

The problem with Scheduled Data Refresh is that SSPI simply does not work: it cannot be resolved, and Power BI cannot use any service user for your request if SSPI is defined. The first thing that comes to mind is to simply set the authentication method to “Anonymous”, which would be perfectly fine for a public data feed. However, Power Pivot does not support Anonymous authentication for OData sources.

Since SSPI does not work, we need to use “Basic” here and provide a “User ID” and “Password”. Which User ID and Password, you may ask? The simple answer is: “It does not matter!”

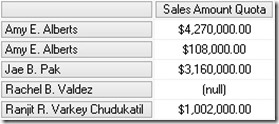

You can provide any values here; in my case I used “random” for both User ID and Password! Another thing you need to ensure is that “Persist Security Info” is set to True, so that your “Password” is stored in the final connection string. This makes Power BI think that authentication is already defined correctly within the connection string, so Power BI does not have to do anything.
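In practice you change these settings in the connection properties of the Power Pivot model, not by editing text. Still, it can help to see what the resulting connection string should roughly look like. The following Python sketch is purely illustrative: `set_dummy_basic_auth` is a hypothetical helper, and it assumes the provider writes Basic authentication as `integrated security=Basic` together with `user id` and `password` keys, which is an assumption rather than documented behavior.

```python
from __future__ import annotations


def set_dummy_basic_auth(conn: str, user: str = "random",
                         password: str = "random") -> str:
    """Rewrite a 'key=value;...' connection string to use Basic auth with
    placeholder credentials and persisted security info.

    Assumption (hypothetical): the provider accepts 'integrated security=Basic'
    plus 'user id' and 'password' keys. Values containing ';' are not handled.
    """
    props: dict[str, str] = {}
    for part in conn.split(";"):
        if part.strip():
            key, _, value = part.partition("=")
            props[key.strip().lower()] = value.strip()
    props["integrated security"] = "Basic"   # replaces the default SSPI
    props["user id"] = user                  # any value works for a public feed
    props["password"] = password
    props["persist security info"] = "true"  # keep the password in the string
    return ";".join(f"{k}={v}" for k, v in props.items())


# Placeholder URL for illustration only.
before = ("data source=http://example.org/V1/Data;"
          "integrated security=SSPI;persist security info=false")
print(set_dummy_basic_auth(before))
# prints:
# data source=http://example.org/V1/Data;integrated security=Basic;persist security info=true;user id=random;password=random
```

Existing keys keep their position because Python dicts preserve insertion order, so only the new `user id` and `password` entries are appended at the end.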

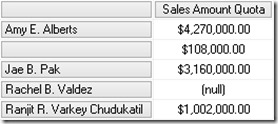

Once you change those settings, your data refresh will work like a charm.

One last thing you may notice is the “Running Time”. The refresh takes significantly longer in Power BI than when it is done locally. Just keep that in mind, especially for bigger data feeds.

In the future, I hope that Microsoft will introduce some kind of “Anonymous” authentication in the Power Pivot dropdown, or simply check whether the OData feed requires any authentication at all and, if not, override the authentication mode specified in the connection string when refreshing.