One of the coolest features of Power BI is that I integrates very well with other tools and also offers a lot of interfaces which can be used to extend this capabilities even further. One of those is the R Integration which allows you to run R code from within Power BI. R scripts can either be used as a data source or for visualizing your data. In this post I will focus on the data source component and show how you can use a locally stored R script and execute it directly in Power BI. Compared to the native approach where you need to embed the R code in the Power BI file, this has several advantages:

- Develop R script in familiar external tool like RStudio

- Integration with Source Control

- Leverage Power BI for publishing and visualizing results

Out of the box Power BI only supplies one function to call R scripts as a data source which is R.Execute(text). Usually, when you use the wizard, it simply passes your R script as a hardcoded value to this function. Knowing the power of Power BI and its scripting language M for data integration made me think – “Hey, as R scripts are just text files and Power BI can read text files, I could also dynamically read any R script and execute it!”

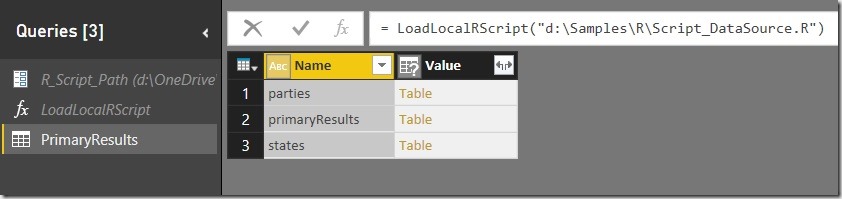

Well, turns out to be true! So I created a little M function where I pass in the file-path of an existing R script and which returns a table of data frames which are created during the execution of the script. Those can then be used like any other data sets/tables within Power BI:

And here is the corresponding M code for the Power Query function:

(Thanks also to Imke Feldmann for simplifying my original code to the readable one below)

- let

- LoadLocalRScript = (file_path as text) as table =>

- let

- Source = Csv.Document(File.Contents(file_path),[Delimiter=#(lf), Columns=1, Encoding=1252, QuoteStyle=QuoteStyle.None]),

- RScript = Text.Combine(Source[Column1], "#(lf)"),

- output = R.Execute(RScript)

- in

- output

- in

- LoadLocalRScript

First we read the R script like any other regular CSV file but we use line-feed (“#(lf)”) as delimiter. So we get a table with one column and one row for each line of our original R script.

Then we use Text.Combine() on our column to transform the single lines back into one long text resembling our original R script. This text can the be passed to the R.Execute() function to return the list of R data frames created during the execution of the script.

And that’s it! Any further steps are similar to using any regular R script which is embedded in Power BI so it is up to you on how you proceed from here. Just one thing you need to keep in mind is that changing the local R script might break the Power BI load if you changed or deleted any data frames which are referenced in Power BI later on.

One issues that I came across during my tests is that this approach does not work with scheduled refreshes in the Power BI Web Service via the Personal Gateway. The first reason for this is that it is currently not possible to use scheduled refresh if custom functions are involved. Even if you can work around this issue pretty easily by using the code from above directly in Power Query I still ran into issues with different privacy levels for the location of the R script and the R.Execute() function. But I will investigate into those issues and update this blog post accordingly (see UPDATE below).

For the future I hope that is fixed by Microsoft and Power BI allows you to execute remote scripts natively – but until then, this approach worked quite well for me.

UPDATE:

To make the refresh via the Personal Gateway work you have to enable “FastCombine”. How to do this is described in more detail here: Turn on FastCombine for Personal Gateway.

In case you are interested in more details on this approach, I am speaking at TugaIT in Lisbon, Portugal this Friday (20th of May 2016) about “Power BI for the Data Scientist” where I will cover this and lots of other interesting topics about the daily work of a data scientist and how PowerBI can used to ease them.

Downloads:

Power BI Workbook: Load_Local_R_Script_wFunction.pbix

Sample R Script: Sample_R_Script.r