Calculating the Events-In-Progress is a very common requirement and many of my fellow bloggers like Chris Webb, Alberto Ferrari and Jason Thomas already blogged about it and came up with some really nice solutions. Alberto also wrote a white-paper summing up all their findings which is a must-read for every DAX and Tabular/PowerPivot developer.

However, I recently had a slightly different requirement where I needed to calculate the Events-In-Progress for Time Periods – e.g. the Open Orders in a given month – and not only for a single day. The calculations shown in the white-paper only work for a single day so I had to come up with my own calculation to deal with this particular problem.

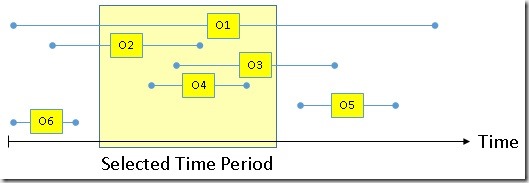

Before we can start we need to identify which orders we actually want to count if a Time Period is selected. Basically we have to differentiate between 6 types of Orders for our calculation and which of them we want to filter or not:

| Order | Definition |

| Order1 (O1) | Starts before the Time Period and ends after it |

| Order2 (O2) | Starts before the Time Period and ends in it |

| Order3 (O3) | Starts in the Time Period and ends after it |

| Order4 (O4) | Starts and ends in the Time Period |

| Order5 (O5) | Starts and ends after the Time Period |

| Order6 (O6) | Starts and ends before the Time Period |

For my customer an order was considered as “open” if it was open within the selected Time Period, so in our case we need to count only Orders O1, O2, O3 and O4. The first calculation you would usually come up with may look like this:

- [MyOpenOrders_FILTER] :=

- CALCULATE (

- DISTINCTCOUNT ( ‘Internet Sales’[Sales Order Number] ),

- FILTER (

- ‘Internet Sales’,

- ‘Internet Sales’[Order Date]

- <= CALCULATE ( MAX ( ‘Date’[Date] ) )

- ),

- FILTER (

- ‘Internet Sales’,

- ‘Internet Sales’[Ship Date]

- >= CALCULATE ( MIN ( ‘Date’[Date] ) )

- )

- )

[MyOpenOrders_FILTER] :=

CALCULATE (

DISTINCTCOUNT ( 'Internet Sales'[Sales Order Number] ), -- our calculation, could also be a reference to measure

FILTER (

‘Internet Sales’,

‘Internet Sales’[Order Date]

<= CALCULATE ( MAX ( 'Date'[Date] ) )

),

FILTER (

‘Internet Sales’,

‘Internet Sales’[Ship Date]

>= CALCULATE ( MIN ( 'Date'[Date] ) )

)

)

We apply custom filters here to get all orders that were ordered on or before the last day and were also shipped on or after the first day of the selected Time Period. This is pretty straight forward and works just fine from a business point of view. However, performance could be much better as you probably already guessed if you read Alberto’s white-paper.

So I integrate his logic into my calculation and came up with this formula (Note that I could not use the final Yoda-Solution as I am using a DISTINCTCOUNT here):

- [MyOpenOrders_TimePeriod] :=

- CALCULATE (

- DISTINCTCOUNT ( ‘Internet Sales’[Sales Order Number] ),

- GENERATE (

- VALUES ( ‘Date’[Date] ),

- FILTER (

- ‘Internet Sales’,

- CONTAINS (

- DATESBETWEEN (

- ‘Date’[Date],

- ‘Internet Sales’[Order Date],

- ‘Internet Sales’[Ship Date]

- ),

- [Date], ‘Date’[Date]

- )

- )

- )

- )

To better understand the calculation you may want to rephrase the original requirement to this: “An open order is an order that was open on at least one day in the selected Time Period”.

I am not going to explain the calculations in detail again as the approach was already very well explained by Alberto and the concepts are the very same.

An alternative calculation would also be this one which of course produces the same results but performs “different”:

- [MyOpenOrders_TimePeriod2] :=

- CALCULATE (

- DISTINCTCOUNT ( ‘Internet Sales’[Sales Order Number] ),

- FILTER (

- GENERATE (

- SUMMARIZE (

- ‘Internet Sales’,

- ‘Internet Sales’[Order Date],

- ‘Internet Sales’[Ship Date]

- ),

- DATESBETWEEN (

- ‘Date’[Date],

- ‘Internet Sales’[Order Date],

- ‘Internet Sales’[Ship Date]

- )

- ),

- CONTAINS ( VALUES ( ‘Date’[Date] ), [Date], ‘Date’[Date] )

- )

- )

I said it performs “different” as for all DAX calculations, performance also depends on your model, the data and the distribution and granularity of the data. So you should test which calculation performs best in your scenario. I did a simple comparison in terms of query performance for AdventureWorks and also my customer’s model and results are slightly different:

| Calculation (Results in ms) | AdventureWorks | Customer’s Model |

| [MyOpenOrders_FILTER] | 58.0 | 1,094.0 |

| [MyOpenOrders_TimePeriod] | 40.0 | 390.8 |

| [MyOpenOrders_TimePeriod2] | 35.5 | 448.3 |

As you can see, the original FILTER-calculation performs worst on both models. The last calculation performs better on the small AdventureWorks-Model whereas on my customer’s model (16 Mio rows) the calculation in the middle performs best. So it’s up to you (and your model) which calculation you should prefer.

The neat thing is that all three calculations can be used with any existing hierarchy or column in your Date-table and of course also on the Date-Level as the original calculation.

Download: Events-in-Progress.pbix

nice piece of work, Gerhard! Keep up the great work and contribution to the community! Happy Holidays!

Thanks for sharing!

I found that in the real world (hard to see in Adventure Works) you can achieve a good optimization by applying a filter on a low-cardinality column in the fact table. For example, if you are filtering a range of dates and the maximum distance between Order Date and Ship Date is 60 days, you might exclude all the “other” years. Depending on data distribution, this type of optimization can be very effective (you should remove at least 80-90% of rows in order to see the improvement).

Hey Marco,

I am aware that it is very beneficial in terms of performance if the distance between the two used dates (Order Date and Ship Date) is low but what exactly do you mean by “excluding all the ‘other’ years”?

I guess what you wanted to point out is that if e.g. August 2014 is selected and the maximum distance is 60 days the calculation only needs to consider June 2014 until October 2014 which would improve performance significantly

-gerhard

Yes exactly that!

Hey Gerhard,

this is an awesome piece of work, but it seems I have some trouble to use.

In my scenario I use an date table that is not related to the facttable, using all of your 3 solutions provide the same results (gladly).

This is something like an aggregated distinctcount over time …

Unfortunately this does not work with PowerView due to the lack of the relationship. As soon as I create the relationship I no longer have my (aggregated distinct count over time). Do you have an idea how I can solve my problem?

Kind regards and once again thanks for sharing

Tom

Hi Tom,

what exactly do you mean by “it does not work with PowerView”?

I guess you are referring to dynamic filtering? e.g. clicking on a bar-chart?

As soon as you have an active relationship between the Fact-table and the Date-table you would need to adopt the calculations. Otherwise you would have two relationships, the active one and the one that is created on the fly using FILTER/CONTAINS

-gerhard

Hey Gerhard,

in my first solution there was no relationship between the date table and the fact table this worked smoothly using the generate/contains approach.

Until PowerView reminded me that it needs a relationship, this was the reason why I wrote “it does not work in PowerView” 🙂

Now I have two date dimensions one is related (the normal date table) and one that is not related (even not inactive), I call it date_calc.

I adjusted the Generate/Contains approach a little and voila. I’m glad that there are some days off ahead, so that there is some time to optimize my solution.

Now I’m able to track the development (evolution) of things within a certain timeframe using a status, where the things reach a status (hopefully) or stay at their first status (the do not evolve).

Once again, thanks for your post! It saved me a lot of thinking 🙂

Hey Gerhard,

Thank you for this great post. I have a similar but slightly different question:

What would I do If i need to count the number of sales orders that have been ordered but not delivered on a single day?

Thank you!

Wesley

Hi Wesley,

I think this is very similar to what Chris Webb discussed in his post: http://blog.crossjoin.co.uk/2013/06/13/a-new-events-in-progress-dax-pattern/

and what is also used as a starting point for this blog post.

If your request is simply to find orders which were ordered but not delivered on the very same day, you could imply use this formula:

SoldButNotShippedSameDay:=CALCULATE(

DISTINCTCOUNT(‘Facts'[OrderID]),

FILTER(‘Facts’,

‘Facts'[OrderDate] <> ‘Facts'[ShipDate])

)

hope that helps to solve your issue

-gherard

Hi Gherard,

I have a similar problem to Wesley. The problem I am encountering is that I am receiving a time period from the report and would like to evaluate if an order was shipped but not received on the first day of that date range.

Thanks!

Nishka

Hi Nishka,

do you mean that if you select July, you want to find orders that have been shipped on July 1st but not received on July 1st?

or shipped on July 1st but not received in the whole time-period of the report?

-gerhard

Yes I mean the first scenario, if the selection is June I want to check if on June 1st the order was shipped but not received

sorry for the delay but I was on vacation.

so for orders that have been shipped on June 1st but not received on

a) June 1st

b) until June 31st (last day of selection)

c) until now (not received at all)

which one is correct for you?

Hello,

I have utilized the dax code behind this idea in an effort to breakdown open case age by month. Had to make a slight modification in terms of Min/Max and the inequality signs to pull the total open cases at the end of the month:

Open Cases = CALCULATE( DISTINCTCOUNT(DocWebTouchPoints_dw_Incident[IncidentID]),

FILTER( DocWebTouchPoints_dw_Incident, DocWebTouchPoints_dw_Incident[ArriveDateTime] CALCULATE(MAX(‘Date Table'[Date Key]))))

However, I am still getting total cases that arrived prior to end of month AND closed within the last month. (There is no relationship between fields used.)

Would greatly appreciate your assistance or a redirect to a webpage resource as this has been boggling my mind for a few days now.

Woops, this is the code:

Open Cases = CALCULATE( DISTINCTCOUNT(DocWebTouchPoints_dw_Incident[IncidentID]),

FILTER( DocWebTouchPoints_dw_Incident, DocWebTouchPoints_dw_Incident[ArriveDateTime] CALCULATE(MAX(‘Date Table'[Date Key]))))

Hi Vanessa,

it seems that the operator (“=”) got lost in your comment – assuming that it was an equal sign as in your other comment. then the calculation would simply return all distinct incidents which ARRIVED on the last day of the selected time period.

you would need to filter on both, Arrival-Date and Close-Date.

You should first check out the articles that i linked to at the beginning of the blog and make your calculation work for a single day – then you can adopt it accordingly as described here

kind regards,

-gerhard

Open Cases SR = CALCULATE ( DISTINCTCOUNT(DocWebTouchPoints_dw_Incident[IncidentID]),

FILTER(DocWebTouchPoints_dw_Incident, DocWebTouchPoints_dw_Incident[ArriveDateTime] = CALCULATE(MAX(‘Date Table'[Date Key])) && DocWebTouchPoints_dw_Incident[Type] = “SERVICE REQUEST”))

Hi Gerhard,

I have a similar situtation where user wants to select a date and the report has to find active ppl that point of time. I have only one table which has the start date and end date. And I have a time dimension. I don’t know which column should I join the date dimension to (if i should). As until I join ssas tabular won’t find a unique column.

Kindly share your expert suggestions.

Best Regards

GS

Hi GS,

the links at the very beginning of the post should cover your problem, this post is just an advanced version which also allows you to select a time range (e.g. months or weeks)

In general, you will not have an (active) relationship between your tables as relationships always require you to link two columns directly but do not allow “between”-joins. Thats why we need to use FILTER() and other functions to create this join on the fly.

but there also seems to be an issue with your Date-Table as it should contain unique dates and you should be able to link it to your fact-table – but again, for all Events-In-Progress-Calculations you must not have any active relationships between your tables

hth,

-gerhard

Dear Gerhard

I am new to this blog, my apologies, if I am too direct.

I have a problem for which I am unable to find a solution. I was wondering if you could give some solution, would be really appreciative.

I am working in excel powerpivot, have slight knowledge of DAX.

I have a table in which I have userIDs, and their level play details. The details include level no, start time and end time of each level. I want to calculate the session count and approx duration for the for each session.

The sessions will be ofcourse for each individual user ID.

A Session will/can include multiple levels, I am taking all levels that start within 5 minutes of completion of previous level as one sesssion.

Waiting for any and all guidance.

Best Regards/Aamer

Hi Aamer,

you can probably use the very same formula as I used in my example. You dont even need to take care of your +/- 5 mintues in between levels as you can do a distinct count on UserID and it does not matter whether he played 7 levels or just 1 level.

The average session duration will probably be more tricky. You would need a session-id or something, calculating this on-the-fly might not be feasible in DAX. The only thing I could think of is to create an artificial parent-child hierarchy between the different levels of the user and use the first part as the session-identifier

kind regards,

-gerhard

ok, so I created a little sample-file where I ceate an artificial SessionID calculated column based on start/end-time and the +/-5 minutes rule

you can then use this to drive all your calculations

the file can be found here

http://files.gbrueckl.at/blog/Events-In-Progress_9F14/Sessions.xlsx

let me know if you have any further questions!

-gerhard

Thanks Gerhard

Much appreciated, solved my problem

Best regards

Aamer

Gerhard,

Thanks for this write-up. This is a very common business problem, could be orders or subscriptions like I am working on now. All customers has subscriptions with FromDate and ToDate on them, one row for each subscription, with an ID for the subscription which in addition to the customerid is the row identifier :

CustomerID SubscriptionID FromDate ToDate

1000000 1 05/01/2016 16/03/2016

1000000 2 16/03/2016 NULL

1000001 1 27/05/2013 NULL

………..

I have a couple of million of these in my fact table.

So, counting customers/subscriptions has already been solved thanks to Alberto’s and your article here. However, I would also like to use this table to calculate Churn/Retention etc. for our customers. The new and returning customers pattern may be applicable but that is based on sales transactions and in this case that is not good enough, since customers are billed monthly/quarterly etc. So, to get Churn I need to know which customers did I have last period (month if that is selected, year if that is selected) that I don’t have any longer.

Any thoughts on how to proceed here?

Hi Stian,

thats actually a very interesting question!

So you want to know customers which existed in the past but do not exist anymore in the current period. As we cannot count something that does not exist anymore in the current period, we need to do the calculation the other way round – find active customers in the previous period and check if they still have a valid subscription in the current one.

Unfortunately I do not have too much time at the moment so I can just give you a littel snippet from which you can start working:

Churn:=COUNTROWS(),

FILTER(

ADDCOLUMNS(

CALCULATETABLE(

SUMMARIZE(

Subscriptions,

Subscriptions[CustomerID],

Subscriptions[SubscriptionID]),

“HasNextSubscription”, CALCULATE(COUNTROWS(Subscriptions), Subscriptions[SubscriptionID] > EARLIER(Subscriptions[SubscriptionID])) > 0)

[HasNextSubscription] = TRUE())

There are probably some typos in there but you should get an idea:

Find all customers with their SubscriptionIDs for the previous period

Check if they have any newer SubscriptionsIDs (which I assume is an ascending number?)

if they dont have any newer SubscriptionIDs, they churned

you probably need to add an extra check on the subscription validity and if it includes TODAY to avoid that all todays customers are counted as churn 😉

hope that helps

would be great if you can keep me updated on this!

-gerhard

If you are using SSAS 2016 or PowerBI, you might also take a look at the new set functions like EXCEPT in combination with DAX Variables.

You could calculate a list of customers of the previous period and use EXCEPT to remove the cutsomers of the current period so only the lost customers are left. then you can do a simple COUNTROWS and would also get your results

would also be interesting in terms of performance

another approach would be to flag the last SubscriptionID of each customer in a calculated column as = partitioned by customer

having this flag you can simply extend your existing calculation with [Flag] = TRUE() to get only churned customers

Hi,

I have a very similar case with retail networ where each location of stores across the country has a unique ID. Location are taken over from one company to another (eg. retail chain acquires small shop run by a private owner). Start date and end date of store operations are available. I need to calculate how many time location was taken over during in a selected time period. It would be grate to apply the solution of churning subscribers to my case as well.

I hope Gerhard will help for Stian to go forward with the churn pattern!

Paweł

Gerhard –

I has been a while since you originally posted this. However, I am relatively new with Power BI and I found it extremely helpful. I was able to use your technique to count a client census for our non-profit agency.

Thank you very much for taking the time to explain this.

Richard DeVillers

Gerhard –

As I mentioned above, I was able to use your technique to compute a daily census of clients we have in our child care agency.

However, I am now trying to compute an average daily census by month using thud results. I have tried everything approach I can think of (which is a admittedly limited due to my inexperience with PowerBI) but have not been able to do this. The daily census is correct (using your approach) but I am unable to sum those amounts correctly.

For example, in November 2016 the sum of the daily census is 517. If I manually sum the computed daily census it is correct. I would then divide that by the number of days (30) to get and average daily census of 17.2.

However, I am unable to correctly sum up the daily census amounts for a month. Invariably the amounts are wrong. One measure I tried was this: (Daily Census” is the table and measure I am attempting to sum up)

Census Sum =

CALCULATE(

SUMX(‘Avatar Census’,[Daily Census]),

ALL(‘Avatar Census’)

)

Using this approach i get 713. I tried harding coding the dates and get the same result:

Census Sum =

CALCULATE(

SUMX(‘Avatar Census’,’Avatar Census’[Daily Census]),

ALL (‘Avatar Census’),

‘DateTable'[Month] = 11,

‘DateTable'[Year] = 2016

)

I have concluded that I must be trying to do something that is not permitted for these types of measures in Power BI. I would really appreciate any guidance you could provide.

Many thanks,

Richard DeVillers

Hi Richard,

to create a average of daily values for a given time range you would usually use a calculation like this:

myDailyAverage = AVERAGEX(VALUES(‘DateTable'[Date]), [MyOriginalMeasure])

so for each [Date] the value for [MyOriginalMeausre] is calculated and then an average is calculated

Wow Gerhard! That worked perfectly. Thank you very, very much.

I obviously do not understand some important concepts of Power BI. I would never have thought to use VALUES and datetable as the first parameter of the AVERAGEX function (I tried many, many variations of SUMX / #periods, AVERAGEX, etc). But now that you point it out it is obvious that the VALUES clause controls the range of dates to average.

I need to keep studying.

Again – I really appreciate you taking the time to reply and educate me!

Richard DeVillers

Gerhard,

I have attempted to use your measure; however, it does not seem work… Using Adventure Works 2016 DW, I get respectively 5, 4, and 5 for the first three days of Order Date data (2010-12-29, 2010-12-30, 2010-12-31). Instead, it should be 5, 9, and 14.

In other words, the code from the post is act no different than the distinct count. What am I doing wrong???

Hi Kevin, I would assume that you have an active relationship between ‘Date'[Date] and ‘Internet Sales'[Order Date] which filters the final result back down to the Orders that where placed on the selected day. Can you try to add either ALL(‘Date’) or CROSSFILTER(‘Date'[Date], ‘Internet Sales'[Order Date], None) to the inner ‘Internet Sales’ like this:

CALCULATETABLE(‘Internet Sales’, ALL(‘Date’))

I also came up with this new calculation but have not done any performance testing yet but it should perform also quite well:

MyOpenOrders_Intersect =

VAR DatesInSelection = DATESBETWEEN(‘Date'[Date], MIN(‘Date'[Date]), MAX(‘Date'[Date]))

RETURN CALCULATE(

DISTINCTCOUNT(‘Internet Sales'[Sales Order Number]),

FILTER(

CALCULATETABLE(‘Internet Sales’, ALL(‘Date’)),

COUNTROWS(

INTERSECT(

DatesInSelection,

DATESBETWEEN(‘Date'[Date], ‘Internet Sales'[Order Date], ‘Internet Sales'[Ship Date])))

> 0)

)

i have the same problem here, but when i try this it still only shows in the month of the active relationship.

Hi Josh, did you check that there is no other active relationship interfering with the calculation as described in my previous answer?

I do have an active relationship, is that not possible? I thought i could do something with the ALL function in dax maybe

Sorry misread your reply, yes no other active relationship

can you send me a sample of the file so I can reproduce the issue?

Hi Gerhard,

Can you help in figuring out measure formula for this scenario please?

User provide date range on the date filter created from Date Dimension. Now I have data which comes once in a week,month ,quarter etc . If user select a date range then the values which fall in that date range should show up and also if the data was provided before the min start date should show up as well if that was the only data provided so far. It would be more clear from the example below

for example : Data is in form of StoreNumber,Value, DataDate and its like

ST1,30,4/4/2018

ST1,20,5/10/2018

ST2,33,6/10/2018

ST3,88,12/2/2017

ST3,22,1/1/2018

ST3,11,2/2/2018

ST4,40,7/1/2018

Date range selected on report 3/1/2018 to 6/20/2018

Output should be

ST1,20,5/10/2018 – should show up as it falls in the date range and last value provided for store number 1

ST2,33,6/10/2018 – should show up as it falls in the date range and last value provided for store number 2

ST3,11,2/2/2017 — note here this is out of date range but it should still show up as that was the last data value received for Store Number 3 even though it is outside selected range

ST4,40,7/1/2018 – this value should NOT show up because this is out of date range.

I tried using earlier function to calculate the maxdate for each store data set but can only get data for ST1 and ST2 in output and I am unable to get ST3 record

I am sorry if this is confusing let me know if you have questions.

Thanks,

Richa

you can try this:

LastValue = CALCULATE(SUM(Facts[Value]), CALCULATETABLE(LASTDATE(Facts[DataDate]), DATESBETWEEN(Facts[DataDate], BLANK(), MAX(Facts[DataDate]))))

LastValidValue =

VAR Stores = CALCULATETABLE(VALUES(Facts[Store]), DATESBETWEEN(Facts[DataDate], BLANK(), MAX(Facts[DataDate])))

RETURN CALCULATE(SUMX(Stores, [LastValue]), ALL(Facts))

does this work for you?

(it may not work for the data provided but if you have a snowflake schema it should work)

-gerhard

Hi Gerhard

I am new to power bi and having a hard time with this… i am trying to find the number of open cases at a certain date…. my table has open date and closed date…so far here is the formula i have which gives me all open cases but does not take out the cases that have been closed and I am stuck here. Any help will be appreciated

Backlog = CALCULATE(DISTINCTCOUNT(‘WH CRM'[Index.1]),FILTER(‘WH CRM’,’WH CRM'[Date/Time Opened]=LASTDATE(‘Date'[Date])))

Hi Busola,

couldn’t you just simply take all cases where [Date/Time Openend] <= MAX('Date'[Date]) and further exclude all cases that have been closed already (e.g. ISBLANK([Date/Time Closed])) Backlog = CALCULATE(DISTINCTCOUNT(‘WH CRM'[Index.1]),FILTER(‘WH CRM’,’WH CRM'[Date/Time Opened] <= MAX(‘Date'[Date]) && ISBLANK(’WH CRM'[Date/Time Closed]))) -gerhard

Hi Gerhard;

I’m new in DAX, but it could be my favourite, i have food profitability data set, i have formula and it worked

1. A_Food Profitability Ttl = divide(‘fact_ed_gohr'[A_Cost Food Ttl] , ‘fact_ed_gohr'[A_Food Revenue Ttl],0)

2. A_Food Profitability LY = CALCULATE([A_Food Profitability Ttl],SAMEPERIODLASTYEAR(‘dim_Date'[date]))

when i need Total Year to date (YTD), i have to summarize data like this, and it worked

3.A_Food Profitability YTD = TOTALYTD(sumx(SUMMARIZE(fact_ed_gohr, dim_Date[Date].[Year], dim_Date[Date].[Month], “dd”, ‘fact_ed_gohr'[A_Food Profitability Ttl]), [dd]),dim_Date[date])

but when i need to get YTD Last Year, the formula is missed;

A_Food Profitability YTD = TOTALYTD(sumx(SUMMARIZE(fact_ed_gohr, dim_Date[Date].[Year], dim_Date[Date].[Month], “aa”, ‘fact_ed_gohr'[A_Food Profitability LY]), [aa]),dim_Date[date])

and also if i used this formula

A_Food Profitability YTD LY old = TOTALYTD(‘fact_ed_gohr'[A_Food Profitability LY],dim_Date[date])

would you please give me a clue how to get Summarized YTD Last Year

best regards

vick

Hi vick,

please check out https://www.daxpatterns.com/time-patterns/ and https://www.daxpatterns.com/cumulative-total/

I am sure you will find an answer there

regards,

-gerhard

Hi Gerhard,

I would like to ask, how to count number of all days (Processing Days) for orders O1, O2, O3 and O4 as per your scenarion. But days to be only counted within Selected Time Period (Between Min( ‘Date'[Date]) and MAX ( ‘Date'[Date]) ) assuming, there are no duplicate order_id’s on Order table and Date table has no relationship to Order table. Thank you.

Best Regards,

Stefan

I quickly came up with this solution:

Days Orders Active FILTER =

VAR MaxDate = MAX(‘Date'[Date])

VAR MinDate = MIN(‘Date'[Date])

RETURN

SUMX(

ADDCOLUMNS(

CALCULATETABLE(

‘Internet Sales’,

FILTER (

CALCULATETABLE(VALUES(‘Internet Sales'[Order Date]), ALL(‘Date’)),

‘Internet Sales'[Order Date]

<= CALCULATE ( MAX ( 'Date'[Date] ) ) ), FILTER ( CALCULATETABLE(VALUES('Internet Sales'[Ship Date]), ALL('Date')), 'Internet Sales'[Ship Date] >= CALCULATE ( MIN ( ‘Date'[Date] ) )

),

ALL(‘Date’)),

“Days Active”, (MIN(MaxDate, [Ship Date]) – MAX(MinDate, [Order Date])) * 1),

[Days Active]

)

The inner CALCULATETABLE can be replaced by any of the other patterns mentioned in the blog

Also, I added a sample PBIX file at the end of the blog for you to download. It already contains all the calculations and a separate page for “Days Orders Active”

regards,

-gerhard

Thank you Gerhard,

I see the logic here. but I should have also mentioned that we are still using Excel 2010 PowerPivot. VAR is not working there.. is there any other way?

Best regards,

Stefan

you can simply replace the VARs with the actual formula, I just used it for readability

here is the version without VARs:

Days Orders Active FILTER noVAR =

SUMX(

ADDCOLUMNS(

CALCULATETABLE(

‘Internet Sales’,

FILTER (

CALCULATETABLE(VALUES(‘Internet Sales'[Order Date]), ALL(‘Date’)),

‘Internet Sales'[Order Date]

<= CALCULATE ( MAX ( 'Date'[Date] ) ) ), FILTER ( CALCULATETABLE(VALUES('Internet Sales'[Ship Date]), ALL('Date')), 'Internet Sales'[Ship Date] >= CALCULATE ( MIN ( ‘Date'[Date] ) )

),

ALL(‘Date’)),

“Days Active”, (MIN(MAX(‘Date'[Date]), [Ship Date]) – MAX(MIN(‘Date'[Date]), [Order Date])) * 1),

[Days Active]

)

i come from a programing standpoint and much of DAX leaves me just… baffled

however, i think its very cool, so i keep banging my head against it trying to make pretty spreadsheets

my likely dumb question

you mentioned not being able to use the jedi method because of distinct count

i, however, cannot use it…. because i don’t ‘get’ how to translate it into a measure (i can run the evaluate just fine in a tabular query)

how do i… do that?

reading the posts above, ‘Days Orders Active FILTER noVAR =’ kinda “looks” like it… is that the syntax to use?

thanks so much for taking the time to explain all that you already have

to convert the “Jedi”-method from the whitepaper to a regular measure, you need to use the last parameter from the ADDCOLUMNS function and use it as a measure:

EVALUATE ADDCOLUMNS ( VALUES ( 'Date'[Date] ), "Open Orders", SUMX ( FILTER ( GENERATE ( SUMMARIZE ( 'Internet Sales', 'Internet Sales'[Order Date], 'Internet Sales'[Ship Date], "Rows", COUNTROWS ( 'Internet Sales' ) ), DATESBETWEEN ( 'Date'[Date], 'Internet Sales'[Order Date], 'Internet Sales'[Ship Date] - 1 ) ), 'Date'[Date] = EARLIER ( 'Date'[Date] ) ), [Rows] ) )so in this case your measure woulde be:

MyMeasure = SUMX( … )

thanks, i ran into an issue with

“EARLIER/EARLIEST refers to an earlier row context which doesn’t exist”

i saw in this post a reply from you about 6 years ago

https://javierguillen.wordpress.com/2012/02/06/can-earlier-be-used-in-dax-measures/

i tried to follow your explanation of how you fixed it, but i don’t quite ‘get’ it yet.

i’m still at the stage of plugging in things i find on google and kinda hoping

how would i use earlier in this situation? does it even ‘matter?’, i’m trying to follow along with the events in progress example.

also, i see that there are posts about “summarizecolumns” replacing summarize

does that have any relevance?

thanks for taking the time

could you give some more context? Is it related to the Events-In-Progress pattern described in the blog or is it just a general question about EARLIER/EARLIEST?

Can you share your existing DAX calculation?

-gerhard

Hello Gerhard,

I’m dealing with a work scheduling system & need to know the number of tasks scheduled every week of the year. If a task is not completed in the week originally scheduled, then it should show as being scheduled in the ORIGINAL week PLUS EVERY WEEK following until it is completed. The examples given only seem to count the tasks in the ORIGINAL week scheduled, not every week following. I need them to cumulative count as scheduled until completed. Thoughts?

well, you can use the approach described in this blog post if you simply set the value of the “TaskCompletedAt” column to somewhere in the far future (e.g. 2030-12-31) for tasks that are not completed yet.

you can do this calculation easily in a calculated column

I do a bit of web design coding and am teaching myself some PowerPivot for a project. Getting hung up on one item related to overlapping data points and I can’t quite figure out. Here is my sample data:

Number Start Date Start Time End Date End Time Overlaps

19-0002325 6/24/2019 16:39:10 6/24/2019 16:52:56 No

19-0002326 6/24/2019 20:23:46 6/24/2019 20:49:01 Yes

19-0002327 6/24/2019 20:45:48 6/24/2019 21:16:32 Yes

19-0002328 6/24/2019 21:17:11 6/24/2019 21:36:09 No

19-0002329 6/24/2019 21:36:12 6/24/2019 21:57:20 Yes

19-0002330 6/24/2019 21:36:54 6/24/2019 22:03:07 Yes

How can I modify your example to simply provide a column that indicates if it overlaps?

Hi David,

first I created calculated columns that combine date and time of Start/End (could also be done in Power Query already) and then the actual calculated column for the Overlaps

Start DateTime = [Start Date] + [Start Time]

End DateTime = [End Date] + [End Time]

Overlaps_Calc = COUNTROWS(

FILTER(

ALL(MyTable),

[Number] EARLIER([Number])

&& EARLIER([Start DateTime]) = [Start DateTime]

)

) > 0

regards,

-gerhard

Wow, 5 years and counting on this tread, thanks for all your help Gerhard. Would you be able to point me in the direction to a solution I have been attempting to solve for some time now?

I have a table with a patient admit dates and discharges date in separate columns, and ultimately I wish to count unique patients in a Power BI model, the number of patients “in-house” on any given day, month or year. I hope to display by day, of the current month visually, as well as by prior months daily averages.

Thanks for any direction you can provide.

Terry

Hi Terry,

you can actually take the code as it is and only change the table and column names

what exactly are you struggling with?

regards,

-gerhard

Dear Gerhard, Thank you for this blog post, it is very useful. although in a small dataset I am happy with the first version of the solution (later I will look into optimizing it with the second/third variant), I have questions on two concepts that make this work in practice.

1. When we talk about inactive relationships, every source i have found only discusses “USERELATIONSHIP” but not “FILTER”. In the first code here, FILTER is taking advantage of inactive relationships. Where is this concept documented?

2. I have downloaded your example PBIX and I saw that if we put the measure (that is coded in the first solution) into a visual in PBI, then it will act as a filter for the entire visual. Why is this happening? (I assume it is because PBI creates a single DAX query to get the data content for the visual, and then the filter inside the measure somehow affects the entire data content of the result set. But) where is this behavior explained?

Thanks again,

Máté

1. well, USERELATIONSHIP relies on an existing relationship between two tables which is not the case here

using FILTER and similar functions, you can create a “virtual” relationship to filter table A based on values selected in table B

have a look here:

https://www.sqlbi.com/articles/physical-and-virtual-relationships-in-dax/

2. the visual is filtered because the measure returns BLANK for rows whithout a result and those are then suppressed by the visual. if all values of a visual return BLANK, the whole row is hidden

regards,

-gerhard

Hello Gerhard, thanks for your useful post, its a very good solution because it optimizes a lot my model.

But i have one problem and I am not able to get a solution. When I apply your solution to my model while I am working with Dates it is perfect, but the problem starts when I get to a lower level because i would like to measure O1,O2,O3,O4 in an hour level.

So I create another Calendar table with a Column that is a DateTime and it contains these data (this is an example for one day) and I have more lines for the entire 2020

01/01/2020 0:00:00

…

01/01/2020 17:00:00

…

01/01/2020 23:00:00

So when I apply your measure it shows me an error : ” a date column containing duplicate dates was specified in the call to function datesbetween ”

# Use_of_Licenses =

CALCULATE (

DISTINCTCOUNT (Uso_Licencias[User_Name]),

FILTER (

GENERATE (

SUMMARIZE(

Uso_Licencias,

Uso_Licencias[Create_Date_GMT],

Uso_Licencias[Release_Date_GMT]),

DATESBETWEEN(

Calendario_Hora[DateTime],

Uso_Licencias[Create_Date_GMT]),

Uso_Licencias[Release_Date_GMT]))

),

CONTAINS ( VALUES(Calendario_Hora[DateTime]), [DateTime], Calendario_Hora[DateTime])

So what I would like to measure is the maximum of concurrent users in the day, but for this i need to an hour level but i have these problems.

Do you know any solution for my problem?

Thanks,

Jaime

Hi Jaime,

the problem here is the DATESBETWEEN() function which only works with full days but not with hours. It is a built-in function and not very flexible.

You can try to use FILTER() instead like this:

FILTER(Calendario_Hora[DateTime], AND(Calendario_Hora[DateTime]>=Uso_Licencias[Create_Date_GMT]), Calendario_Hora[DateTime]<=Uso_Licencias[Release_Date_GMT]))) kind regards, -gerhard

Gerhard thanks for your fast answer,

I have applied your change in the measure and now it shows data in my graphic, but the data shown is not real to the actual users in the application. It shows me less than it should.

For example i have this measure that shows me the real users that are connected in an hour of the day, but is sooo inefficient that if I charge more than a week in my model is impossible to work with that report.

VAR MinDate = MIN ( ‘Calendario_Hora'[DateTime] )

VAR MaxDate = MAX ( ‘Calendario_Hora'[DateTime] )

VAR Result =

CALCULATE(

DISTINCTCOUNT( ‘Uso_Licencias'[User_Name] ),

‘Uso_Licencias'[Create_Date_GMT] MinDate,

REMOVEFILTERS( ‘Calendario_Hora’)

)

RETURN

Result

Thanks again Gerhard,

Jaime

Hi again Gerhard,

I have just found a pattern in the data shown in my graphic with your proposed change of putting the FILTER().

We are only measuring Order3 (O3) Starts in the Time Period and ends after it , I am not sure if we are measuring O4, but O1 and O2 are not shown in the data of concurrent users and I would like to measured it.

I suppose O1 and O2 are not shown in my graphic because with this “(Calendario_Hora[DateTime]>=Uso_Licencias[Create_Date_GMT]) ” we are not taking account the ones that start before.

Any advice of how can we solve this?

Thanks,

Regards

Jaime

well, the original post was about showing O1-O4

as you changed the formula it is now producing a different result

if you want to include O1 and O2 please refer to the original formula

the performance will decrease significantly with the number of rows that is returned by DATESBETWEEN/FILTER

if you change the granularity to hours or even minutes, this number will increase A LOT

you can try to use the other formula that I also described in the post

another option might be to separate date and time into two tables

If you analyze a single day, use the time-table

if you want to analyze multiple days, use the date table

Hi Gerhard, I have the same hourly granular requirement; I need to count the number of active machines in a period of time. A big challenge is that some machines are active for over 24 hours, so when I add the measure to a matrix (hours on rows, machines on columns), the measure counts backwards, meaning it uses the end time in the current day instead of the next day. Any suggestions on how to resolve this problem?

Hi Kim,

so you have a time-dimension for the time intervals on each day (e.g. Hours 0-23, Minutes, …) and you use that on your matrix, right?

and your facts are linked to it only by time, but not by date and now you run into issues if a machine runs from 23:00 until 3:00 the next day

is that the problem?

-gerhard

Yes, that is correct. I actually implemented a query inspired by this thread which is actually producing results for 95% of all cases, even the ones that end on a future date. The issue only appears when the future end time is before the start time, i.e.

Start Date = July 7

Start Time = 10AM

End Date = July 8

End Time = 9AM

This case will not be counted. However, if the end time was 11AM, then the case would be counted.

Here is the query I’m using:

# of Active Machines (time_v2) =

CALCULATE(

[# of Jobs],

FILTER(

GENERATE(

SUMMARIZE(

CALCULATETABLE( Jobs, ALL( ‘Time Table’ ) ),

Jobs[Job Start Time],

Jobs[Job End Time]

),

FILTER(

VALUES( ‘Time Table'[Time] ),

AND(

‘Time Table'[Time] >= Jobs[Job Start Time],

‘Time Table'[Time] <= Jobs[Job End Time]

)

)

),

CONTAINS(

VALUES( 'Time Table'[Time] ),

'Time Table'[Time],

'Time Table'[Time]

)

),

CROSSFILTER( 'Time Table'[Time], Jobs[Job Start Time], NONE )

)

It would definitely work if you’d have a dimension that combines date+time into one column – then the less-equal and greater-equal would just work fine

alternatively you could try to extend your existing formula and extend the logic in the FILTER() to work differently if [Job Start Date] and [Job End Date] are not on the same day

Hi Gerhard, responding to your comment at a higher comment level due to your website constraints.

I’m a bit confused on what you mean by extending the filter table, because FILTER only allows one table. Are you suggesting I use CALCULATE here?

no, I was referring to using additional conditions in the FILTER() function and not just the existing ones which only filtered on the time but not on the date

Hey Gerhard, in order to use additional conditions to filter the date table as well as the time table, I would need to pass in both of those tables into the function, but it only allows for one. Do I then use nested FILTER statements to solve for this?

so I had a look and tried to reproduce your isse.

It seems the easiest way to fix this is to have a single column that combines the date and time values. The rest of the calculations is then the very same as described in the blog post

would that work for you?

possibly yes. So it would be a column with all the possible combinations of days and time? Would that column have to be in my fact table? My time table is in seconds, 86,400 rows. And date table is about 5 years, 2,191 rows.

Also, this resource has a similar challenge and interesting solution, trying to figure out if it can solve our problem here.

can you try this code, it should do what you want without having to introduce a new table. It needs independent date and time tables though but I think you have those already:

# of Active Machines (time_v3) = CALCULATE( [# of Jobs], FILTER( GENERATE( SUMMARIZE( CALCULATETABLE( Jobs, ALL( 'Time Table' ), ALL('Date Table') ), Jobs[Job Start Date], Jobs[Job Start Time], Jobs[Job End Date], Jobs[Job End Time] ), FILTER( CROSSJOIN( VALUES('Time Table'[Time]), VALUES('Date Table'[Date]) ), AND( 'Date Table'[Date] +'Time Table'[Time] >= Jobs[Job Start Date] + Jobs[Job Start Time], 'Date Table'[Date] +'Time Table'[Time] <= Jobs[Job End Date] + Jobs[Job End Time] ) ) ), CONTAINS( VALUES( 'Time Table'[Time] ), 'Time Table'[Time], 'Time Table'[Time] ) && CONTAINS( VALUES( 'Date Table'[Date] ), 'Date Table'[Date], 'Date Table'[Date] ) ) ,CROSSFILTER( 'Time Table'[Time], Jobs[Job Start Time], NONE ) ,CROSSFILTER( 'Date Table'[Date], Jobs[Job Start Date], NONE ) )I’m new to DAX however fluent in SQL. I’m adapting your code to allow users to select a year month then display the case load for case managers (healthcare). Since the cases counted are in only Use Case 04, I suspect the filter context is the issue. I have two slicers on the page:

1. Assigned Date using the between slicer parameter set for the start of the month and the end of the month

2. Expiration Date using the between slicer setting as well with the date at the start of the month and the end of the month

When the Calendar[Date} was used as the single slicer for the Assigned Date – the case counts are too low as well as only use case 04 were counted.

Please advise – M Mara

sounds like you need two date-dimensions (and two slicers) – one linked to AssignedDate and the other linked to ExpirationDate

then you can select a month in the AssignedDate-Dimension to get all cases that where assigned in a particular month

to further limit the results to those that expired in a specific month you can additionally filter the ExpirationDate-Dimension

-gerhard

Hi Gerhard,

Kind of new bee in the world of Dax.

I came across an ask to present the open tickets by end of day/week/Month/Quarter and Year

My data is as below. (No close date meaning its still open)

TicketNumber CreationDate CloseDate

ABC 3-Jun-21 3-Jun-21

DEF 8-Jul-21

GHI 5-Jul-21

XYZ 12-May-21 1-Jun-21

I am having a date table which is related to creationdate

Also, the major ask related to this is to click on the open case count and drill to details list.

Can you please guide on this two needs please?

Thanks in advance.

Sagar

check out Chris Webbs blog post on this topic which I also referenced

https://blog.crossjoin.co.uk/2013/06/13/a-new-events-in-progress-dax-pattern/

to fix the the empty CloseDate you can either replace NULLs with the current date or change the logic in DAX according to Chris’ post

Hi Gerhard,

thank a lot for an article – I think it’s one of the most common problem. I have a similar but in my case I need to sum all the values from given period of time – not just counr them.

So my case is pretty similar but in addition and my table look like this (date yyyy-mm-dd):

Id, start, end, value

1 2022-01-01 2022-02-01 5

2 2022-03-01 2022-03-03 8

3 2022-02-01 2022-03-10 1

4 2022-01-05 2022-02-01 15

5 2022-01-15 2022-02-15 4

Lets assume each record is a job with its start and end time and value provides number of active CPUs.

I need to sum all active CPUs and provide the number of active jobs.

Thanks in advance for your help!

Regards

Woj

Sorry – just to add in the DAX formula I replaced distincount with SUM – but I’m afraid it gives wrong values..

so the formula is designed to work with any aggregation so replacing DISTINCTCOUNT() in the second line with SUM() should give you the right results

the outermost FILTER() just returns a table with all the relevant events in the time-frame – what you do with that table is up to you and there is no difference whether you use DISTINCTCOUNT(), SUM(), MAX(), etc.

can you please verify the results again after using SUM()

Ok the problem is that SUM seam to not work properly because within a day/hour or even a minute the same CPU core might be used (and released) several times. And the formula returns probably agregated value..

So the example I provided is simplified – in real the date is very granular and events (records) appear in seconds. This is probably a CPU monitoring log.

Start Time End time Active CPUs

01/01/2022 12:28:32 01/01/2022 12:34:30 72

01/01/2022 12:35:15 01/01/2022 12:47:32 72

01/01/2022 17:02:14 04/01/2022 00:07:04 36

01/01/2022 17:05:51 01/01/2022 17:13:21 72

01/01/2022 17:23:18 01/01/2022 17:37:15 72

01/01/2022 17:53:14 02/01/2022 12:54:41 180

Not sure but I’m afraid the problem is with aggregation the sum to entire day here..

so given this example, what should your output be?

I’d like to have a chart with active CPU cores (Y-axis) and time line (X-axis).

In other words the output shoud show a CPU consumption (number of active cores) within a timeline. But if the timeline is too granural like day or even hour – the formula should consider release of the CPU and its re-consumption within this specific time frame.

Hope it’s clear. I do appreciate your help. Thanks

Having a look at your example again it seems like you do not have overlapping time-frames, is that true?

in that case you would not need that solution at all but could simply use a regular relationship?

simply link it to your Date and Time dimension and use this?!

you would need to decide though which date/time to use, start or end but if you are talking about daily or hourly analysis and your time-frames are only a couple of seconds, this should be negligible , no?

Unfortunatelly I have an overlapping on the time frames. In my case there are several jobs which starts e.g. in January and ends even few weeks after – in the mean time another jobs starts and ends with different duration overlapping each other.

So for the calculation I need to include those jobs which started some time back and are still active on the moment of time.

I cannot rely on the day frame because your DAX formula SUM up them all (again in the particual day they appear and go down several times with different CPU consumption).

I.e. job was active for few seconds conuming 80 cores, within the same minute there were another 5 jobs active which consumed only 1 core each and one job overlapped with it – so it gives 81 CPU core consumption in this particular minute – the rest just appeared and went down almost immediately – so shouldn;t be considered for the SUM.

I’m afraid I can’t use relationship – because it provides me the dates of occurence a particular job – and I need a continues time line. I used a separate date table but without relationship connected to my table. Anyway it didn’t help much – because of mentioned time granularity.

I hope I was clear. Thanks

so assuming your want to analyze the consumption for a whole day and a single/the same job was active 3 time (1AM-3AM with 5 cores, 10AM-1PM with 3 cores and 8PM-10PM with 7 cores)

what output would you expect for that single day and that single job? Considering it must be a scalar value

While those three jobs do not overlap each other the highest peak should be taken – so 7 cores (max consumption) for a particual time frame (here – a day).

If there are any other jobs within the same time frame (they’re overlapping) – their consumption should be included (summed up).

so get the MAX() for each job and sum those up?

well, not really..

Gerhard, imagine you have a log of jobs (hondred thousends of records). Some of them work in parallel (overlapping) consuming CPUs same time, some appears and goes down after few seconds.

I need to see on the chart what’s the overall CPU consuption generated by the jobs on the time line.

For those which work in parallel even for a few seconds you need to sum the CPU consumption, and for those working singular you would need to just catch them up and reflect their consumption on the time line.

I think it’s a typical case to predict whether the overl CPU consumption hit the limit on the server on we still have some capacity there.

Hope it helps

at some point you still must be able to distinguish the two records of the same job running at the same time

but after understanding your requirement, I do not know whether this is the right pattern for you. Why cant you just use a simple BETWEEN join and put your seconds on the axis?

for that second SUM everything that started before and ended after that second