I also contributed the connector described in this post to the official delta.io Connectors page and repo (link). You will find the most recent updates in my personal repo which are then merged to the official repo once it has been tested thoroughly!

Working with analytical data platforms and big data on a daily basis, I was quite happy when Microsoft finally announced a connector for Parquet files back in November 2020. The Parquet file format is developed by the Apache foundation as an open-source project and has become a fundamental part of most data lake systems nowadays.

“Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model or programming language.”

However, Parquet is just a file format and does not really support you when it comes to data management. Common data manipulation operations (DML) like updates and deletes still need to be handled manually by the data pipeline. This was one of the reasons why Delta Lake (delta.io) was developed besides a lot of other features like ACID transactions, proper meta data handling and a lot more. If you are interested in the details, please follow the link above.

So what is a Delta Lake table and how is it related to Parquet? Basically a Delta Lake table is a folder in your Data Lake (or wherever you store your data) and consists of two parts:

- Delta log files (in the sub-folder _delta_log)

- Data files (Parquet files in the root folder or sub-folders if partitioning is used)

The Delta log persists all transactions that modified the data or meta data in the table. For example, if you execute an INSERT statement, a new transaction is created in the Delta log and a new file is added to the data files which is referenced by the Delta log. If a DELETE statement is executed, a particular set of data files is (logically) removed from the Delta log but the data file still resides in the folder for a certain time. So we cannot just simply read all Parquet files in the root folder but need to process the Delta log first so we know which Parquet files are valid for the latest state of the table.

These logs are usually stored as JSON files (actually JSONL files to be more precise). After 10 transactions, a so-called checkpoint-file is created which is in Parquet format and stores all transactions up to that point in time. The relevant logs for the final table are then the combination of the last checkpoint-file and the JSON files that were created afterwards. If you are interested in all the details on how the Delta Log works, here is the full Delta Log protocol specification.

From those logs we get the information which Parquet files in the main folder must be processed to obtain the final table. The content of those Parquet files can then simply be combined and loaded into PowerBI.

I encapsulated all this logic into a custom Power Query function which takes the folder listing of the Delta table folder as input and returns the content of the Delta table. The folder listing can either come from an Azure Data Lake Store, a local folder, or an Azure Blob Storage. The mandatory fields/columns are [Content], [Name] and [Folder Path]. There is also an optional parameter which allows you the specify further options for reading the Delta table like the Version if you want to use time-travel. However, this is still experimental and if you want to get the latest state of the table, you can simply omit it.

The most current M-code for the function can be found in my Github repository for PowerBI: fn_ReadDeltaTable.pq and will also be constantly updated there if I find any improvement.

The repository also contains an PowerBI desktop file (.pbix) where you can see the single steps that make up for the final function.

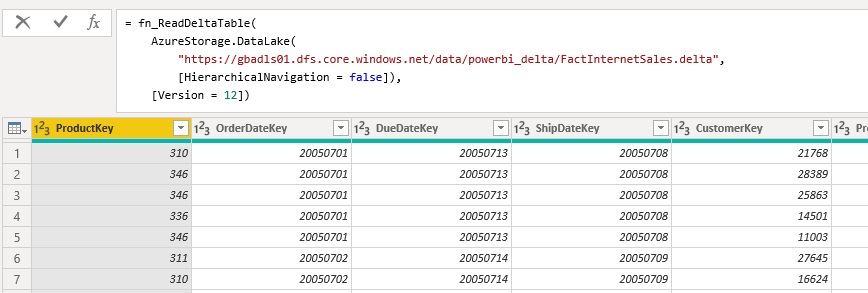

Once you have added the function to your PowerBI / Power Query environment you can call it like this:

= fn_ReadDeltaTable(

AzureStorage.DataLake(

"https://myadls.dfs.core.windows.net/public/data/MyDeltaTable.delta",

[HierarchicalNavigation = false]),

[Version = 12])

I would further recommend to nest your queries and separate the access to the storage (e.g. Azure Data Lake Store) and the reading of the table (execution of the function). If you are reading for an ADLS, it is mandatory to also specify [HierarchicalNavigation = false] ! If you are reading from a blob storage, the standard folder listing is slightly different and needs to be changed.

Right now the connector/function is still experimental and performance is not yet optimal. But I hope to get this fixed in the near future to have a native way to read and finally visualize Delta lake tables in PowerBI.

After some thorough testing the connector/function finally reached a state where it can be used without any major blocking issues, however there are still some known limitations:

- Partitioned tables

currently columns used for partitioning will always have the value NULLFIXED!values for partitioning columns are not stored as part of the parquet file but need to be derived from the folder pathFIXED!

- Performance

is currently not great but this is mainly related to the Parquet connector as it seems- very much depends on your data – please test on your own!

- Time Travel

currently only supports “VERSION AS OF”need to add “TIMESTAMP AS OF”

- Predicate Pushdown / Partition Elimination

currently not supported – it always reads the whole tableFIXED!

Any feedback is welcome!

Special thanks also goes to Imke Feldmann (@TheBIccountant, blog) and Chris Webb (@cwebb_bi, blog) who helped me writing and tuning the PQ function!

Downloads: fn_ReadDeltaTable.pq (M-code)