Defining data types and knowing the schema of your data has always been a crucial factor for performant data platforms, especially when it comes to string data types, which can potentially consume a lot of space and memory. For lakehouses in general (not only Fabric Lakehouses), there is usually only one data type for text data: a generic STRING of arbitrary length. In Apache Spark, this is StringType(). While this applies to Spark dataframes, it is not entirely true for Spark tables. Here is what the docs say:

- String type

- StringType: Represents character string values.

- VarcharType(length): A variant of StringType which has a length limitation. Data writing will fail if the input string exceeds the length limitation. Note: this type can only be used in table schema, not functions/operators.

- CharType(length): A variant of VarcharType(length) which is fixed length. Reading column of type CharType(n) always returns string values of length n. Char type column comparison will pad the short one to the longer length.
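The semantics quoted above can be mimicked in a few lines of plain Python to make them concrete. This is only a simplified sketch of the documented behavior, not how Spark implements it; `check_varchar` and `pad_char` are hypothetical helper names, and length is assumed to be counted in characters.

```python
def check_varchar(value: str, length: int) -> str:
    """Mimic VarcharType(length): reject values longer than the limit."""
    if len(value) > length:
        raise ValueError(
            f"input string of length {len(value)} exceeds varchar({length})"
        )
    return value


def pad_char(value: str, length: int) -> str:
    """Mimic CharType(length): a read always returns exactly `length` characters."""
    # Reuse the length check, then right-pad with spaces to the fixed length.
    return check_varchar(value, length).ljust(length)


print(repr(check_varchar("abcdefghij", 10)))  # fits exactly into 10 characters
print(repr(pad_char("abc", 5)))               # padded with two trailing spaces
```

Passing an 11-character value to `check_varchar(..., 10)` raises an error, which mirrors the "data writing will fail" part of the docs.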

As stated, there are multiple ways to define a text column, and while you cannot use VarcharType/CharType in your Spark dataframe, you can still use them to define the output tables of your lakehouse. Inspired by this blog post by Kyle Hale, I ran some similar tests on Microsoft Fabric.

In general, I can say that the results are basically the same as the ones Kyle got. This was expected, as in both cases Spark and Delta Lake were used to run the tests. However, it was also interesting for me to see what impact this data type change had on other components of the Fabric ecosystem, particularly the SQL Endpoint associated with my Lakehouse. Here is the very simple Spark code I used for testing. I am writing a dataframe with an IntegerType() column and a StringType() column to a new table, which creates that table in the lakehouse:

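from pyspark.sql import types as T

# one integer column and one unbounded string column
schema = T.StructType([
    T.StructField("charLength", T.IntegerType()),
    T.StructField("value_max_len_10", T.StringType())
])

# a single row whose string value is exactly 10 characters long
# (`spark` is the session that is predefined in the notebook)
df = spark.createDataFrame([(10, "abcdefghij")], schema)

# the table does not exist yet, so this write creates it in the lakehouse
df.write.mode("append").saveAsTable("datatypes_default")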

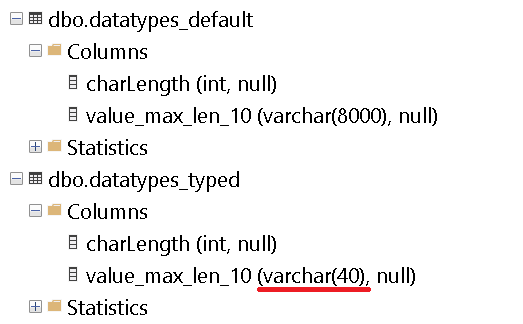

If you have a look at the table created in your Lakehouse via the SQL Endpoint, using e.g. SQL Server Management Studio or Azure Data Studio, you will see that the text column has the data type VARCHAR(8000). For most columns, this is vastly oversized and can have a huge impact on performance, as the size of the columns is used by the optimizer to build an efficient execution plan.

Let's see what happens if we write the very same dataframe into an already existing table that was created using VARCHAR(10) instead:

%%sql
CREATE TABLE datatypes_typed (
    charLength INT,
    value_max_len_10 VARCHAR(10)
)

# writing the same dataframe as before but now to the pre-defined table
df.write.mode("append").saveAsTable("datatypes_typed")
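If many text columns need an explicit length, the CREATE TABLE statement can be generated instead of typed by hand. The sketch below is plain Python; `build_create_table_ddl` is a hypothetical helper (not part of Spark or Fabric), and the lengths passed in are assumed to be known upfront from your data model.

```python
def build_create_table_ddl(table: str, columns: dict) -> str:
    """Build a Spark SQL CREATE TABLE statement as a string.

    `columns` maps column name -> Spark SQL type name; an int value is
    shorthand for VARCHAR(<int>).
    """
    defs = []
    for name, col_type in columns.items():
        if isinstance(col_type, int):
            col_type = f"VARCHAR({col_type})"
        defs.append(f"    {name} {col_type}")
    return f"CREATE TABLE {table} (\n" + ",\n".join(defs) + "\n)"


ddl = build_create_table_ddl(
    "datatypes_typed",
    {"charLength": "INT", "value_max_len_10": 10},
)
print(ddl)
# In a notebook with an active Spark session this could then be run
# with spark.sql(ddl) before appending the dataframe.
```

Generating the statement keeps the column lengths in one place, so the table definition and any documentation of the expected lengths cannot drift apart.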

Checking the SQL Endpoint again, you will see that the data type of the column in the new table is now VARCHAR(40), quite a big improvement over VARCHAR(8000)!

I have to admit that I do not yet know why it is VARCHAR(40) and not VARCHAR(10), but for the time being I am already happy with those results. I also tried other lengths, but the SQL Endpoint always seems to show 4 times the length defined in the lakehouse table. Once I find out more about this, I will update the blog post!
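One possible explanation, also raised in the comments below, is that the length on the SQL Endpoint is counted in bytes rather than characters: UTF-8 needs between 1 and 4 bytes per character, so 10 characters can take up to 40 bytes. The following plain Python sketch only illustrates that arithmetic and does not confirm what Fabric does internally; `max_utf8_bytes` is a hypothetical helper name.

```python
def max_utf8_bytes(char_length: int) -> int:
    """Upper bound of bytes needed to store `char_length` characters in UTF-8."""
    return 4 * char_length  # UTF-8 uses 1 to 4 bytes per code point


# four strings of 10 characters each, using 1-, 2-, 3- and 4-byte characters
samples = [
    "abcdefghij",        # ASCII letters, 1 byte each
    "\u00e4" * 10,       # a-umlaut, 2 bytes each
    "\u20ac" * 10,       # euro sign, 3 bytes each
    "\U0001F600" * 10,   # emoji, 4 bytes each
]

for s in samples:
    # number of characters vs. number of bytes once encoded as UTF-8
    print(len(s), len(s.encode("utf-8")))

print(max_utf8_bytes(10))
```

Every sample has 10 characters, but the encoded size grows from 10 to 40 bytes, which matches the factor of 4 seen on the SQL Endpoint.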

Looks like they reserve for UTF-32?

Yes, that's also what I thought: they are displaying DATALENGTH instead of the (string) LENGTH.

Technically, I think it should actually show as NVARCHAR(10).

I have used Excel for years for storing small tables up to 200,000 rows or more. It seems to handle strings more elegantly.

could you please run a test and check how your Excel behaves with 200M rows? 😉

If you want to store 10 characters in UTF-8, in SQL Server, you have to specify varchar(40). I’ll bet they’re allowing for that.

https://blog.greglow.com/2019/07/25/sql-think-that-varchar-characters-if-so-think-again/

Thanks Greg, that blog post explains exactly why this is happening!
