PowerBI.CICD repository

In the past I have been working on a lot of different Power BI projects and it has always been (and still is) a pain when it comes to the deployment of changes across multiple tiers(e.g. Dev/Test/Prod). The main problem here being that a file generated in Power BI desktop (</mark>.pbix<mark style="background-color:#ffffff" class="has-inline-color has-black-color">) is basically a binary file and the metadata of the actual data model (BIM) cannot be easily extracted or derived. This causes a lot of problems upstream when you want to automate the deployment using CI/CD pipelines. Here are some common approaches to tackle these issues:

- Use of Power BI deployment pipelines

The most native solution, however quite inflexible when it comes to custom and conditional deployments to multiple stages - Creation a Power BI Template (

.pbit) in addition to your.pbix<mark style="background-color:#ffffff" class="has-inline-color has-black-color">file and check in both

This works because the</mark></mark><mark style="background-color:#ffffff" class="has-inline-color">.pbit</mark><mark style="background-color:#ffffff" class="has-inline-color has-black-color"><mark style="background-color:#ffffff" class="has-inline-color has-black-color">file basically contains the BIM file but its creation is also a manual step - Extraction of the BIM file while PBI desktop is still running (e.g. using Tabular Editor)

With the support of external tools this is quite easy, but is still a manual step and requires a 3rd party tool - Development outside of PBI desktop (e.g. using Tabular Editor)

Probably the best solution but unfortunately not really suited for business users and for the data model only but not for the Power Queries

As you can see, there are indeed some options, but none of them is really ideal, especially not for a regular business user (not talking about IT pros). So I made up my mind and came up with the following list of things that I would want to see for proper CI/CD with Power BI files:

- Users should be able to work with their tool of choice (usually PBI desktop, optional with Tabular Editor or any other 3rd party tool)

- Automatically extracting the metadata whenever the data model changes

- Persisting the metadata (BIM) in git to allow easy tracking of changes

- Using the persisted BIM file for further automation (CD)

Solution

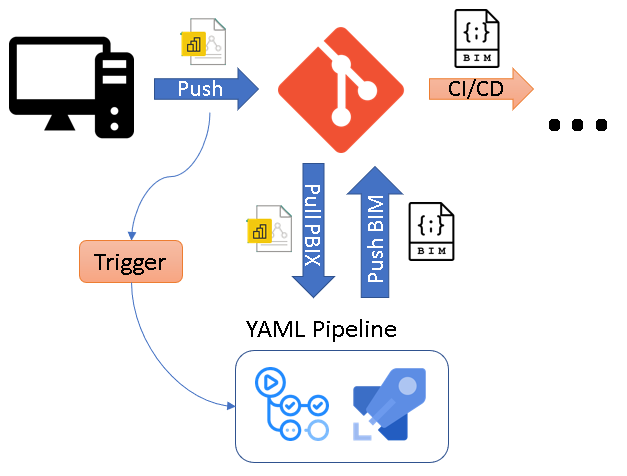

The core idea of the solution is to use CI/CD pipelines that automatically extracts the metadata of a .pbix file as soon as it is pushed to the Git repository. To do this, the .pbix file is automatically uploaded to a Power BI Premium workspace using the Power BI REST API and the free version of Tabular Editor 2 then extracts the BIM file via the XMLA endpoint and push it back to the repository.

I packaged this logic into ready-to-use YAML pipelines for Github Actions and Azure DevOps Pipelines being the two most common choices to use with Power BI. You can just copy the YAML files from the PowerBI.CICD repository to your own repo. Then simply provide the necessary information to authentication against the Power BI service and that’s it. As soon as everything is set up correctly. the pipeline will automatically create a .database.json for every PBIX file that you upload (assuming it contains a data model) and track it in your git repository!

All further details can be found directly in the repository which is also updated frequently!

Almost there Gerhard and the best approach I’ve seen. I really would like to find a way to only save the . PBIT file in the repository (without exporting or using SAVR as template).

if there would be a way to automatically generate the PBIT from the PBIX file without opening it – yes I fully agree that PBIT would be the way to go

unfortunately this is currently not possible without any manual interaction :/

Agreed, If we can upload .pbit in an automated fashion that would be golden. Processing huge .pbix file with data can be time consuming

well, as long as the creation of the .pbit is still a manual process, I dont think it actually solves the problem

the approach with .pbix files is fine as long as you only check in empty datamodels. I usually use a parameter to control whether data is loaded or not which can then be changed again once the dataset is uploaded

It looks like MSFT’s official method for automating PBIX to PBIX is to leverage Power Automate. Any thoughts on this approach?

https://powerusers.microsoft.com/t5/Power-Automate-Cookbook/Automate-the-process-of-exporting-Power-BI-files-pbix-to/td-p/489910

Hi Josh,

there are a lot of different ways how this can be done. The idea of this blog post was/is to make it as end-user friendly as possible so also non IT-professionals can use it.

This like RPA as shown in the video or mouse-macros have been here for ages and obviously you can also leverage them for a task like this.

The main disadvantage is that they require some time and block the user from doing anything else while the RPA/macro is running while the approach I showed is seamless.

anyway, you can use whatever suits your needs best!

-gerhard

I mean “save as” and not “SAVR”.

Last time I tried, the model.bim file was extracted as a one-line JSON file. This needs to be formatted before saving so that we easily can compare versions. I haven’t checked if you already have addressed this yet.

it just provides the standard output of Tabular Editor which is a well-formatted JSON file which works find with most comparison tools (e.g. for the review of a PR)

There is this excellent free tool – can create pbit using command line arguments:

https://pbi.tools/

See this:

https://youtu.be/B66gvRC-03Q

basically the same tool (https://toolkit.action-bi.com/) as mentioned in another comment already – I do not know which homepage is the most recent one

according to the coce, the tool uses PBI Desktop binaries outside of the context of Power BI which is not officially supported by Microsoft

even though the tool is working fine, I cannot recommend it for that reason

https://pbi.tools/

Pingback: Automating Power BI Data Model Metadata Extraction – Curated SQL

Hey there! Such a timely post. Is there anyway to extract the .BIM directly from the .PBIX file (perhaps using the Tabular Editor CLI)? I would like to avoid the need to first deploy to the premium workspace.

no supported one, unfortunately

Tabular Editor relies on the PBIX file being loaded/opened to connect to it via XMLA which is always manual

To do it automatically the approach is the only supported way that I am aware of (hence the blog post)

Hi, this is also an interesting project: https://toolkit.action-bi.com/

yes, definitely!

However, for the extraction of the BIM metadata from a pbix-file the tools (CLI versions) uses an approach not supported by Microsoft, hence I personally would not recommend it

HI,

First of all, thank you very much for sharing such a great post with community!

I am stuck in part Extracting metadata. I struggling with creation of connection string. I am using SP certificate based. Any idea how to deal with this?

to be honest, I have never used SPs with certificates

The only thing I found about this topic was this SO thread:

https://stackoverflow.com/questions/73426399/xmla-endpoint-certificate-based-sp-for-power-bi-ci-cd-with-tabular-editor

it basically says: “When using a certificate you’ll have to fetch the AccessToken first, and then pass the AccessToken as the password in the conenction string, which should look like this:”

hope that helps,

-gerhard

Unfortunately still can’t export metadata. What should be changed in your example in case using SP (mean on the part when start Tabular editor)? Just wondering am I missing something?

Here is how my parms look like:

parms “Provider=MSOLAP;Data Source=source=powerbi://api.powerbi.com/v1.0/myorg/workspacename;Initial catalog=test123-20220822T085802;Password=@token;” “test123-20220822T085802” -SCRIPT “D:\a\1\s\ApplySerializeOptionsAnnotation.csx” -FOLDER “D:\a\1\s\test123” “test123”

so as it seems the problem is not really related to my code but more to Tabular Editor and how authentication against Power BI works in general – so it is probably better to ask there.

once you have a solution that works e.g. in your local PowerShell environment, I am very happy to help you integrating it into a CI/CD pipeline

-gerhard

HI Gerhard,

Yes, the point was to generate access token. The connection string will look like bellow. All the other parts are same as in you example. “powerbi://api.powerbi.com/v1.0/myorg/$($workspace.Name);Initial catalog=$($dataset.Name);Password=$token;Persist Security Info=True;Impersonation Level=Impersonate”

I was using Get-PowerBIAccessToken to generate token. Be careful, it returns token and authorization type (only token is needed).

If you like to update you example, feel free to contact me, I can send you how I did this with certificate.

great that it finally works!

to also help others that deal with this project, it would be good if you post the code-snipped that you used to generate the token from the certificate

-gerhard

Any way we can generate the pbix file after extracting the metadata.. Assuming two different individuals change the pbix file with pull requests. We can use git merge to merge the changes. Any way we can generate the pbix file from the merged changes into main

good question, actually I have never tried it

technically I think, if you add a new measure to a folder and load that folder with Tabular Editor, it should pick up the new measure

The binary format in the pbix file is analogous to the equivalent components in an Excel file, so the Mashup and other text components are quite readily extractable. I have spent much time working through the binary format of the xlsx file in order to do source-control on Excel files and the data model can be loaded to a MSAS Sql server outside of Excel for programmatic manipulation and reinstated to the xlsx archive. Similarly the mashup can be unpacked for source-control.

A similar effort should be possible here. If the starting point is source-control on the text and report components for diffs etc, that is much easier.

I know that Microsoft’s ALM tools are improving dramatically, but there might still be a need.

I’d be happy to collaborate on an open source repository and cloud app to facilitate source control on PBI files if there is interest.

Hi Jon,

yes, the PBIX is just a .zip file and technically everything can be reconstructed from there.

However, the dataset of a .pbix file is a binary and cannot be easy extracted. you need to load it somewhere first (PBI Desktop, PBI Service, AAS, …) to get to the metadata. I decided to use the PBI service for this as

– it has the least/no dependencies to other external tools and can easily be done on a build agent without additional software

– it can be easily automized/scripted

– all its components are supported (which is not the case for any solution that unpacks the .pbix file!)

for the other components like reports I think that https://pbi.tools/ supports this

my code is open source and can be found in the linked repository – if you have some good ideas, feel free to create a PR (or fork the repo and do your own stuff)

-gerhard