Recently I faced the requirement to give specific users of my Analysis Services Multidimensional cube the opportunity to process the cube whenever they want. There are several ways to achieve this:

– start a SQL Agent that processes the cube

– create some kind of WebService that does the processing

– create some custom .NET application that does the processing

– run some script (PowerShell, Command Line, ascmd.exe, …)

NOTE:

The post describes a solution which requires SSAS Server Admin rights. If you are interested in a solution which triggers an asynchronous processing without requiring SSAS Server Admin Rights please scroll down to the very bottom and download VS_Solution.zip

It basically runs the same script but in a separate thread in the context of the service account so the calling user does not need to have any specific rights on the server.

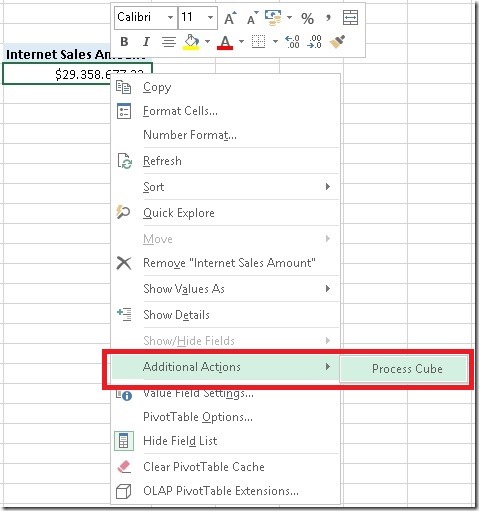

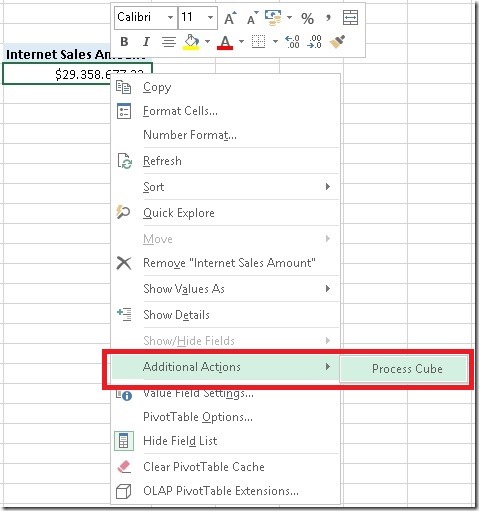

From a usability point-of-view none of the above is really convenient as all options involve some kind of external process or application. In this post I will show how to process a cube using cube actions which can be triggered from Excel Pivot Tables natively. So this is what we want to achieve:

This requires several steps:

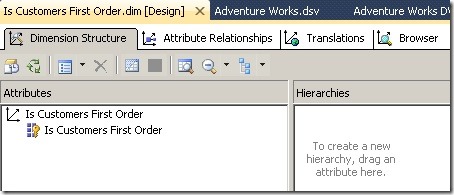

1) create custom .Net assembly

2) add that custom .Net assembly to your Analysis Services database

3) add an action to your cube that calls the assembly

Creating a Custom .Net assembly:

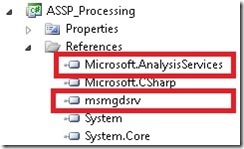

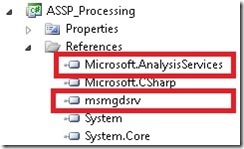

First of all we need to create a new “Visual C#” project of type “Class Library”. To work with Analysis Services we need to add two references:

“Microsoft.AnalysisServices” refers to Microsoft.AnalysisServices.dll which can be found in the shared features folder of your installation (default is c:Program FilesMicrosoft SQL Server110SDKAssemblies)

“msmgdsrv” refers to msmgdsrv.dll which can found be in the OLAPbin-folder of your SSAS instance (default is c:Program FilesMicrosoft SQL ServerMSAS11.MSSQLSERVEROLAPbin)

Once these references are added, we can start to create our processing code:

using System;

using AdomdServer = Microsoft.AnalysisServices.AdomdServer;

using Microsoft.AnalysisServices;

using System.Data;

namespace ASSP_Processing

{

public class Processing

{

public const string LoggingPrefix = “ASSP.ProcessObject: “;

public enum ResultHandling

{

Datatable = 0,

Exception = 1,

NULL = 2

}

public static DataTable ProcessObject(string cubeName, ResultHandling resultHandling)

{

DataTable ret = new DataTable();

ret.Columns.Add(“Result”);

Server server = null;

try

{

server = new Server();

//connect to the current session…

server.Connect(“*”);

AdomdServer.Context.CheckCancelled(); //could be a bit long running, so allow user to cancel

Database db = server.Databases.GetByName(AdomdServer.Context.CurrentDatabaseName);

string objectToProcessName = “#N/A”;

ProcessableMajorObject objectToProcess = db;

AdomdServer.Context.TraceEvent(0, 0, LoggingPrefix + “Database <“ + db.Name + “> found!”);

objectToProcessName = “DB[“ + db.Name + “]”;

if (!string.IsNullOrEmpty(cubeName))

{

Cube cube = db.Cubes.GetByName(cubeName);

if (cube != null)

{

objectToProcess = cube;

AdomdServer.Context.TraceEvent(0, 0, LoggingPrefix + “Cube <“ + cubeName + “> found!”);

objectToProcessName = objectToProcessName + ” > Cube[“ + cubeName + “]”;

}

}

if (objectToProcess != null)

{

AdomdServer.Context.TraceEvent(0, 0, LoggingPrefix + “Processing Object “ + objectToProcessName + ” …”);

objectToProcess.Process(ProcessType.ProcessFull);

ret.Rows.Add(new object[] { “S U C C E S S: Object “ + objectToProcessName + ” successfully processed!” });

AdomdServer.Context.TraceEvent(0, 0, LoggingPrefix + “Finished Processing Object “ + objectToProcessName + “!”);

}

}

finally

{

try

{

if (server != null)

server.Disconnect();

}

catch { }

}

// if processing was successful a row has been added beforehand

if (ret.Rows.Count == 0)

{

ret.Rows.Add(new object[] { “F A I L U R E: Error while processing an object!” });

}

switch (resultHandling)

{

case ResultHandling.Datatable:

return ret;

case ResultHandling.Exception:

throw new Exception(Environment.NewLine + Environment.NewLine + Environment.NewLine + ret.Rows[0][0].ToString());

case ResultHandling.NULL:

return null;

}

return null;

}

public static DataTable ProcessObject(string cubeName, int resultHandling)

{

return ProcessObject(cubeName, (ResultHandling)resultHandling);

}

public static DataTable ProcessObject(string cubeName)

{

return ProcessObject(cubeName, 1);

}

}

}

There are two things here that are worth to point out. First of all we need to somehow establish a connection to the server that hosts the SSAS database in order to process an object. The first thing that comes into mind would be to create a new connection to the server and run some processing XMLA. Unfortunately this does not work here because as this would result in a deadlock. The assembly will be called as a Rowset-Action which in terms of locking is similar to a query. So if we run separate processing command within our Rowset-Action this processing command can never be committed as there is always a query running on the database. The query (=Rowset-Action) will wait until the function is finished and the function waits until the processing is committed resulting in a classical deadlock!

To avoid this we need to connect to the current users sessions using “*” as our connection string:

//connect to the current session…

server.Connect(“*”);

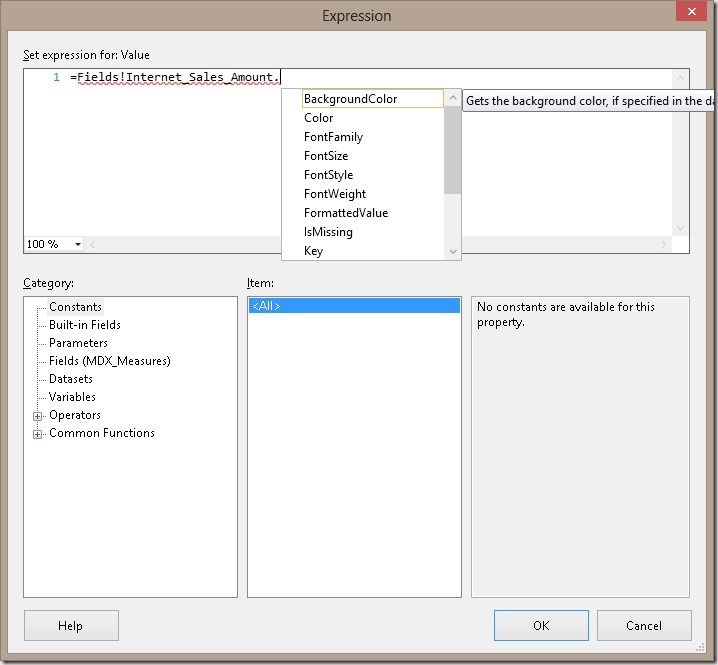

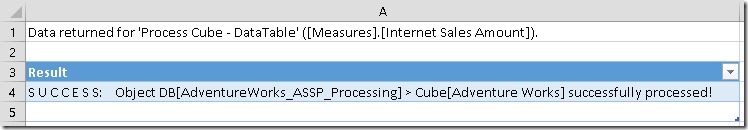

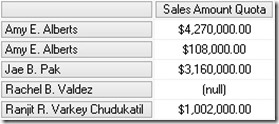

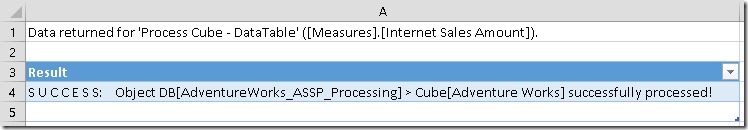

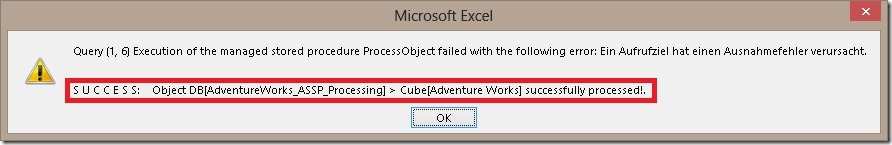

The second thing to point out is the return value of our function. In order to be used in a Rowset-action, the function has to return a DataTable object. The results would then be displayed similar to a drill through and a separate worksheet would pop up in Excel showing the result:

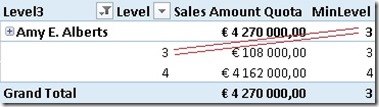

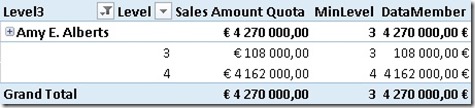

From my point-of-view this can be a bit annoying as after each processing this new worksheet pops up and you loose the focus of the pivot table. So I investigated a bit and found another way to display the output of the processing.

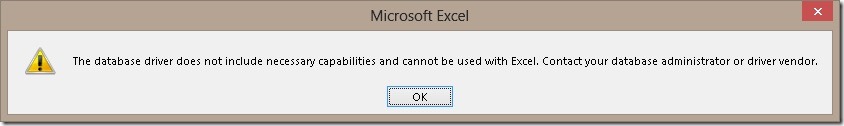

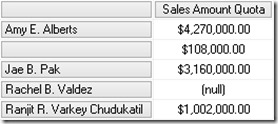

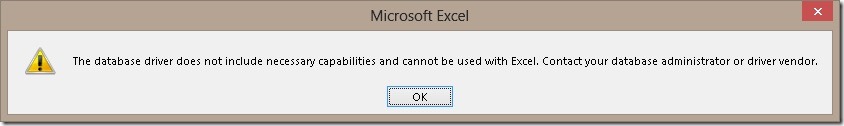

When a drill through / action throws an exception, this will also be displayed in Excel without actually displaying the result of the drill through / action in a separate worksheet. By default it may look like this message:

Well, not really much information here right?

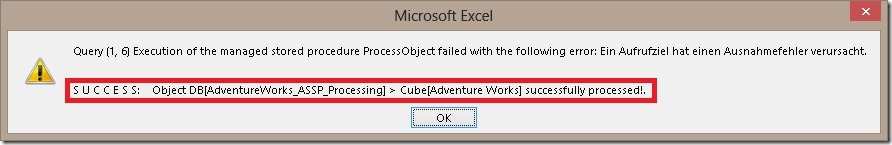

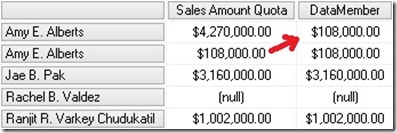

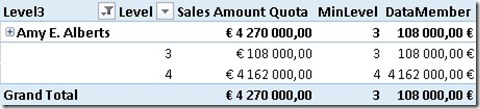

To display more information, e.g. that the processing was successful (or not) we can throw our own exception in the code after processing is done:

I added a separate parameter to control this behavior, in the code above the default would be option 3 – custom Exception.

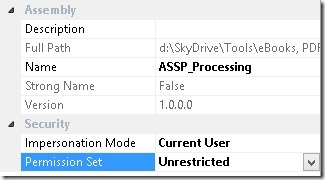

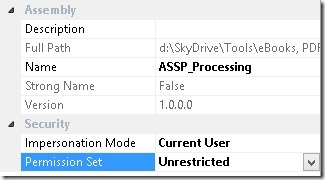

Once this project is built the DLL can be added to the SSAS database. It is necessary to grant the assembly “Unrestricted” permission set:

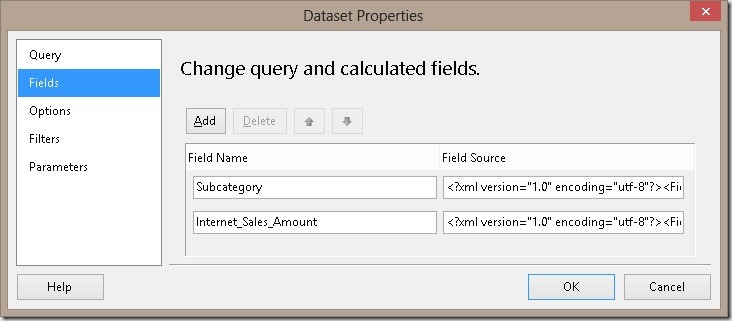

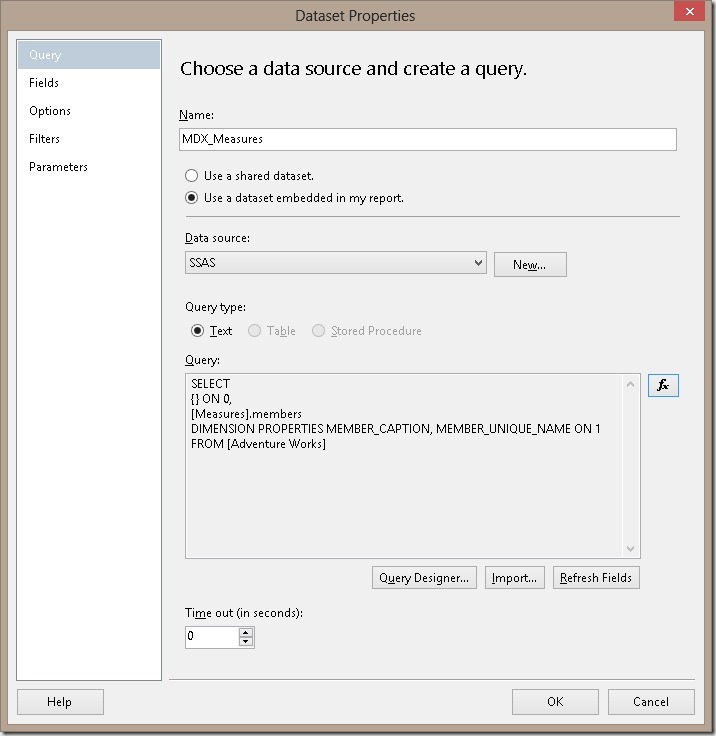

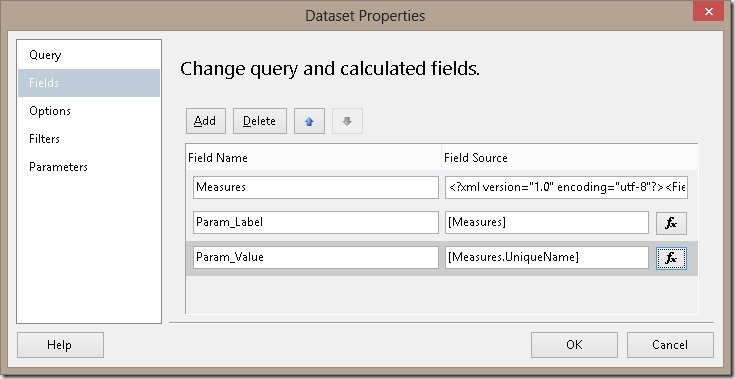

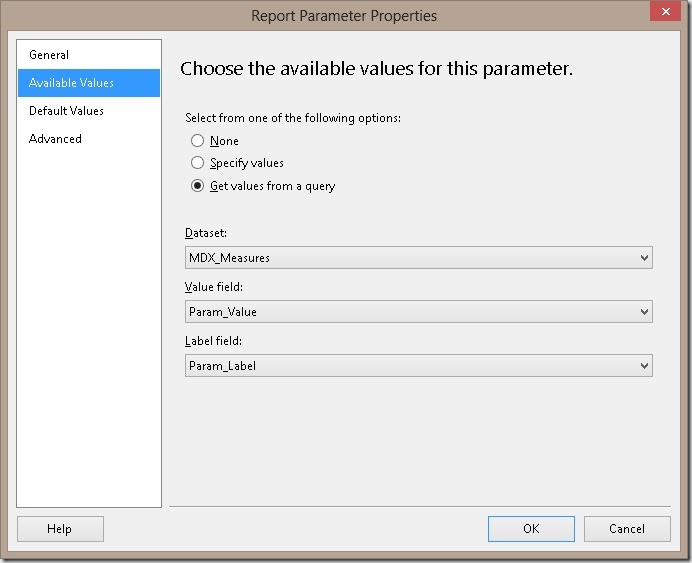

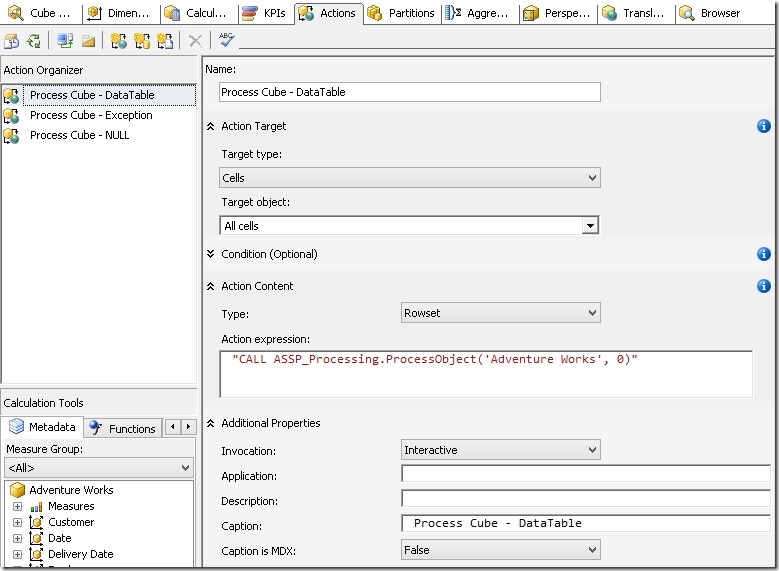

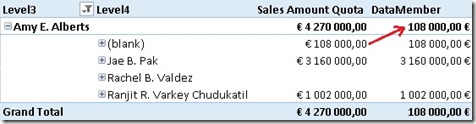

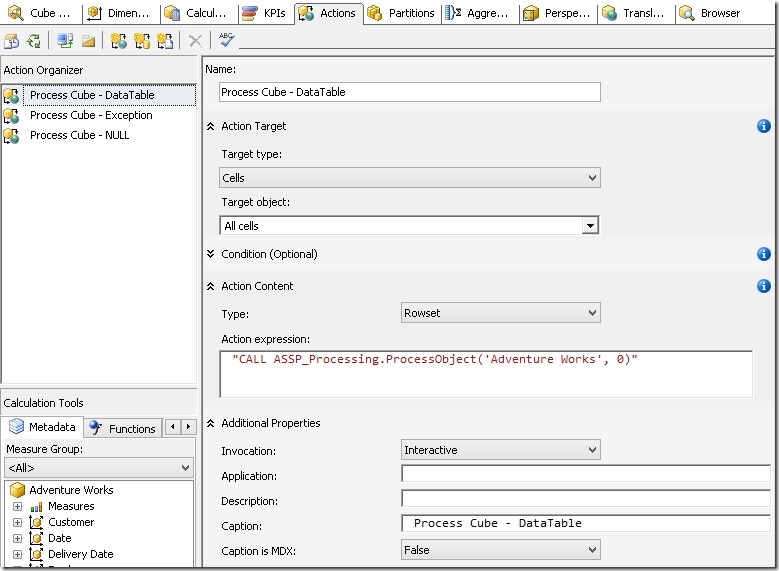

The last step would be to create the appropriate cube actions that call the assembly:

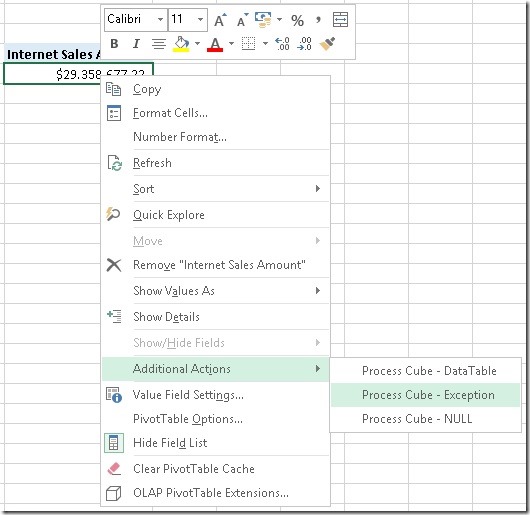

It is necessary that the action is of Type=Rowset, otherwise it would not be displayed in Excel! The Action Target is up the you, in this example it will be displayed on every cell. The Action expression is the actual call to our assembly passing the cube name as a parameter. The second parameter controls how the result is displayed and could also be omitted. In this example I added 3 separate actions, one for each result-type (as described above).

And that’s it! This simple steps allow you and your users to process any cube (or any other database object) from within your favorite client application, assuming it supports cube actions.

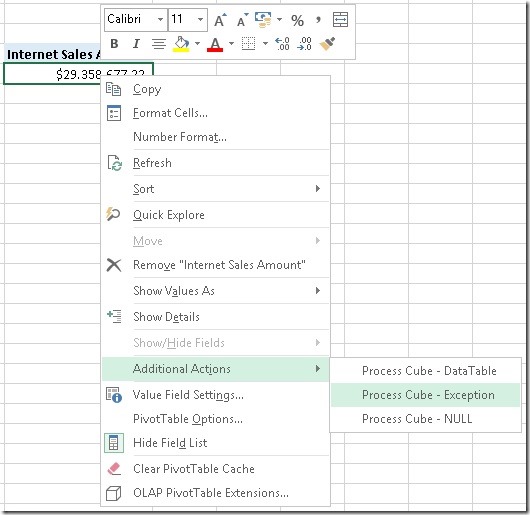

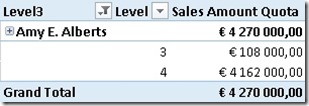

This is the final result where the three cube actions can be triggered from an Excel pivot table:

The attached zip-file includes everything that is necessary to recreate this example:

– SSAS project

– ASSP_Processing project

CubeProcessingAction.zip

Solution for Asynchronous Processing without Admin-Rights:

VS_Solution.zip