It is a very common scenario to create a SSAS Tabular Model out of an Power Pivot Model contained in an Excel workbook. Microsoft even created an wizard (or actually a separate Visual Studio project) that supports you doing this. Even further, this process is also a major part of Microsoft’s strategy to cover Personal BI, Team BI and Corporate BI within one technology being xVelocity. This all works just fine but there may also be scenarios where you need to do it the other way round – converting a Tabular model to Power Pivot. Several use-cases come into my mind but I am sure that the most important one is to making data available offline for e.g. sales people to take it with them on their every day work. And in this blog post I will show how this can be done!

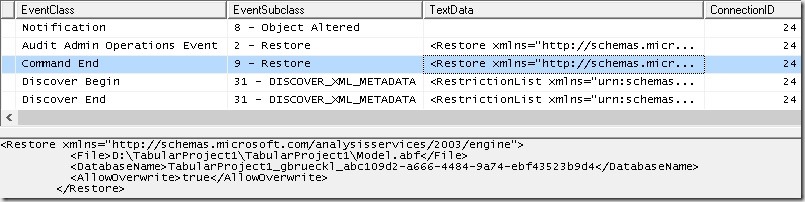

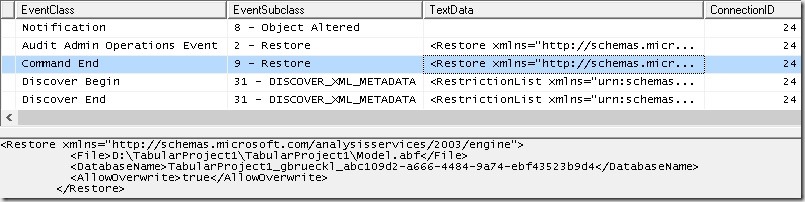

But before taking a closer look into how this can be accomplished, lets first see how the import from Power Pivot to SSAS Tabular works. To do this start SQL Server Profiler and connect to your tabular instance. Then create a new Tabular project in Visual Studio based on an existing Power Pivot workbook. At this point you will notice a lot of events happening on our SSAS Tabular server. The most important event for us is “Command End” with the EventSubclass “9 – restore”:

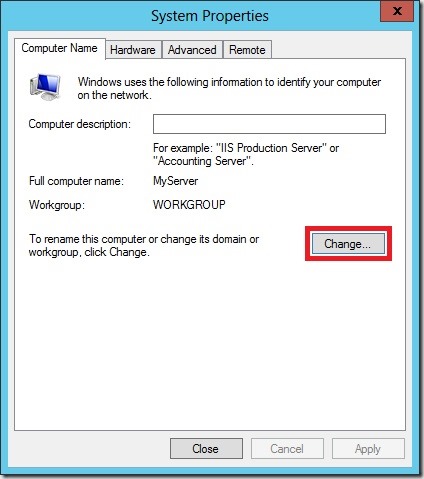

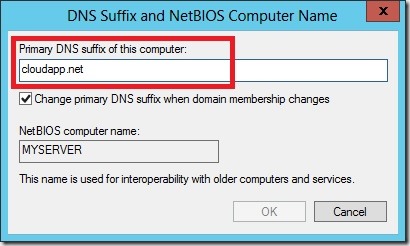

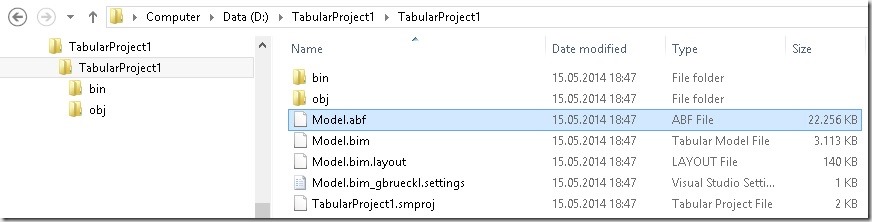

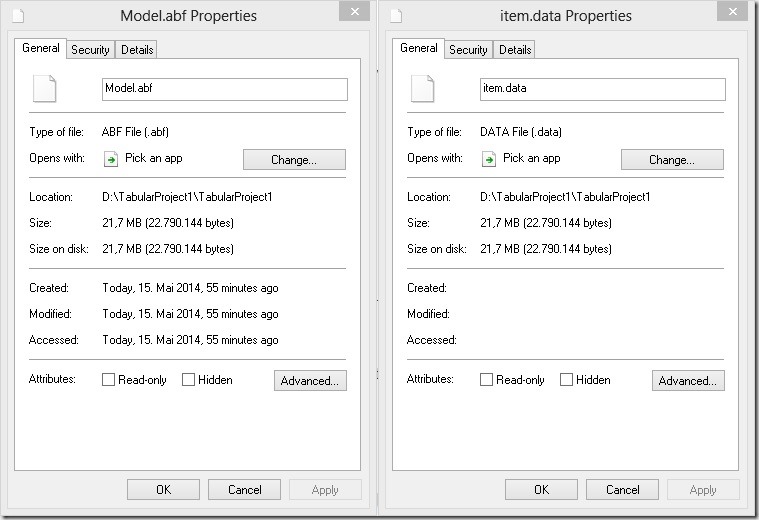

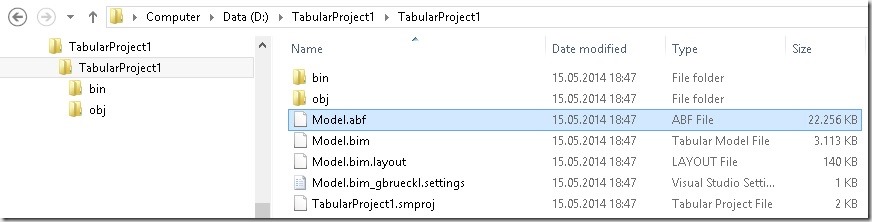

SSAS actually restores a backup from a “Model.abf” backup file which is located in our project directory that we just created:

So far so good – but where does this file come from?

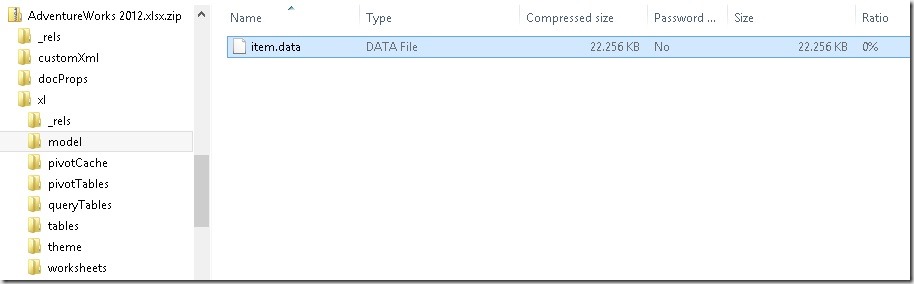

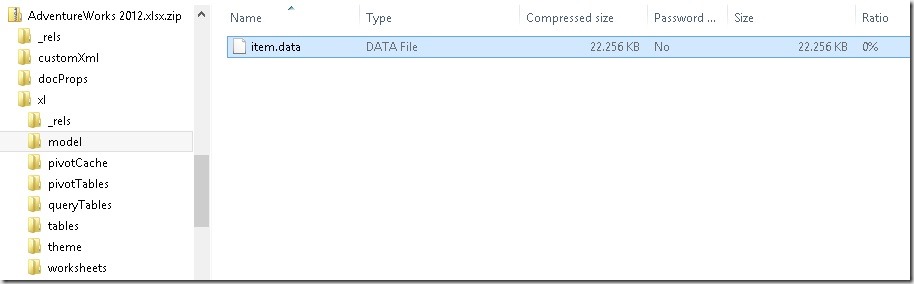

Well, the origin of the file has to be our Excel workbook that we imported. Knowing that all new office formats ending with “x” (.xlsx, .docx, …) are basically ZIP files, we can inspect our original Excel workbook by simply rename it to “.zip”. This allows us to browse the Excel file structure:

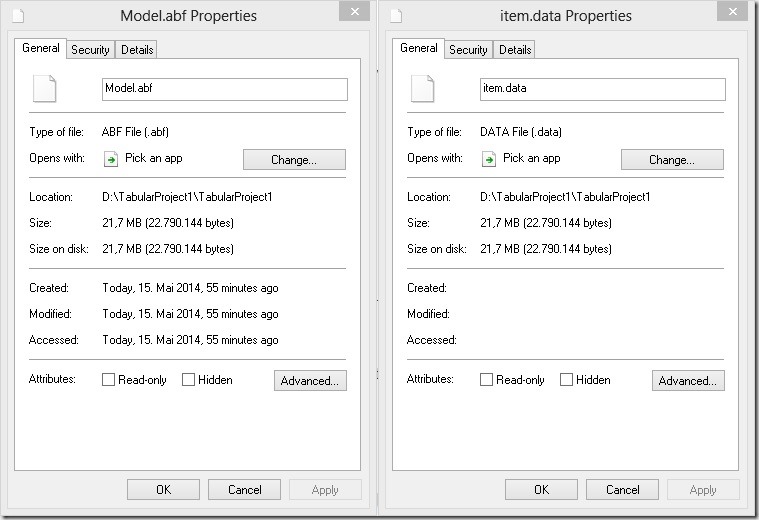

We will find a folder called “xl” which contains a sub-folder called “model”. This folder contains one item called “item.data”. If you take a closer look at the file size you may realize that both, the “Model.abf” file that we restored and the “item.data” file from our Excel workbook have the exact same size:

A Coincidence? Not really!

What happens behind the scenes when you import a Power Pivot model into SSAS Tabular is that this “item.data” file gets copied into your project directory and is renamed to “Model.abf” and then restored to the SSAS Tabular workspace instance by using an standard database restore.

Having this information probably makes you think: If it works in one direction, why wouldn’t it also work the other way round? And it does!

So here are the steps that you need to do in order to restore your SSAS Tabular backup into an Excel Power Pivot workbook:

- Create a backup of your SSAS Tabular database and rename it to “item.data”

- Create an empty Excel workbook and add a simple linked table to the Excel data model (which is actually Power Pivot).

This is necessary to tell Excel that the workbook contains a Power Pivot model which has to be loaded once the file is opened.

- Close the Excel workbook and rename it from “MyFile.xlsx” to “MyFile.xlsx.zip”

- Open the .zip-file in Windows Explorer and locate the “\xl\model\”-folder

- Replace the “item.data” file with the file that you created in step 1.

- Rename the .zip-file back to “MyFile.xlsx”

- Open the Excel Workbook

- Voilá! You can now work with the data model as with any other Power Pivot model!

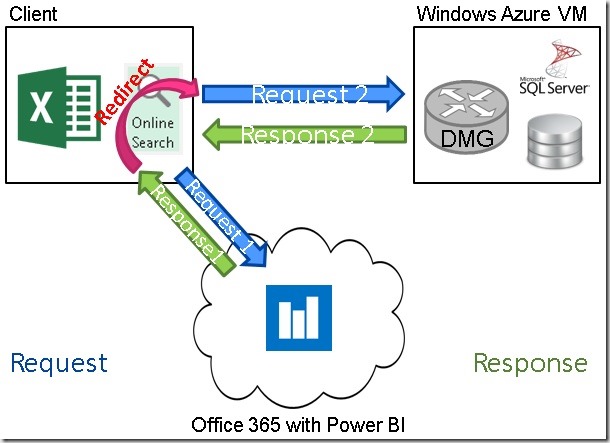

I tested this with a SSAS Tabular backup from SQL Server 2012 SP1 being restored to the streamed version of Excel from Office 365 with the latest version of Power Pivot. I assume that it also works with older versions but have not tested all combinations yet.

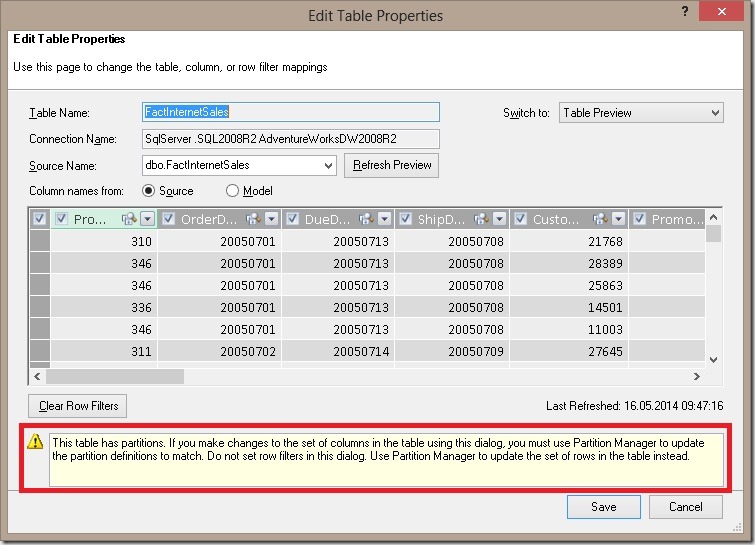

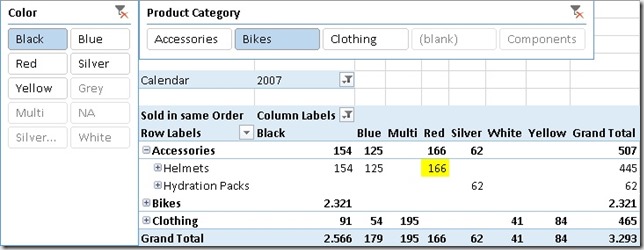

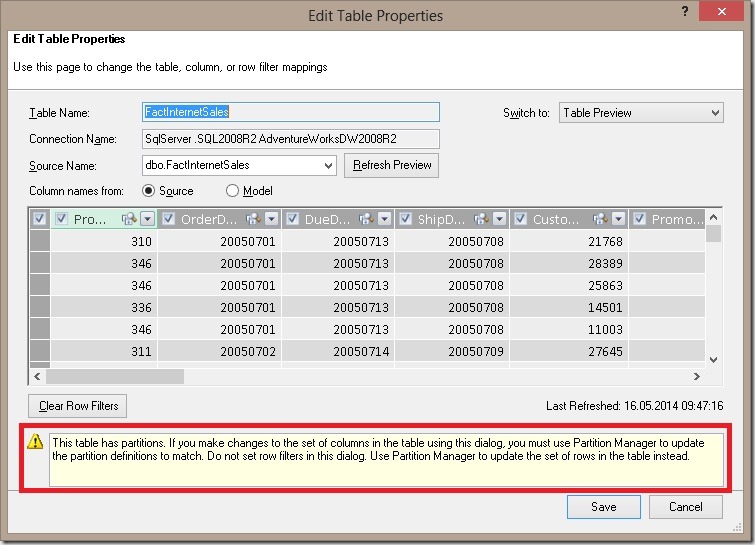

There are also some features that will not work, for example roles. If your Tabular database contains roles you will not be able to use this approach. Excel will complain that the Power Pivot model is broken. However, other Tabular features like partitions actually work with the little limitation that you cannot change them later on in the Power Pivot model or process them separately:

Another thing to note here is that only up to 3 partitions are allowed, otherwise you will get the same error as for roles. I think this is related to the limitation of 3 partitions for SQL Server Analysis Services Standard Edition as Chris Webb described here.

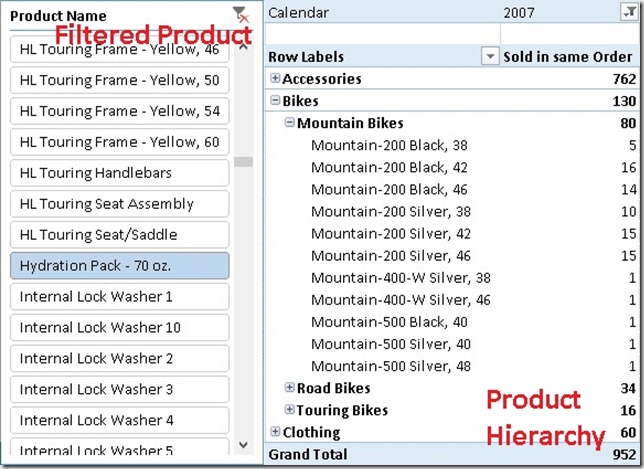

Besides these obvious features there are also some other cool things that you can do in Tabular which are not possible in Power Pivot. Most (or actually all) of them are accessible only by using BIDS Helper – a great THANK YOU to the developers of BIDS Helper at this point!

BIDS Helper enables you to add classical multidimensional features also to Tabular models which is not possible using standard Visual Studio only. Those include:

- DisplayFolders

- Translations (metadata only)

- Actions

- …

I tested it for DisplayFolders and Actions and both are working also in Power Pivot after the backup was restored and I further assume that all the other things will also work just fine.

Simply keep in mind that Power Pivot is basically a fully featured Analysis Services instance running within Excel!

For my (and your) convenience I also created a little PowerShell script that does all the work:

- # Load the assembly with the ZipFile class

- [System.Reflection.Assembly]::LoadWithPartialName("System.IO.Compression.FileSystem") | Out-Null

- # Load the assembly to access Analysis Services

- [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices") | Out-Null

- # Also install "Analysis Services PowerShell" according to http://technet.microsoft.com/en-us/library/hh213141.aspx

-

- # INPUT-Variables, change these to match your environment

- $rootFolder = "D:\Test_PowerShell\"

- $emptyExcelFile = $rootFolder + "EmptyExcel.xlsx"

- $ssasServerName = "localhost\TAB2012"

- $ssasDatabaseName = "AdventureWorks"

-

- # internal variables

- $newExcelFile = $rootFolder + $ssasDatabaseName + ".xlsx"

- $newExcelFileZip = $newExcelFile + ".zip"

- $unzipFolder = $rootFolder + "TEMP_ExcelUnzipped"

- $backupFile = $rootFolder + $ssasDatabaseName + ".abf"

- $itemDestination = $unzipFolder + "\xl\model\item.data"

-

- # Copy the empty Excel file and rename it to ".zip"

- Copy-Item -Path $emptyExcelFile -Destination $newExcelFileZip

-

- # Unzip the file using the ZipFile class

- [System.IO.Compression.ZipFile]::ExtractToDirectory($newExcelFileZip, $unzipFolder)

-

- # Create a backup of the SSAS Tabular database

- Backup-ASDatabase -Server $ssasServerName -Name $ssasDatabaseName -BackupFile $backupFile -AllowOverwrite -ApplyCompression

-

- # Copy the backup-file to our extracted Excel folder structure

- Copy-Item -Path $backupFile -Destination $itemDestination -Force

-

- # Check if the target file exists and delete it

- if (Test-Path -Path $newExcelFile) { Remove-Item -Path $newExcelFile }

-

- # Zip the folder-structure again using the ZipFile class and rename it to ".xlsx"

- [System.IO.Compression.ZipFile]::CreateFromDirectory($unzipFolder, $newExcelFile)

-

- # Cleanup the unecessary files

- Remove-Item -Path $unzipFolder -Recurse

- Remove-Item -Path $backupFile

- Remove-Item -Path $newExcelFileZip

The last thing to mention here is that I don’t know if this is officially supported in any way by Microsoft – actually I am pretty sure it is not – so watch out what you are doing and don’t complain if something is not working as expected.