I just published a new version of the Dynamic ABC Analysis at www.daxpatterns.com. The article can be found here.

An ABC analysis is a very common requirement for business users. It classifies, for example, items, products, or customers into groups based on their sales and on how much impact they had on the cumulated overall sales. This is done in several steps:

1) Order products by their sales in descending order

2) Cumulate the sales, beginning with the best-selling product, up to the current product

3) Calculate the percentage of the cumulated sales vs. total sales

4) Assign a class according to the cumulated percentage
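The four steps can be sketched in plain Python, outside of DAX, to make the logic concrete. This is a minimal sketch: the product names and sales figures are hypothetical, and the class boundaries anticipate the classification table introduced further down (a product belongs to a class if lower < share <= upper).

```python
# Hypothetical sales per product (illustration only, not Adventure Works data).
SALES = {"P1": 500.0, "P2": 250.0, "P3": 150.0, "P4": 60.0, "P5": 40.0}

# (class, lower boundary, upper boundary); lower is exclusive, upper inclusive.
CLASSES = [("A", 0.0, 0.7), ("B", 0.7, 0.9), ("C", 0.9, 1.0)]


def abc_classify(sales: dict[str, float]) -> dict[str, str]:
    """Return the ABC class of every product in `sales`."""
    total = sum(sales.values())
    # Step 1: order products by sales, descending.
    ordered = sorted(sales.items(), key=lambda kv: kv[1], reverse=True)
    result: dict[str, str] = {}
    running = 0.0
    for product, amount in ordered:
        # Step 2: cumulate sales from the best seller down to this product.
        running += amount
        # Step 3: percentage of cumulated sales vs. total sales.
        share = running / total
        # Step 4: assign the class whose boundaries contain the share.
        for name, lower, upper in CLASSES:
            if lower < share <= upper:
                result[product] = name
                break
    return result


print(abc_classify(SALES))
# {'P1': 'A', 'P2': 'B', 'P3': 'B', 'P4': 'C', 'P5': 'C'}
```

P3 reaches exactly 90% and therefore still falls into Class B, because the upper boundary is inclusive.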

Marco Russo already blogged about this here. He does the classification in a calculated column based on the overall sales of each product. Because calculated columns are computed when the data is loaded, this classification does not react to the filters you apply in the final report. If, for example, a customer was in Class A based on total sales but had no sales last year, a report for last year that uses this classification may give you misleading results.
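To see why a classification computed once at load time can mislead, the following Python sketch classifies the same fact rows twice. The first pass runs over all years, as a calculated column would. The second runs for a single filtered year, as the dynamic approach does. The products, years, and amounts are hypothetical.

```python
# Hypothetical fact rows: (product, year, sales amount).
FACT = [
    ("P1", 2007, 900.0), ("P2", 2007, 50.0), ("P3", 2007, 50.0),
    ("P2", 2008, 300.0), ("P3", 2008, 200.0),
]


def sales_by_product(fact, year=None):
    """Sum sales per product, optionally restricted to one year (the 'filter')."""
    totals = {}
    for product, y, amount in fact:
        if year is None or y == year:
            totals[product] = totals.get(product, 0.0) + amount
    return totals


def classify(sales):
    """ABC class per product: A up to 70 %, B up to 90 %, C for the rest."""
    total = sum(sales.values())
    running, classes = 0.0, {}
    for product, amount in sorted(sales.items(), key=lambda kv: kv[1], reverse=True):
        running += amount
        share = running / total
        classes[product] = "A" if share <= 0.7 else "B" if share <= 0.9 else "C"
    return classes


static = classify(sales_by_product(FACT))         # like a calculated column
dynamic = classify(sales_by_product(FACT, 2008))  # evaluated within the 2008 filter
print(static)   # {'P1': 'A', 'P2': 'B', 'P3': 'C'}
print(dynamic)  # {'P2': 'A', 'P3': 'C'}
```

Statically, P1 is a Class A product even though it had no sales in 2008. P2 looks like Class B, although it is the best seller of 2008.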

In this post I will show how to do this kind of calculation on the fly, always in the context of the current filters. I am using Adventure Works DW 2008 R2 (download) as my sample data and create a dynamic ABC analysis of the products.

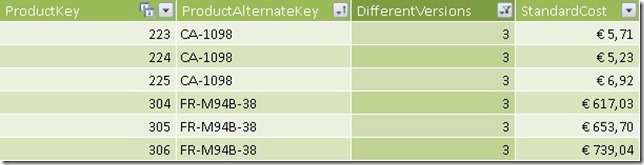

The first thing we notice is that our product table is a slowly changing dimension of type 2: there are several rows for the same product because every change is tracked as a new row in the same table.

So we want to do our classification on the ProductAlternateKey (business key) column instead of the ProductKey (surrogate key) column.
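The effect of grouping by the business key can be sketched in Python. The keys and amounts below are hypothetical. Two surrogate keys that point to the same ProductAlternateKey must be added up before ranking. Otherwise one product is ranked twice, as two weaker "products".

```python
from collections import defaultdict

# Hypothetical SCD type 2 rows: (ProductKey, ProductAlternateKey).
# Surrogate keys 1 and 2 are two versions of the same product "BK-1".
DIM_PRODUCT = [(1, "BK-1"), (2, "BK-1"), (3, "BK-2")]

# Hypothetical fact rows: (ProductKey, sales amount).
FACT_SALES = [(1, 100.0), (2, 50.0), (3, 120.0)]


def sales_by(key_index, dim, fact):
    """Sum sales per surrogate key (key_index=0) or business key (key_index=1)."""
    lookup = {row[0]: row[key_index] for row in dim}
    totals = defaultdict(float)
    for product_key, amount in fact:
        totals[lookup[product_key]] += amount
    return dict(totals)


print(sales_by(0, DIM_PRODUCT, FACT_SALES))  # {1: 100.0, 2: 50.0, 3: 120.0}
print(sales_by(1, DIM_PRODUCT, FACT_SALES))  # {'BK-1': 150.0, 'BK-2': 120.0}
```

By surrogate key, ProductKey 3 looks like the best seller. By business key, BK-1 is.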

First we have to create a ranking of our products:

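```dax
[Rank CurrentProducts]:=
-- rank only if exactly one product is in context and it has sales
IF(HASONEVALUE(DimProduct[ProductAlternateKey]),
    IF(NOT(ISBLANK([SUM SA])),
        RANKX(
            -- all products, but keep filters on other DimProduct columns
            CALCULATETABLE(
                VALUES(DimProduct[ProductAlternateKey]),
                ALL(DimProduct[ProductAlternateKey])),
            [SUM SA])))
```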

First we check that there is only one product in the current context and that this product has sales. Only if both conditions hold do we calculate its rank. We need CALCULATETABLE() so that the ranking respects filters currently applied to other columns of the DimProduct table. For example, if a filter is applied to DimProduct[ProductSubcategoryKey], we want to see the ranking within the selected subcategory and not against all products.
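The behavior of [Rank CurrentProducts] can be mimicked in Python to show the two guards and the effect of the subcategory filter. This is a sketch under assumptions: the products, subcategories, and amounts are hypothetical. Ties are handled like RANKX's defaults: descending order, tied values share a rank, and the following rank is skipped.

```python
# Hypothetical products: business key -> (subcategory, sales); None means no sales.
PRODUCTS = {
    "BK-1": ("Bike Racks", 300.0),
    "BK-2": ("Bike Racks", 500.0),
    "BK-3": ("Bike Racks", 300.0),
    "BK-4": ("Helmets", 900.0),
    "BK-5": ("Bike Racks", None),
}


def rank_current_product(selected, subcategory=None):
    """Mimic [Rank CurrentProducts] for the products in the current context."""
    # Guard 1: only rank when exactly one product is in context.
    if len(selected) != 1:
        return None
    own_sales = PRODUCTS[selected[0]][1]
    # Guard 2: products without sales get no rank.
    if own_sales is None:
        return None
    # Rank against all products that survive the other (subcategory) filter.
    peers = [sales for sub, sales in PRODUCTS.values()
             if sales is not None and (subcategory is None or sub == subcategory)]
    # Descending rank; equal sales share a rank, the next rank is skipped.
    return 1 + sum(1 for sales in peers if sales > own_sales)


print(rank_current_product(["BK-2"], "Bike Racks"))  # 1
print(rank_current_product(["BK-1"], "Bike Racks"))  # 2
print(rank_current_product(["BK-3"], "Bike Racks"))  # 2 (tie with BK-1)
print(rank_current_product(["BK-2"]))                # 2 (BK-4 outranks it)
print(rank_current_product(["BK-1", "BK-2"]))        # None
```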

I also created a measure, [SUM SA], just to simplify these expressions.

The second step is to create a running total, starting with the best-selling product (the product with the lowest rank):

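```dax
[CumSA CurrentProducts]:=
SUMX(
    -- the top n products by sales, where n is the rank of the current product
    TOPN(
        [Rank CurrentProducts],
        CALCULATETABLE(
            VALUES(DimProduct[ProductAlternateKey]),
            ALL(DimProduct[ProductAlternateKey])),
        [SUM SA]),
    [SUM SA])
```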

We use a combination of SUMX() and TOPN() here. TOPN() returns the top products ordered by [SUM SA], and we pass our previously calculated rank as the number of products to return. This way we only get the products that have the same or higher sales than the current product. For example, if the current product has rank 3, we sum up the top 3 products to get the cumulated sum for this product. Again we need CALCULATETABLE() to retain other filters on the DimProduct table.
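Here is a Python sketch of the SUMX()/TOPN() combination, using hypothetical sales figures. One detail is worth knowing: when values are tied at the n-th position, DAX's TOPN() returns all tied rows, so it can return more than n rows. The helper below mimics that, which means tied products end up with the same cumulated sum.

```python
# Hypothetical sales per product (business key -> sales amount).
SALES = {"BK-1": 300.0, "BK-2": 500.0, "BK-3": 300.0, "BK-4": 100.0}


def rank(product, sales):
    """Descending rank; tied values share a rank (like RANKX's defaults)."""
    return 1 + sum(1 for s in sales.values() if s > sales[product])


def top_n(n, sales):
    """TOPN-like: the n largest values plus every value tied with the n-th one."""
    ordered = sorted(sales.values(), reverse=True)
    if n <= 0 or not ordered:
        return []
    cutoff = ordered[min(n, len(ordered)) - 1]
    return [s for s in ordered if s >= cutoff]


def cum_sales(product, sales):
    """Mimic SUMX(TOPN([Rank CurrentProducts], ..., [SUM SA]), [SUM SA])."""
    return sum(top_n(rank(product, sales), sales))


for product in SALES:
    print(product, rank(product, SALES), cum_sales(product, SALES))
# BK-1 2 1100.0
# BK-2 1 500.0
# BK-3 2 1100.0
# BK-4 4 1200.0
```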

The third step is pretty easy: we calculate the percentage of the cumulated sales vs. the total sales:

```dax
[CumSA% CurrentProducts]:=
[CumSA CurrentProducts]
    / CALCULATE([SUM SA], ALL(DimProduct[ProductAlternateKey]))
```

This calculation is straightforward and should not need any further explanation.

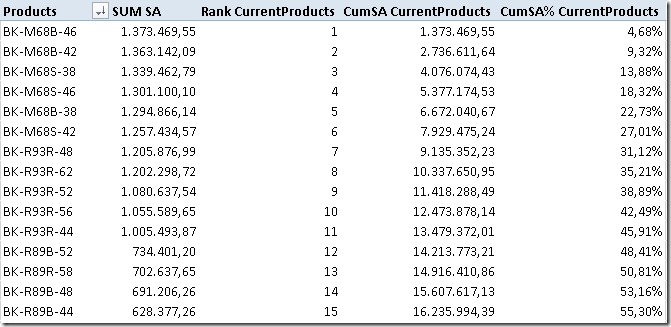

The result of those calculations can be seen here:

To do our final classification, we have to extend our model with a new table that holds our classes and their boundary values:

| Class | LowerBoundary | UpperBoundary |
|-------|---------------|---------------|
| A | 0 | 0.7 |
| B | 0.7 | 0.9 |
| C | 0.9 | 1 |

Class A should contain products whose cumulated sales are between 0 and 0.7, i.e. between 0% and 70%.

Class B should contain products whose cumulated sales are between 0.7 and 0.9, i.e. between 70% and 90%.

etc.

(This table can later be extended to support any number of classes and any boundaries between 0% and 100%.)
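Looking up a class in such a table can be sketched in Python. The sketch assumes the lower boundary is exclusive and the upper boundary inclusive, which matches the filter used in the final calculation below. The four-class table is a hypothetical extension, included to show that the lookup does not depend on the number of classes.

```python
# The classification table from above: (Class, LowerBoundary, UpperBoundary).
CLASSIFICATION = [("A", 0.0, 0.7), ("B", 0.7, 0.9), ("C", 0.9, 1.0)]

# Hypothetical extension with four classes and different boundaries.
FOUR_CLASSES = [("A", 0.0, 0.5), ("B", 0.5, 0.8), ("C", 0.8, 0.95), ("D", 0.95, 1.0)]


def class_of(share, table=CLASSIFICATION):
    """Return the class whose (lower, upper] interval contains the share."""
    for name, lower, upper in table:
        if lower < share <= upper:
            return name
    return None  # share outside (0, 1], e.g. a product without sales


print(class_of(0.7), class_of(0.71), class_of(0.9), class_of(0.95))  # A B B C
print(class_of(0.97, FOUR_CLASSES))                                  # D
```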

To get the boundaries of the selected class, we create two measures, [MinLowerBoundary] and [MaxUpperBoundary], which are later used in our final calculation.
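Judging by their names, [MinLowerBoundary] and [MaxUpperBoundary] return the smallest LowerBoundary and the largest UpperBoundary of the currently selected classes. The Python sketch below assumes exactly that; it is not the author's DAX. It shows why this is useful: selecting several adjacent classes yields one combined range. Under the same assumption, selecting non-adjacent classes such as A and C would also cover B.

```python
# Classification table: class -> (LowerBoundary, UpperBoundary).
CLASSIFICATION = {"A": (0.0, 0.7), "B": (0.7, 0.9), "C": (0.9, 1.0)}


def boundaries(selected_classes):
    """Assumed behavior: MIN of LowerBoundary and MAX of UpperBoundary over the selection."""
    min_lower = min(CLASSIFICATION[c][0] for c in selected_classes)
    max_upper = max(CLASSIFICATION[c][1] for c in selected_classes)
    return min_lower, max_upper


print(boundaries(["B"]))       # (0.7, 0.9)
print(boundaries(["A", "B"]))  # (0.0, 0.9)
print(boundaries(["A", "C"]))  # (0.0, 1.0), which also spans class B
```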

Our final calculation looks like this:

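```dax
IF(NOT(ISFILTERED(Classification[Class])),
    -- no class selected: plain sales
    [SUM SA],
    -- otherwise keep only products whose cumulated share lies in the selected range
    CALCULATE(
        [SUM SA],
        FILTER(
            VALUES(DimProduct[ProductAlternateKey]),
            [MinLowerBoundary] < [CumSA% CurrentProducts]
                && [CumSA% CurrentProducts] <= [MaxUpperBoundary])))
```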

If our Classification table is not filtered, we simply show our [SUM SA] measure. Otherwise, we extend the filter on DimProduct[ProductAlternateKey] with our classification, filtering out all products that do not fall within the boundaries of the currently selected class.
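The logic of the final measure can be sketched in Python. This sketch rests on several assumptions. The cumulated shares are precomputed stand-ins for [CumSA% CurrentProducts], and the product data is hypothetical. An empty selection stands for an unfiltered Classification table. The boundaries are taken as the minimum lower and maximum upper boundary of the selected classes.

```python
# Hypothetical products: key -> (cumulated share, sales). The shares are consistent
# with the sales: 500 / 1000 = 0.5, 750 / 1000 = 0.75, 900 / 1000 = 0.9, ...
PRODUCTS = {"P1": (0.5, 500.0), "P2": (0.75, 250.0), "P3": (0.9, 150.0), "P4": (1.0, 100.0)}
CLASSIFICATION = {"A": (0.0, 0.7), "B": (0.7, 0.9), "C": (0.9, 1.0)}


def sales_classified(selected_classes=()):
    """Total sales, restricted to the selected classes if there are any."""
    if not selected_classes:  # Classification table not filtered: plain [SUM SA]
        return sum(sales for _, sales in PRODUCTS.values())
    lower = min(CLASSIFICATION[c][0] for c in selected_classes)
    upper = max(CLASSIFICATION[c][1] for c in selected_classes)
    # Keep only products whose cumulated share lies in (lower, upper].
    return sum(sales for share, sales in PRODUCTS.values() if lower < share <= upper)


print(sales_classified())            # 1000.0
print(sales_classified(["A"]))       # 500.0
print(sales_classified(["B"]))       # 400.0
print(sales_classified(["A", "B"]))  # 900.0
```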

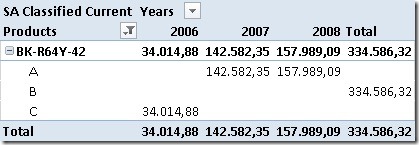

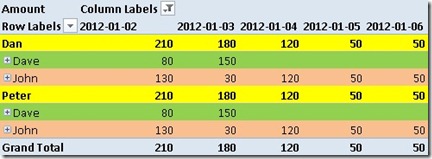

This measure allows us to see the changes of the classification of a specific product e.g. over time:

In 2006 our selected product was in Class C. For 2007 and 2008 it improved and is now in Class A. Still, overall it resides in Class B.

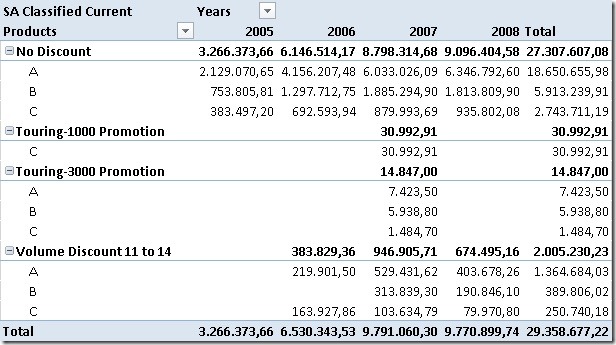

We may also analyze the impact of our promotions on the sales of our classified products:

Our promotion “Touring-1000 Promotion” only had an impact on products in Class C, so we may consider stopping that promotion and investing more in the other promotions, which affect all classes.

The classification can be used everywhere you need it: in the filter, on rows, or on columns; even slicers work. The only drawback is that the on-the-fly calculation can take quite some time. If I find time in the future, I may try to tune the calculations further and update this blog post.

The example workbook can be downloaded here.

Note that it is already in Office 2013 format and cannot be opened with previous versions of Excel/PowerPivot.

It also includes a second set of calculations that use the same logic as described above but do all the calculations without retaining any filters on the DimProduct table. This allows you to filter on Class “A” and ProductSubcategory “Bike Racks” and see that “Bike Racks” are not Class “A” products, or to see which subcategories or categories actually contain Class A, B, or C products!
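The difference between the two sets of calculations can be sketched in Python with hypothetical products and subcategories. One variant classifies only the products that remain after the subcategory filter, which corresponds to retaining DimProduct filters. The other classifies all products first and then filters.

```python
# Hypothetical products: business key -> (subcategory, sales).
PRODUCTS = {
    "BK-1": ("Road Bikes", 600.0),
    "BK-2": ("Helmets", 250.0),
    "BK-3": ("Bike Racks", 90.0),
    "BK-4": ("Bike Racks", 60.0),
}


def classify(products):
    """ABC class per product: A up to 70 %, B up to 90 %, C for the rest."""
    total = sum(sales for _, sales in products.values())
    running, classes = 0.0, {}
    for key, (_, sales) in sorted(products.items(), key=lambda kv: kv[1][1], reverse=True):
        running += sales
        share = running / total
        classes[key] = "A" if share <= 0.7 else "B" if share <= 0.9 else "C"
    return classes


def classes_in_subcategory(subcategory, retain_filters):
    if retain_filters:
        # Classify only the products of the filtered subcategory.
        return classify({k: v for k, v in PRODUCTS.items() if v[0] == subcategory})
    # Classify all products, then show only those of the subcategory.
    overall = classify(PRODUCTS)
    return {k: c for k, c in overall.items() if PRODUCTS[k][0] == subcategory}


print(classes_in_subcategory("Bike Racks", retain_filters=True))   # {'BK-3': 'A', 'BK-4': 'C'}
print(classes_in_subcategory("Bike Racks", retain_filters=False))  # {'BK-3': 'C', 'BK-4': 'C'}
```

With filters retained, BK-3 is the Class A product within Bike Racks. Without them, no bike rack reaches Class A.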
