As you probably know from my last blog post, I am currently upgrading the PowerBI reporting platform of one of my customer from a PowerBI backend (dataset hosted in PowerBI service) to an Azure Analysis Services backend. The upgrade/import of the dataset into Azure Analysis Services itself worked pretty flawless and after switching the connection of the reports everything worked as expected and everyone was happy. However, things got a bit tricky when it came to automatically refreshing the Azure Analysis Services database which was based on an Azure Data Lake Store. For the original PowerBI dataset, this was pretty straight forward as a scheduled refresh from an Azure Data Lake store data source works out of the box. For Azure Analysis Services this is a bit different.

When you build and deploy your data model from Visual Studio, your are prompted for the credentials to access ADLS which are then stored in the data source object of AAS. As you probably know, AAS uses OAuth authentication to access data from ADLS. And this also causes a lot of problems. OAuth is based on tokens and those tokens are only valid for a limited time, by default this is 2 hours. This basically means, that you can process your database for the next 2 hours and it will fail later on with an error message saying that the token expired. (The above applies to all OAuth sources!)

This problem is usually solved by using an Azure Service Principal instead of a regular user account where the token does not expire. Unfortunately, this is not supported at the moment for ADLS data sources and you have to work around this issue.

IMPORTANT NOTE: NONE OF THE FOLLOWING IS OFFICIALLY SUPPORTED BY MICROSOFT !!!

So the current situation that we need to solve is as follows:

- we can only use regular user accounts to connect AAS to ADLS as service principals are not supported yet

- the token expires after 2 hours

- the database has to be processed on a regular basis (daily, hourly, …) without any manual interaction

- manually updating the token is (of course) not an option

Before you continue here, make sure that you read this blog post first: https://blogs.msdn.microsoft.com/dataaccesstechnologies/2017/09/01/automating-azure-analysis-service-processing-using-azure-automation-account/

It describes the general approach of using Azure Automation to process an Azure Analysis Services model and most of the code in this blog post if based on this!

Also this older blog post will be a good read as some concepts and code snippets are reused here.

Back to our example – as we were already using Azure Automation for some other tasks, we decided to also use it here. Also, PowerShell integrates very well with other Azure components and was the language of choice for us. To accomplish our goal we had to implement 3 steps:

- acquire a new OAuth token

- update the ADLS data source with the new token

- run our processing script

I could copy the code for the first step more or less from one of my older blog post (here) where I used PowerShell to acquire an OAuth token to trigger a refresh in PowerBI.

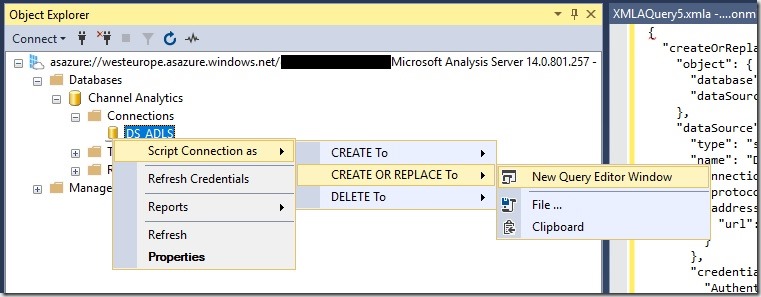

The second step is to update ADLS data source of our Azure Analysis Services model. To get started, the easiest thing to do is to simply open the AAS database in SQL Server Management Studio and let it script the existing datasource for you:

The resulting JSON will look similar to this one:

“createOrReplace”: {

“object”: {

“database”: “Channel Analytics”,

“dataSource”: “DS_ADLS”

},

“dataSource”: {

“type”: “structured”,

“name”: “DS_ADLS”,

“connectionDetails”: {

“protocol”: “data-lake-store”,

“address”: {

“url”: “https://mydatalake.azuredatalakestore.net”

}

},

“credential”: {

“AuthenticationKind”: “OAuth2”,

“token_type”: “********”,

“scope”: “********”,

“expires_in”: “********”,

“ext_expires_in”: “********”,

“expires_on”: “********”,

“not_before”: “********”,

“resource”: “********”,

“id_token”: “********”,

“kind”: “DataLake”,

“path”: “https://mydatalake.azuredatalakestore.net/”,

“RefreshToken”: “********”,

“AccessToken”: “********”

}

}

}

}

The important part for us is the “credential” field. It contains all the information necessary to authenticate against our ADLS store. However, most of this information is sensitive so only asterisks are displayed in the script. The rest of the JSON (except for the “credential” field) is currently hardcoded in the PowerShell cmdlet so if you want to use it, you need to change this manually!

The PowerShell cmdlet then combines the hardcoded part with an updated “credential”-field which is obtained by invoking a REST request to retrieve a new OAuth token. The returned object is modified a bit in order to match the required JSON for the datasource.

Once we have our final JSON created, we can send it to our Azure Analysis Services instance by calling the Invoke-ASCmd cmdlet from the SqlServer module.

Again, please see the original blog post mentioned above for the details of this approach.

After we have updated our datasource, we can simply call our regular processing commands which will then be executed using the newly updated credentials.

The script I wrote allows you to specify which objects to process in different ways:

- whole database (by leaving AASTableName and AASPartitionName empty)

- a single or multiple table and all its partitions (by leaving only AASPartitionName empty)

- or multiple partitions of a single table (by specifying exactly one AASTableName and multiple AASPartitionNames

If multiple tables or partitions are specified, the elements are separated by commas (“,”)

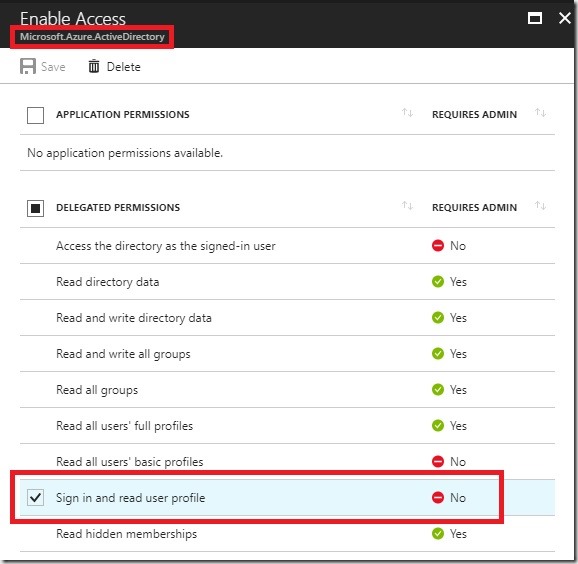

So to make the Runbook work in your environment, follow all the initial steps as described in the original blog post from Microsoft. In addition, you also need to create an Application (Type = “Native”) in your Azure Active Directory to obtain the OAuth token programmatically. This application needs the “Sign in and read user profile” permission from the API “Windows Azure Active Directory (Microsoft.Azure.ActiveDirectory)”:

Also remember the ApplicationID, it will be used as a parameter for the final PowerShell Runbook (=parameter “ClientID”!

When it comes to writing the PowerShell code, simply use the code from the download at the end of this blog post.

For the actual credential that you are using, make sure that it has the following permissions:

- to update the AAS datasource (can be set in the AAS model or for the whole server)

- has access to the required ADLS files/folders which are processed (can be set e.g. via ADLS Data Explorer)

- (if you previously used your own account to do all the AAS and ADLS development, this should work just fine)

In general, a similar approach should work for all kinds of datasources that require OAuth authentication but so far I have only tested it with Azure Data Lake Store!

Download: AAS_Process_OAuth_Runbook.ps1

Great post. I’m using an Azure Data Warehouse. Looks like I’ll have the same issue as ADW doesn’t support other connection types.

Hi Chris, actually, for Azure SQL DW you could also use SQL authentication which works just fine with regular automated refreshes. There is no need to OAuth from my point of view

-gerhard

Have you solved for situations where the Oath token expires before the processing completes (and then the process fails because credentials to the Data lake source are invalid)?

usually the tokens should be valid for 1 hour

it may be possible to issue a token that is valid for a longer time but I have not done this yet

You would need to check if you can specify how long the token should be valid when generating it using Invoke-WebRequest

Hi Gerhard, I’m seeing this issue with a Oauth connection to a SharePoint list. When I script the connection I see there is a refresh token, when I refresh list via SMSS seems to handle token refresh automatically, but not via PowerShell. I’m starting to think this is a bug? Seems crazy that despite having a username and password that authenticates fine to SharePoint I can’t use that on a scheduled refresh in SSAS…

so you are saying that when you refresh the token via SSMS it works correctly and if the token expires a new token is generated using the refresh-token?

and the same is not working if you update the connection using PowerShell?

Hi Gerhard, I build the cube pointing it to Data lake. I deployed the cube on AAS and processed with small data set where I just pulled 1000 records for each partitions and it worked fine but when i tried to process it with large data set with ~45Million record per partition (48 partition), it failed giving error

Error 0: Error processing partition ‘Partition 47’ of table ‘table 1’ [Internal Error Code: ‘0xc1030001’].

Error 0: Unable to complete Tabular transaction.

Do you have any solution on this ? Need to change any server property?

Thank you

Don’t know, looks pretty random to me and more like an issue with AAS. I suggest to open a support case at Microsoft

-gerhard

It looks a good post but when I tried to use same REST API Call to obtain refresh token, have recieved following error with API call.

“error_description”: “AADSTS50034: The user account does not exist in the directory. To sign into this application, the account must be added to the directory.

Obviously, RunBook credentials are for Service Principal and Service principal does not exists as USER in tenant.

Can you please help me with what wrong am doing?

in the sample I use a regular user account for both, connecting to ADLS and also to connect to AAS to actually update the data source

so the RunBook credential has to hold a username (user@domain.com) and its password

there are not service principals involved at all

it looks like you used a service principal in your credential

would this also work in cases where user accounts have to go through 2FA for access?

no, for 2FA/MFA this would not work

but you could create a regular AAD account without 2FA/MFA and (ab)use it as service account

Hi,

My cube is deployed on on-prem server. I trying to follow your approach but it is not working. It is saying given credentials cannot be used. Can you please help me out?

well, do you use the same credentials as you use e.g. when working with VS?

did you set up your AAD application correctly?

Gerhard, Good Day! I ve got an on-prem SSAS Tabular 19 and run this PowerShell script in SQL Agent to refresh cred-s of Office 365 datasource:

$query = ‘select * from $System.TMSCHEMA_DATA_SOURCES’

$server = “XXXX”

$database = “XXX”

$tenant = “XXX”

function RefreshSharepointToken {

Param([string]$refreshToken)

$uri = “https://login.windows.net/$tenant/oauth2/token”

$body = @{

refresh_token = $refreshToken;

grant_type = “refresh_token”;

}

return Invoke-RestMethod -Uri $uri -Method Post -Body $body

}

function UpdateSSASDataSource {

Param($dataSource, $tokenResponse)

# get the expired datetime

$startDate = Get-Date -Year 1970 -Month 1 -Day 1 -Hour 0 -Minute 0 -Second 0

$expireDateTime = $startDate.AddSeconds($tokenResponse.expires_on).tostring(“R”)

# convert json fields to objects

$connectionDetails = $dataSource.ConnectionDetails | ConvertFrom-Json

$credential = $dataSource.Credential | ConvertFrom-Json

$dataSourceName = $dataSource.Name

$connectionDetailsProtocol = $connectionDetails.protocol

$connectionDetailsAddressUrl = $connectionDetails.address.url

$credentialAuthenticationKind = $credential.AuthenticationKind

$credentialPrivacySetting = $credential.PrivacySetting

$credentialProviderType = $credential.ProviderType

$tokenResponseRefreshToken = $tokenResponse.refresh_token

$tokenResponseAccessToken = $tokenResponse.access_token

$tmsl = @”

{

“createOrReplace”: {

“object”: {

“database”: “$database”,

“dataSource”: “$dataSourceName”

},

“dataSource”: {

“type”: “structured”,

“name”: “$dataSourceName”,

“connectionDetails”: {

“protocol”: “$connectionDetailsProtocol”,

“address”: {

“url”: “$connectionDetailsAddressUrl”

},

“authentication”: null,

“query”: null

},

“credential”: {

“AuthenticationKind”: “$credentialAuthenticationKind”,

“Expires”: “$expireDateTime”,

“RefreshToken”: “$tokenResponseRefreshToken”,

“ProviderType”: “$credentialProviderType”,

“PrivacySetting”: “$credentialPrivacySetting”,

“AccessToken”: “$tokenResponseAccessToken”

}

}

}

}

“@

Write-Output $tmsl

## execute the TMSL and return the result

[xml]$results = Invoke-ASCmd -Server $server -Database $database -Query $tmsl

return $results

}

## query the model for data sharepoint data sources

[xml]$xmlResults = Invoke-ASCmd -Server:$server -Database:$database -Query:$query

## look for OAuth2 creds and refresh them

foreach ($dataSource in $xmlResults.return.root.row) {

$dataSourceName = $dataSource.Name

if ($dataSource.Credential) {

$credJson = ConvertFrom-Json $dataSource.Credential

## update any OAuth2 data sources

if ($credJson.AuthenticationKind -eq “OAuth2”) {

## get the Expired time for the token

$expireTime = [datetime]::parseexact($credJson.Expires, “R”, $null)

$currentTime = [System.TimeZoneInfo]::ConvertTimeBySystemTimeZoneId((Get-Date), “Greenwich Standard Time”)

## only update expired tokens

#if ($currentTime -gt $expireTime) {

## Get the refresh token and call the API to refresh

Write-Output “Refreshing Token for $dataSourceName”

$token = $credJson.RefreshToken

$refreshToken = RefreshSharepointToken $token

## check that we have an access token

if ($refreshToken.access_token) {

## update the TMSL with the new access token

Write-Output “Updating $dataSourceName”

$updateResults = UpdateSSASDataSource $dataSource $refreshToken

}

else {

Write-Error “Could not retrive access_token”

Write-Error $refreshToken

}

#}

}

}

}

It looks like refreshing token but then SSAS throughs an error :

Failed to save modifications to the server. Error returned: ‘COM error: Microsoft.Data.Mashup; The given privacy setting is not recognized..

May be you can advice anything – what is wrong with the script or settings SQL?

Thank you!

it is pretty hard to debug a script like that without having the proper environment at hand

what I did to come up with my code was to script the change in SSMS and the rebuild it in PowerShell. With some try-and-error this worked just fine in my case and will be similar also for O365

I am trying to connect Azure SQL DW in Direct Query mode with SSDT and get this error – Failed to save modifications to the server. Datasource has authentication kind OAuth2. This is not supported in DirectQuery 1400 mode.

I read in the MS docs that Direct Query mode is not supported with OAuth credentials.

Need help as I am stuck.

well, if MS docs already states that Direct Query mode is not supported with OAuth credentials, I do not know how I could help you here?!

Hello Gerhard,

This is a great post.

We are refreshing Azure Analysis Services tabular model from Azure SQL Database using REST API in ADF and are facing similar issues. Oddly however we don’t see the issue everyday so when we run the refresh.

Since the post is from a few years ago, I wanted to check if there are any upgrades to this functionality?

We want to avoid SQL Authenticated accounts in our Azure SQL Database.

Thank you.

Hi Manav,

unfortunately I am not aware of any change in behavior here 🙁

-gerhard