Azure Data Factory v2 is Microsoft Azure’s Platform as a Service (PaaS) solution to schedule and orchestrate data processing jobs in the cloud. As the name implies, this is already the second version of this kind of service and a lot has changed since its predecessor. One of these changes is how datasets and pipelines are parameterized and how these parameters are passed between the different objects. The basic concepts behind this process are well explained by the MSDN documentation, for example in Create a trigger that runs a pipeline on a schedule. In this example a trigger is created that runs a pipeline every 15 minutes and passes the property “scheduledTime” of the trigger to the pipeline. This is the JSON expression that is used:

    {
        "scheduledRunTime": "@trigger().scheduledTime"
    }

@trigger() references the object that is returned by the trigger, and this object has a property called “scheduledTime”. So far so good: this is documented and fulfills the basic needs. Some of these properties are also documented under System variables supported by Azure Data Factory, but unfortunately not all of them.

These trigger objects can be much more complex and may contain additional information that is not documented. This makes it pretty hard for the developer to know which properties exist and how they can be used. A good example is Event-Based Triggers, which were introduced just recently: the documentation only mentions the properties “fileName” and “folderPath”, but the object contains much more (see further down for details). For simplicity I will stick to scheduled triggers at this point, but the very same concept applies to all kinds of triggers and also to all other internal objects like @pipeline(), @dataset() or @activity()!

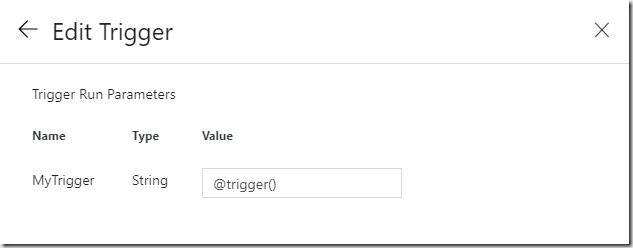

So how can you investigate those internal objects like @trigger() and see what they actually look like? Well, the answer is quite simple – just pass the object itself without any property to the pipeline. The target parameter of the pipeline can either be of type String or Object.

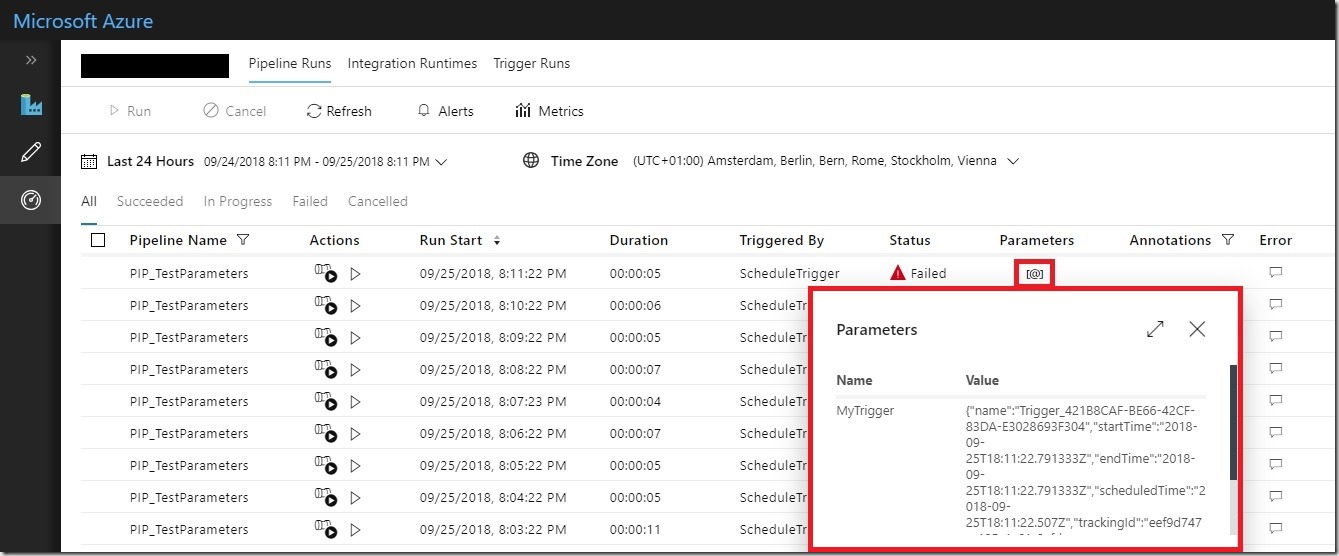
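To make the two variants concrete, here is a rough Python sketch that builds a schedule trigger definition as a plain dictionary and prints it as JSON. The trigger name, the pipeline name, the parameter name `triggerObject` and the start time are placeholders I made up for illustration. The overall layout is my reading of the schedule-trigger JSON from the documentation, so please check it against your own factory before relying on it.

```python
import json


def build_schedule_trigger(pipeline_name: str) -> dict:
    """Build a schedule trigger definition that fires every 15 minutes.

    All names and the start time are placeholders chosen for this sketch.
    """
    return {
        "name": "MyScheduleTrigger",  # hypothetical trigger name
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Minute",
                    "interval": 15,
                    "startTime": "2018-09-25T18:00:00Z",  # placeholder
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    },
                    "parameters": {
                        # Documented variant: pass a single property.
                        "scheduledRunTime": "@trigger().scheduledTime",
                        # Variant from this post: pass the whole object.
                        "triggerObject": "@trigger()",
                    },
                }
            ],
        },
    }


trigger_definition = build_schedule_trigger("MyPipeline")
print(json.dumps(trigger_definition, indent=2))
```

The pipeline then needs two matching parameters: `scheduledRunTime` and `triggerObject`, the latter of type String or Object.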

This allows you to see the whole object on the Monitoring-page once the pipeline is triggered:

For the Scheduled-trigger, the object looks like this:

    {
        "name": "Trigger_12348CAF-BE66-42CF-83DA-E3028693F304",
        "startTime": "2018-09-25T18:00:22.4180978Z",
        "endTime": "2018-09-25T18:00:22.4180978Z",
        "scheduledTime": "2018-09-25T18:00:22.507Z",
        "trackingId": "1234a112-7bb9-4ba6-b032-6189d6dd8b73",
        "clientTrackingId": "12346637084630521889360938860CU33",
        "code": "OK",
        "status": "Succeeded"
    }

And as you can guess, you can pass any of these properties to the pipeline using the syntax “@trigger().<property_name>” or even the whole object! The syntax can of course also be combined with all the built-in expressions.
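If you want to play with this lookup offline, the following Python sketch mimics it against the scheduled-trigger object captured from the Monitoring page. The `resolve` helper is purely hypothetical and only handles the two forms discussed here; it is not how Data Factory evaluates expressions internally. The date handling at the end only approximates what a built-in expression such as `@formatDateTime(trigger().scheduledTime, 'yyyy-MM-dd')` would do with the value.

```python
import json
from datetime import datetime, timezone

# The scheduled-trigger object as captured from the Monitoring page.
TRIGGER_JSON = """
{
    "name": "Trigger_12348CAF-BE66-42CF-83DA-E3028693F304",
    "startTime": "2018-09-25T18:00:22.4180978Z",
    "endTime": "2018-09-25T18:00:22.4180978Z",
    "scheduledTime": "2018-09-25T18:00:22.507Z",
    "trackingId": "1234a112-7bb9-4ba6-b032-6189d6dd8b73",
    "clientTrackingId": "12346637084630521889360938860CU33",
    "code": "OK",
    "status": "Succeeded"
}
"""

PREFIX = "@trigger()"


def resolve(expression: str, trigger: dict):
    """Resolve '@trigger()' or '@trigger().<property_name>' against a dict."""
    if expression == PREFIX:
        return trigger  # the whole object
    if not expression.startswith(PREFIX + "."):
        raise ValueError(f"unsupported expression: {expression!r}")
    # Everything after '@trigger().' is treated as a single property name.
    return trigger[expression[len(PREFIX) + 1:]]


trigger = json.loads(TRIGGER_JSON)

scheduled = resolve("@trigger().scheduledTime", trigger)
print(scheduled)

# Parse the timestamp and reformat it as a plain date.
parsed = datetime.strptime(scheduled, "%Y-%m-%dT%H:%M:%S.%fZ")
parsed = parsed.replace(tzinfo=timezone.utc)
print(parsed.strftime("%Y-%m-%d"))
```

Asking for a property that does not exist raises a `KeyError` here, which is a handy reminder to check the real object first before wiring a property into a pipeline parameter.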

This should hopefully make it easier for you to build and debug more complex Azure Data Factory v2 pipelines!

Below you can find an example of the object that an Event-Based Trigger creates:

    {
        "name": "Trigger_12348CAF-BE66-42CF-83DA-E3028693F304",
        "outputs": {
            "headers": {
                "Host": "prod-1234.westeurope.logic.azure.com",
                "x-ms-client-tracking-id": "1234c153-fc96-4b8e-9002-0f5096bcd744",
                "Content-Length": "52",
                "Content-Type": "application/json; charset=utf-8"
            },
            "body": {
                "folderPath": "data",
                "fileName": "myFile.csv"
            }
        },
        "startTime": "2018-09-25T18:22:54.8383112Z",
        "endTime": "2018-09-25T18:22:54.8383112Z",
        "trackingId": "07b3d1a1-8735-4ff0-9cc6-c83d95046101",
        "clientTrackingId": "56dcc153-fc96-4b8e-9002-0f5096bcd744",
        "status": "Succeeded"
    }
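Because the interesting values are nested here, a property path has to walk through “outputs” and “body” first. The Python sketch below shows that walk on the object above; `get_path` is a hypothetical helper for illustration only. By analogy with the scheduled trigger, the corresponding expression in a trigger parameter would presumably be `@trigger().outputs.body.fileName`, but I am inferring that from the object's shape rather than quoting the documentation.

```python
import json
import posixpath
from functools import reduce

# The event-based trigger object as captured from the Monitoring page.
EVENT_TRIGGER_JSON = """
{
    "name": "Trigger_12348CAF-BE66-42CF-83DA-E3028693F304",
    "outputs": {
        "headers": {
            "Host": "prod-1234.westeurope.logic.azure.com",
            "x-ms-client-tracking-id": "1234c153-fc96-4b8e-9002-0f5096bcd744",
            "Content-Length": "52",
            "Content-Type": "application/json; charset=utf-8"
        },
        "body": {
            "folderPath": "data",
            "fileName": "myFile.csv"
        }
    },
    "startTime": "2018-09-25T18:22:54.8383112Z",
    "endTime": "2018-09-25T18:22:54.8383112Z",
    "trackingId": "07b3d1a1-8735-4ff0-9cc6-c83d95046101",
    "clientTrackingId": "56dcc153-fc96-4b8e-9002-0f5096bcd744",
    "status": "Succeeded"
}
"""


def get_path(obj: dict, dotted_path: str):
    """Follow a dotted path such as 'outputs.body.fileName' through nested dicts."""
    return reduce(lambda node, key: node[key], dotted_path.split("."), obj)


event = json.loads(EVENT_TRIGGER_JSON)

folder = get_path(event, "outputs.body.folderPath")
file_name = get_path(event, "outputs.body.fileName")

# Combine both parts into the path of the file that fired the trigger.
print(posixpath.join(folder, file_name))
```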

Note that, as of this writing, the object does not say whether the trigger fired because the file was created, updated or deleted! I hope the product team will add this in the near future.