If you follow my blog regularly, you may have noticed that many of my posts cover scenarios where I need to create relationships on columns with non-unique values, for example when handling SCD2 facts and dimensions or dealing with parallel hierarchies. In all of those examples I use a combination of the FILTER and CONTAINS functions to create the non-unique relationships between tables. Recently I ran into performance issues with this approach and started thinking about how to tune these kinds of "relationships".

First of all, I took a look at the DAX Query Plan using SQL Server Profiler to get some more information on what’s going on inside xVelocity and DAX. I used a calculation from my previous post as an example:

    Value_Sender:=
    CALCULATE(
        [Value_SUM],
        FILTER(
            'Facts',
            CONTAINS(
                'Sender',
                'Sender'[SenderID],
                'Facts'[SenderID]
            )
        )
    )

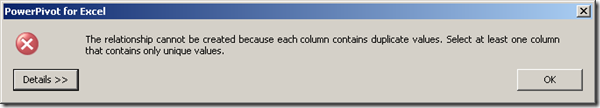

We had to use this calculation as SenderID was not unique in our Sender-table (and of course also not in our Facts-table). When we tried to create a relationship we got the error

"The relationship cannot be created because each column contains duplicate values. Select at least one column that contains only unique values."

This makes perfect sense, as tabular models natively support only 1:n / n:1 relationships, so at least one column has to contain only unique values.

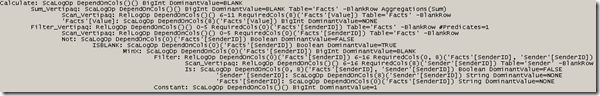

The calculation above, using FILTER and CONTAINS, still allowed us to link our tables on those non-unique column values. The drawback is that it may not perform very well, because xVelocity relies heavily on predefined relationships between columns and tables, whereas here we create the relationship on the fly at query time. Furthermore, CONTAINS is not a native xVelocity function, so the engine has to call back to the DAX formula engine, as we can see in the profiler:

VertiPaq SE Query / 0 – VertiPaq Scan (8 times of which 4 are of subclass Internal)

[CallbackDataID(….)] is always an indicator that the xVelocity engine could not resolve the query on its own and had to call functions from the DAX formula engine, as Jeffrey Wang described here. In the end this results in poor query performance (at least compared to a pure xVelocity query).

DAX Query Plan / 1 – DAX VertiPaq Logical Plan (just for the sake of completeness)

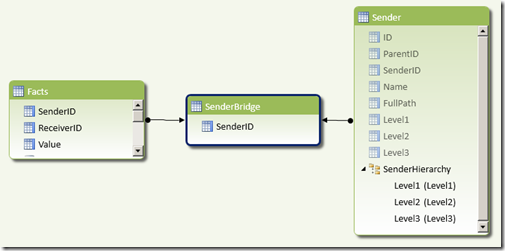

So the key to tuning these kinds of relationships is to relate the tables at design time in order to get full xVelocity performance at query time. As the tables cannot be related directly, we have to add an intermediate table containing only unique values, in our case the unique SenderIDs. This table can then be used to create 1:n relationships with our Sender and our Facts tables.

| SenderID |
| --- |
| TOT |
| EUROPE |
| GER |
| FR |
| NA |
| USA |
| CAN |
| EXTERNAL |
| TOT_2 |
| JV |
| HOLD |

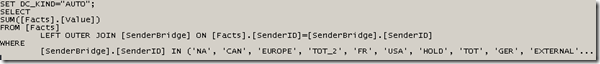

If you are using a SQL database as your data source, you can simply create a view using SELECT DISTINCT or write the SELECT statement directly in PowerPivot.
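Where the source is not a SQL database, the same list of unique IDs could in principle be produced in DAX instead. The following is only a minimal sketch: it assumes a newer DAX version that provides UNION and accepts a table expression in DISTINCT, and it takes the IDs from both tables so that every SenderID on either side finds a match in the intermediate table. The table and column names are the ones used in this post.

```dax
-- Sketch only: UNION and table-valued DISTINCT are assumed to be available
EVALUATE
DISTINCT(
    UNION(
        DISTINCT('Sender'[SenderID]),  -- unique IDs used in the Sender table
        DISTINCT('Facts'[SenderID])    -- unique IDs used in the Facts table
    )
)
```

UNION keeps duplicate rows, which is why the outer DISTINCT is needed to end up with a column that can sit on the "1" side of both relationships.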

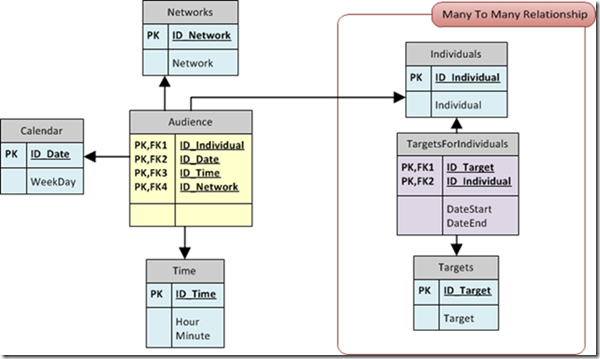

Once the table has been added we can create relationships as shown below:

This design is similar to a many-to-many design, with the only difference being that we combined our intermediate table and our bridge table into one single table. The rest is similar to resolving a many-to-many relationship, as I described here. Once we have created these relationships, we can change our calculation as follows:

    Value_Sender_Bridge:=
    CALCULATE(
        [Value_SUM],
        'SenderBridge',
        'Sender'
    )

This calculation can be resolved by the xVelocity engine without any callbacks to the DAX formula engine. A look at the profiler trace confirms this:

VertiPaq SE Query / 0 – VertiPaq Scan (4 times of which 2 are of subclass Internal)

None of the VertiPaq SE queries used [CallbackDataID(….)] or any other complex function. Only joins were used, which the xVelocity engine can handle very easily:

DAX Query Plan / 1 – DAX VertiPaq Logical Plan (just for the sake of completeness)

The logical plan is also drastically simpler: it contains only Sum_Vertipaq and Scan_Vertipaq operators, which tells us that the query can be executed in pure Vertiscan mode.

Details on Vertipaq operators and Vertiscan mode can be found here.
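To reproduce the comparison, both measures can be evaluated side by side in a single DAX query, for example from SSMS while the Profiler trace is running. This is just a sketch and assumes that both measures from this post are defined in the model and that the relationships to the intermediate table are already in place:

```dax
-- Sketch only: assumes [Value_Sender] and [Value_Sender_Bridge] both exist in the model
EVALUATE
ADDCOLUMNS(
    VALUES('Sender'[SenderID]),                   -- one row per SenderID found in Sender
    "Value_Sender", [Value_Sender],               -- FILTER/CONTAINS version
    "Value_Sender_Bridge", [Value_Sender_Bridge]  -- intermediate-table version
)
ORDER BY 'Sender'[SenderID]
```

Both columns should show the same numbers for every SenderID. Running each measure in a separate query makes it easier to tell which VertiPaq SE queries belong to which calculation.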

I have not yet run many tests on bigger tables to compare the overall performance, but this may follow in a later post. With this post I just wanted to show how to link tables on columns containing non-unique values.

It would be great if tabular models supported these kinds of relationships out of the box, so that you could create them like any other relationship using drag and drop in the diagram view. This would have made many of my previous customer workshops much easier.
