Some time ago Microsoft released the first preview of a tool which allows you to monitor and control your Azure Data Factory (ADF). It is a web UI where you can select your Pipeline, Activity or Dataset and check its execution state over time. However, from my very personal point of view the UI could be much better, especially much clearer(!) as it is at the moment. But that’s not really a problem as the thing I like the most about ADF is that its quite open for developers (for example Custom C#/.Net Activities) and it also offers a quite comprehensive REST API to control an manage it.

For our monitoring purposes we are mainly interested in the LIST interface but we could do basically every operation using this API. In my example I only used the Dataset API, the Slices API and the Pipeline API.

First we start with the Dataset API to get a list of all data sets in our Data Factory. This is quite simple as we just need to build our URL of the REST web service like this:

- https://management.azure.com/subscriptions/{SubscriptionID}/resourcegroups/{ResourceGroupName}/providers/Microsoft.DataFactory/datafactories/{DataFactoryName}/datasets?api-version={api-version}

You can get all of this information for the Azure Portal by simply navigating to your Data Factory and checking the URL which will be similar to this one:

- https://portal.azure.com/#resource/subscriptions/12345678–3232–4a04–a0a6–6e44bf2f5d11/resourceGroups/myResourceGroup/providers/Microsoft.DataFactory/dataFactories/myDataFactory

So this would be my values for the API Call:

– {SubscriptionID} would be “12345678-3232-4a04-a0a6-6e44bf2f5d11”

– {ResourceGroupName} would be “myResourceGroup”

– {DataFactoryName} would be “myDataFactory”

– {api-version} would be a fixed value of “2015-10-01”

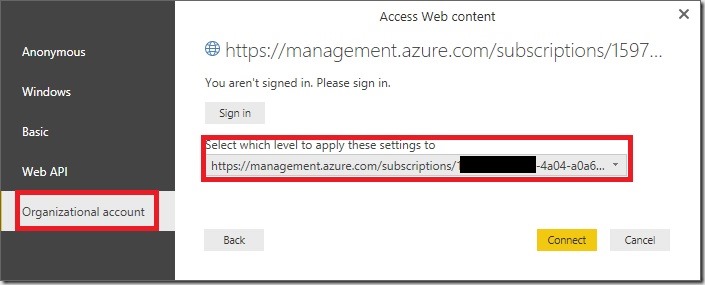

Once you have your URL you can use PowerBI to query the API using Get Data –> From Web

Next you need to authenticate using your Personal or Organizational Account – the same that you use to sign in to the Portal – and also the level for which you want to use the credentials. I’d recommend you to set it either to the subscription level or to the data-factory itself, depending on your security requirements. This ensures that you are not asked for credentials for each different API:

This works in a very similar way also for the Slices API, the Pipeline API and all other APIs available! The other transformations I used are regular PowerQuery/M steps done via the UI so I am not going to describe them in more detail here. Also, setting up the relationships in our final PowerPivot model should be straight forward.

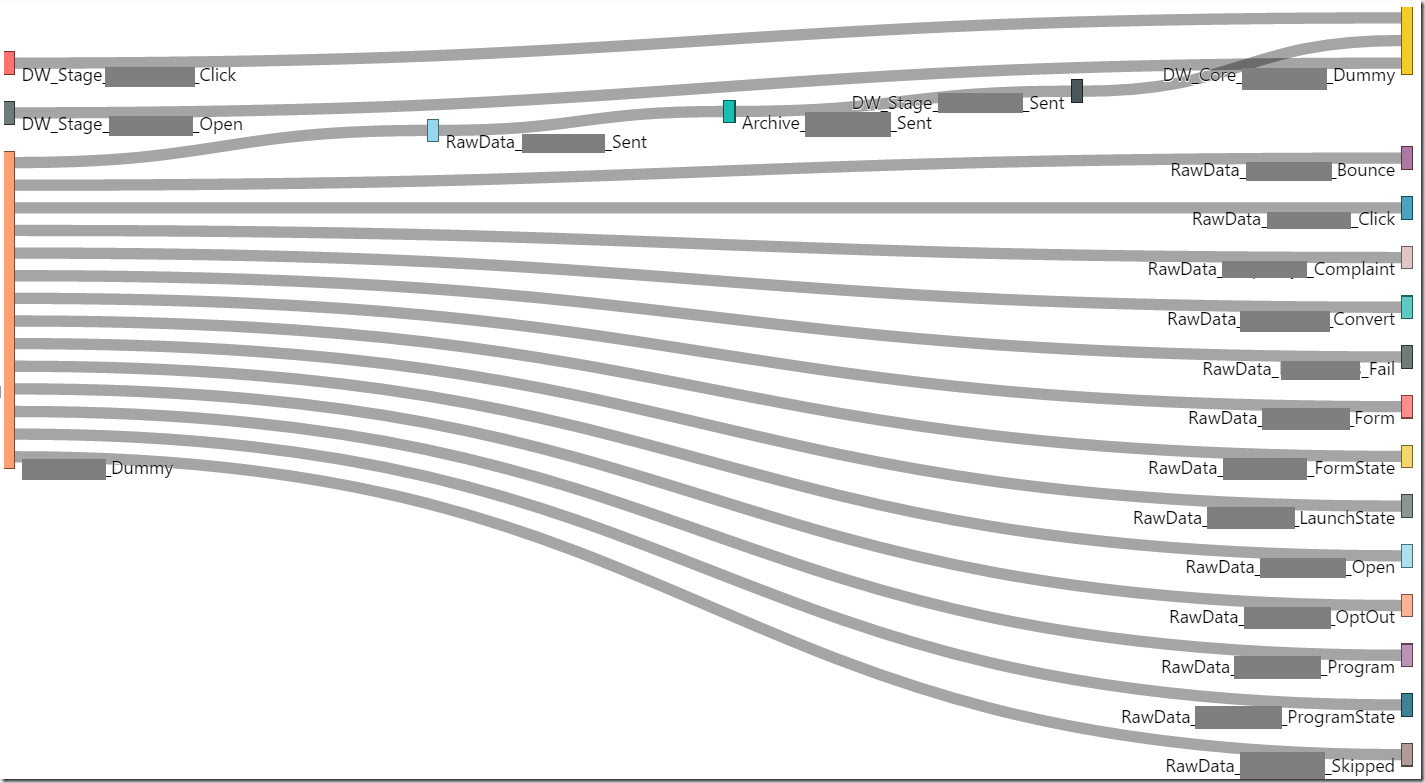

Now that we have all the required data in place, we can start with our report. I used some custom visuals for the calendar view, some slicers and a simple table to show the details. I also used a Sankey Chart to visualize the dependencies between the datasets.

Compared to the standard GUI for monitoring this provides a much better overview of slices and their current states and it also allows easy filtering. I am sure there are a lot of other PowerBI visualizations which would make a lot of sense here, these are just to give you an idea how it could look like, but of course you have all the freedom PowerBI offers you for reporting!

The only drawback at the moment is that you cannot reschedule/reset slices from PowerBI but for my monitoring-use-case this was not a problem at all. Also, I did not include the SliceRun API in my report as this would increase the size of the data model a lot, so detailed log information is not available in my sample report.

The whole PowerBI template is available for download on my GitHub site: https://github.com/gbrueckl/Azure.DataFactory.PowerBIMonitor

Hello,

I want to get in touch with you. I need some help regarding this report.

Please let me know your skype id or phone number so that we can talk.

Thanks for your help.

you can just drop me an email or use the contact form at http://wordpress.gbrueckl.at/about/

-gerhard

Hi Gerhard, do you have a report that can run against ADF V2?

Hi Chris,

unfortunately the REST APIs are fundamentally different from V1 and V2 hence it is not easily possible to build a similar report for V2

sorry,

-gerhard

I dont see the option – ‘you can use PowerBI to query the API using Get Data –> From Web’

Does this document apply to the current version? I am trying to avoid Monitor & Manage option and still be able to see the Pipeline activity. I tried to use the Azure Log content pack but never succeeded. Any help is appreciated…Thanks

I just checked in the latest version of PBI Desktop – if you go to Get Data in the ribbon, there is the option “Web” – simply build the correct URL as described in the post and paste it there.

the whole blog post is for Azure Data Factory Version 1! it does not work with ADF v2 as it uses a different API.

I am working on a monitoring solution for Azure Data Factory v2 using Power BI but I have not ETA yet

-gerhard

Hi, Any updates on ETA for solution for Azure Data Factory v2

Hi Shalabh,

unfortunately ADFv2 does not provide the status of a dataset for each slice as it was provided in ADFv1. Therefore the monitoring solution currently would/can not work the same way as in ADFv1. I did some prototyping already but could not find a proper way to do monitoring using the information that is provided by the API.

If I come up with a proper solution for ADFv2, I will write a dedicated blog post about it

regards,

-gerhard

So you are saying that the current PowerBI workbook only works with V1 of data factory. If that’s the case, I noticed that the URLs for the management API are very similar if not identical however, I am unable to get them to work in the Power BI workbook. Any ideas on how I should re-format the URL to access datasets, pipelines or activities?

Hi Gerhard,

I tried to use the method you have shared to monitor my pipelines in ADF but i am facing the following issues :

a. I am able to fetch only 35 pipelines and 100 data sets.

b. Pipelines and data sets fetched are not in a particular order ,it is just picking them in a random order.

Actually the solution I am working on is having approximately 250 pipelines and 1000 datasets.

Is there any way to pull all of them in powerbi that to in a particular order as i have to perform some operations on the data fetched.

Regards

Aditya

Hi,

Well, by default the ADF API only returns 100 elements. If you have more than 100 elements (e.g. datasets), then the API returns a “nextLink” property containing a link where you get the next 100 elements and so on. Here is the M-Code that does this recursively:

let

ReadRestAPIRecursive = (

URL as text,

optional NextLinkName as text

) as list =>

let

//URL = “https://management.azure.com/subscriptions/9b58aada-e077-4b89-a95e-85d0cd66b941/resourcegroups/ewCustomerAnalyticsHub/providers/Microsoft.DataFactory/datafactories/ewCAH-DataFactory/datasets/DW_Stage_Responsys_Complaint/slices?start=2014-12-31&end=2017-01-05&api-version=2015-10-01”,

//NextLinkName = null,

NextLink = if NextLinkName = null then “nextLink” else NextLinkName,

ApiResult = Json.Document(Web.Contents(URL)),

NextLinkUrl = try Record.Field(ApiResult, NextLink) otherwise null,

CombinedResults = if NextLinkUrl = null then {ApiResult} else List.Combine({{ApiResult}, ReadRestAPIRecursive(NextLinkUrl, NextLink)}) in

CombinedResults

in

ReadRestAPIRecursive

This should solve your issue

I will also update the template on GitHub accordingly!

Kind regards,

-gerhard

I just updated the PowerBI template in the GitHub repository to do the recursive calls and load all available elements!

Hi Gerhard,

any ideas for a proper solution for ADFv2? There isnt much I have found on the internet to get this type of process status dashboard.

Hi andy,

the reason why this does not exist for ADFv2 yet is, that ADFv2 does not have the concept of a dataset slice-status. A status (success, failed) only exists on pipeline and trigger level. So you would need to check the pipeline/trigger and its parameters (where you have the information about which slice is processed) and then traverse this information down to the dataset. This is very complex and not really feasible considering nested pipelines, etc.

the closest you can get is using Azure Log Analytics where you can filter on pipeline parameters to more or less achieve what I used to do in ADFv1

regards,

-gerhard

hi, I am trying to add a different request with method as Post

https://management.azure.com/subscriptions/{SubscriptionID}/resourcegroups/{ResourceGroupName}/providers/Microsoft.DataFactory/datafactories/{DataFactoryName}/activitywindows?api-version={api-version}

and trying to get the response in powerbi, however it fails with wrong method error. What needs to be changed ?

I need the response from ListActivityWindows (without input the dataset name) https://docs.microsoft.com/en-us/rest/api/datafactory/v1/data-factory-data-factory#request-4

So the functions I provided all use a GET request. However, it is also possible to invoke POST requests

here is an example: https://blog.crossjoin.co.uk/2014/04/19/web-services-and-post-requests-in-power-query/

or here https://social.msdn.microsoft.com/Forums/en-US/029fed1d-56fe-476a-9d7d-f367c5036b6e/posting-xml-to-a-restful-service?forum=dataexplorer

basically you would have to put the request body into the content-parameter of the Web.Contents function so it will use POST instead of GET

Is it mandatory to pass the authentication parameters in header, though the same is not needed for GET ?

The authentication parameters are always required, regardless of the type of the request (GET, POST, …)

otherwise everyone could call your API 🙂

Yes, I understand that.. I will be more clear. While using your template, I am able to retrieve the dataset details but my requirement is that, it also includes activities and hence I needed the ‘list activity windows’. However, when I changed the method and uri in your script, it still gives me error, as it’s not accepting the authentication described in this post. Is this natural for POST while it accepts the same for GET ?

I just did some tests and I also run into the following error: “DataSource.Error: Web.Contents with the Content option is only supported when connecting anonymously.”

After some research I found out that POST requests actually do not support any authentication except for anonymous so I am afraid this will not work natively.

The only workaround would be to write a custom connector which I think will be a lot of overhead

Sorry!

I was able to pass the manually pass the bearer token and leave the query authentication as anonymously. This works. However I struggle doing this inside of a function to expand the data set larger than 100 records. I am thinking of pushing the challenge to an azure function. Allowing PowerBI to authenticate against a GET to the function and the function compile the JSON.

let

AuthKey = “Bearer ” & AuthKey,

url=”https://management.azure.com/subscriptions/

body=”{#(lf) “”lastUpdatedAfter””: “””” “”lastUpdatedBefore””: “”””#(lf)}”,

Source = Json.Document(Web.Contents(url,[

Headers = [#”Authorization”=AuthKey ,

#”Content-Type”=”application/json”],

Content = Text.ToBinary(body)

]

)),

value = Source[value],

Hi Chris,

I was also investigating into this some time ago but I stopped when I realized that there is no concept of tracking slices as it was possible in ADFv1. This makes a lot of things much more complicated but I still think that there are good reasons to implement and ADFv2 monitor in PowerBI – However, it will work fundamentally different to what I did in ADFv1.

You can only monitor Pipeline/Trigger runs but not the status of a dataset itself. The approach is actually the very same as in the PowerBI template that I provide on GitHub. I just did some quick tests and tried to port the logic to ADFv2 and it works just fine – basically only the API URLs are slightly different for v2.

This would be the M-code to e.g. get the pipelines:

let

Source = ReadRestAPIRecursive(“https://management.azure.com/subscriptions/” & SubscriptionID & “/resourcegroups/” & ResourceGroup & “/providers/Microsoft.DataFactory/factories/” & DataFactory & “/pipelines?api-version=” & Date.ToText(APIVersion, “yyyy-MM-dd”)),

#”Union Pages” = Table.FromList(Source, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#”Expand Pages” = Table.ExpandRecordColumn(#”Union Pages”, “Column1”, {“value”}, {“Column1.value”}),

#”Expand Rows” = Table.ExpandListColumn(#”Expand Pages”, “Column1.value”),

#”Expanded Column1.value” = Table.ExpandRecordColumn(#”Expand Rows”, “Column1.value”, {“id”, “name”, “type”, “properties”, “etag”}, {“id”, “name”, “type”, “properties”, “etag”}),

#”Expanded properties” = Table.ExpandRecordColumn(#”Expanded Column1.value”, “properties”, {“parameters”, “folder”}, {“parameters”, “folder”}),

#”Expanded folder” = Table.ExpandRecordColumn(#”Expanded properties”, “folder”, {“name”}, {“folder”})

in

#”Expanded folder”

The M-function “ReadRestAPIRecursive” recursively reads through the API until there is no furhter “nextLink” provided

Authentication worked just fine for me using built-in Organization Account Authentication

No problem. Thanks for your help. Currently, I am using a workaround to get the details of the activity name mapped with dataset availability.

Hi I am getting a 404 not found exeption Datasets

Web.Contents failed to get contents from ‘https://management.azure.com/subscriptions/xxxx…