I recently built a tool that helps debug the MDX script of an Analysis Services cube in order to track down performance issues related to the formula engine. As you probably know, most performance issues out there are caused by poorly or incorrectly written MDX scripts. The tool lets you execute a reference query and highlights the MDX script commands that are actually used when the query runs. It reports the overall timings and how much each additional MDX script command extended the execution time of the reference query. The results can then either be exported to XML for further analysis, e.g. in Power BI, or be used to create a customized version of the MDX script for another set of tests.
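To illustrate the idea of "how long each additional MDX script command extended the execution time", here is a minimal Python sketch. It is not the tool's actual implementation. The function `incremental_timings` and the `measure` callback are hypothetical names, and `measure` is assumed to run the reference query with only the given script commands active and return the elapsed seconds.

```python
from typing import Callable, List, Sequence, Tuple


def incremental_timings(
    commands: Sequence[str],
    measure: Callable[[List[str]], float],
) -> Tuple[float, List[Tuple[str, float, float]]]:
    """Time the reference query against growing prefixes of the script.

    `measure` is a hypothetical callback (an assumption of this sketch):
    it runs the reference query with only the given commands active and
    returns the duration in seconds.
    """
    # Baseline: the reference query with no script commands active.
    baseline = measure([])
    previous = baseline
    rows = []
    for i, command in enumerate(commands, start=1):
        # Activate the first i commands and time the query again.
        total = measure(list(commands[:i]))
        # The difference to the previous run is attributed to this command.
        rows.append((command, total, total - previous))
        previous = total
    return baseline, rows
```

A command with a large added time is a candidate for a closer look, while a command that adds nothing is probably not the bottleneck for this particular query.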

The tool is currently in beta and this is the first official release. It is also the first tool I have written and shared publicly, so please don’t be too severe with your feedback. Just joking, all feedback is good feedback!

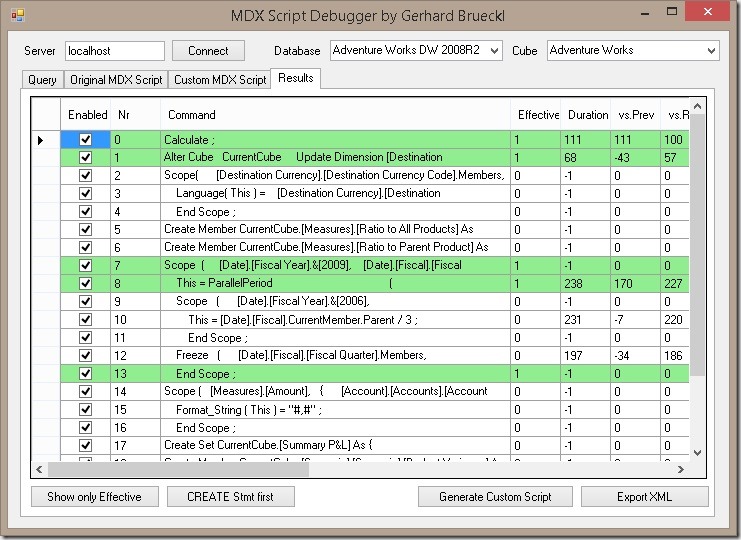

The screenshot shows the results after the reference query has been executed. The green lines are actually used by the query, whereas the others have no impact on the values it returns.

A list of all features, further information and the source code can be found on the project page on CodePlex:

https://mdxscriptdebugger.codeplex.com/

The download is also available there:

https://mdxscriptdebugger.codeplex.com/releases

I am looking forward to your feedback and hope the tool helps you track down performance issues in your MDX scripts!

Hi,

Nice tool, but can’t you already locate the badly performing MDX by using MS Profiler?

Hi Yuri,

SQL Server Profiler does not help you track down Formula Engine (FE) bound performance issues. It can only tell you whether your bottleneck is in the FE or the SE (Storage Engine) in general.

Debugging the FE is very hard, especially if you have a long MDX script with nested calculations, which in my experience is the root cause of 95% of all performance issues.

-gerhard