Data is the daily bread-and-butter for any analyst. In order to provide good results you also need good data. Sometimes this data is very well prepared beforehand and you can use it as it is but it is also very common that you need to prepare and transform the data on your own. To do this Microsoft has introduced Power Query (on tool of the Power BI suite). Power Query can be used to extract, transform and load data into Excel and/or Power Pivot directly.

When using any data you usually know what the data looks like and what to expect from certain columns – e.g. a value is delivered as a number, a text contains exactly 4 characters, etc.

Though, sometimes this does not apply for all rows of that dataset and your transformation logics may cause errors because of that. In order to avoid this and still have a clean data load you need to handle those errors. Power Query offers several options to this which I will elaborate in this post.

This is the sample data I will use for the following samples:

| A | B | C |

| 1 | 4 | AXI23 |

| 2 | 5 | TZ560 |

| NA | 6 | UP945 |

we will perform simple transformations and type casts on this table to generate some errors:

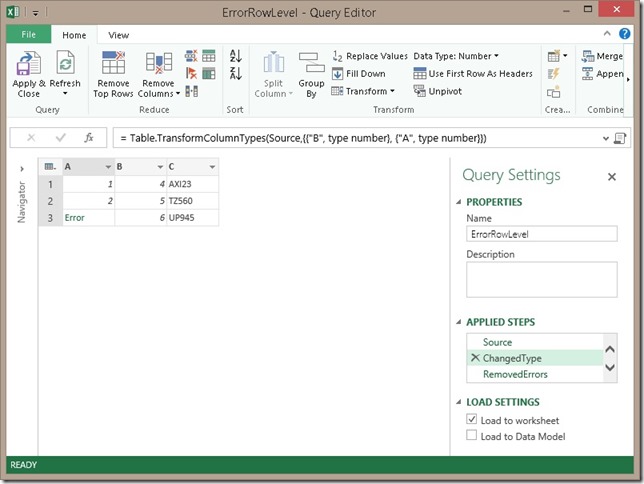

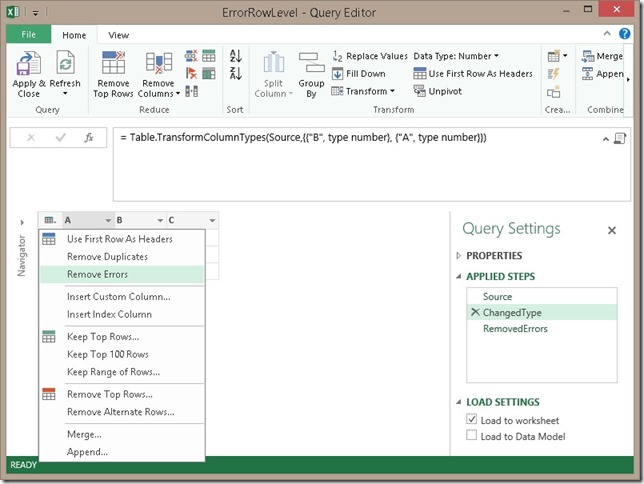

Error-handling on row-level

This is the easiest way of handling errors. Whenever a transformation causes an error, we can simply remove the whole row from the result set:

This will generate the following result table:

| A | B | C |

| 1 | 4 | AX312 |

| 2 | 5 | TZ560 |

As you can see, the third row was simply removed. This is the easiest way on how to remove errors from your result set but of course this may not be what you want as those removed rows may contain other important information in other columns! Assume you want to calculate the SUM over all values in column B. As we removed the third row we also removed a value from column B and the SUM is not the same as without the error-handling (9 vs. 15)!

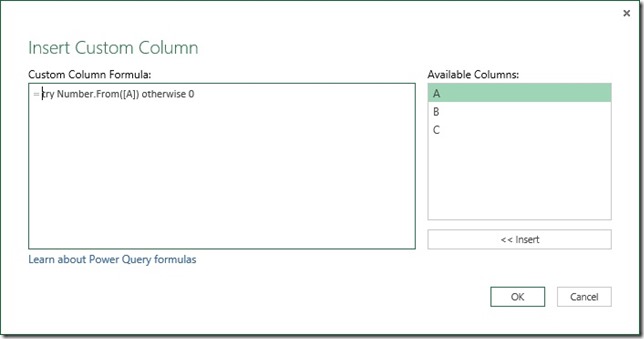

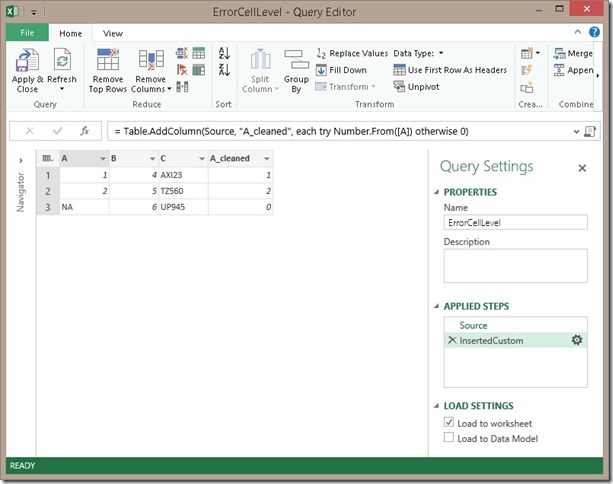

Error-handling on cell-level

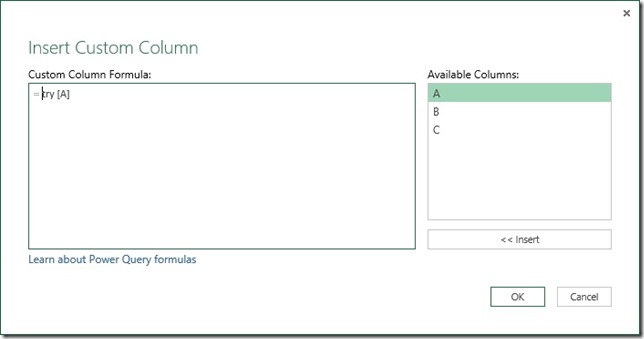

As we now know that column A may result in an error, we can handle this error during our transformation steps. As “NA” could not be converted to a number we see Error as result for that column. Clicking on it gives use some more details on the error itself. To handle this error we need to create a new calculated column where we first check if the value can be converted to a number and otherwise return a default numeric value:

The M-function that we use is “try <expressions to try> otherwise <default if error>” which is very similar to a try-catch block in C#. If the expression causes an error, the default will be used instead. Details on the try-expression can be found in the Microsoft Power Query for Excel Formula Language Specification (PDF) which can be found here and is a must read for everyone interested in the M language.

We could further replace our column A by the new column A_cleaned to hide this error handling transformation.

| A | B | C | A_cleaned |

| 1 | 4 | AXI23 | 1 |

| 2 | 5 | TZ560 | 2 |

| NA | 6 | UP945 | 0 |

Error-handling on cell-level with error details

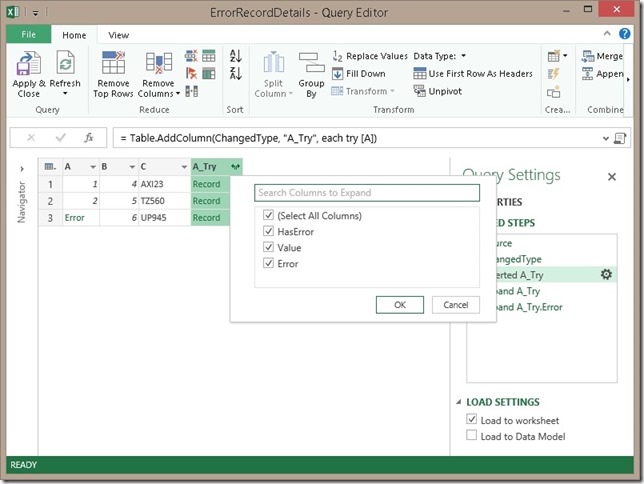

There may also be cases where it is OK to have one of this errors but you need/want to display the cause of the error so that a power user may correct the source data beforehand. Again we can use the try-function, but this time without the otherwise-clause. This will return a record-object for each row:

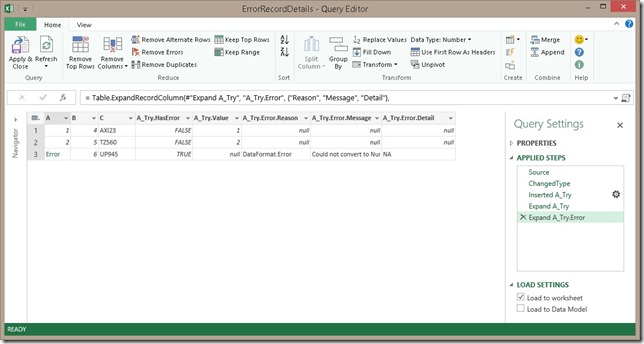

After expanding the A_Try column and also the A_Try.Error column we will get all available information on the error:

| A | B | C | A_Try.HasError | A_Try.Value | A_Try.Error.Reason | A_Try.Error.Message | A_Try.Error.Detail |

| 1 | 4 | AXI23 | FALSE | 1 | |||

| 2 | 5 | TZ560 | FALSE | 2 | |||

| 6 | UP945 | TRUE | DataFormat.Error | Could not convert to Number. | NA |

As you can see we get quite a lot of columns here. We could e.g. use A_Try.HasError to filter out error rows (similar to error-handling on row-level) or we could use it in a calculated column to mimic error-handling on cell-level. What you want to do with all the information is up to you, but in case you don’t need it you should remove all unnecessary columns.

Downloads:

Power Query Error Handling Workbook: Power Query Error Handling.xlsx

Pingback: Handling Added Or Missing Columns In Power Query | Chris Webb's BI Blog

Pingback: Handling Added Or Missing Columns In Power Query - SQL Server - SQL Server - Toad World

Hey guys. I have a bit of a problem and I hope you can help. So I’ve used the ‘Each Try’ function to drill down into data capture errors from a database imported from smartsheets. It works beautifully when run on my desktop version, but as soon as I publish it to PowerBI.com, the dashboards show blanks. I have tried everything and it still does not work published. Any help will be appreciated.

does it load any rows at all?

or are you just missing the rows which caused an error where the Try-logic is not working as expected?

Hello! I have question regarding try otherwise. Is there any catch with it if I using it with Folder.Files? Here is my code :

Source = try Folder.Files(fnPath(1)&”LD_Data”)

otherwise

try Folder.Files(fnPath(2)&”LD_Data”)

otherwise Folder.Files(fnPath(0)&”LD_Data”),

This not working. It stops on first try BUT THIS code

Source = try Excel.Workbook(File.Contents(fnPath(1)&”Test_Data\OldTest.xlsx”), null, true)

otherwise

try Excel.Workbook(File.Contents(fnPath(2)&”Test_Data\OldTest.xlsx”), null, true)

otherwise Excel.Workbook(File.Contents(fnPath(0)&”Test_Data\OldTest.xlsx”), null, true),

works. Can you tell me why? It’s driving me crazy 😉

well, actually they should both work.

I don’t see any reason why it would not work but I would need to take a look at it myself

I will keep you updated

OK, so I just ran a little test with this code:

= try Folder.Files(“C:\MyNonExistingfolder”)

it returns a record with [HasError] = false and [Value] = {Table}

However, the {Table} then throws an error

This looks very much like a bug to me or at least a non-intuitive behavior

but as the example shows, it depends on the return-value o the actual function and whether the [HasError] is set correctly

I will ask the Product Team about this behavior

ok, this is what I got to work around this issue:

let

Folder.TryFiles = (path as text) =>

let

FolderExists = not (try Table.Buffer(Table.FirstN(Folder.Files(path), 1)))[HasError],

Files = if FolderExists = true then Folder.Files(path) else error Error.Record(“Path not found”, “The path ‘” & path & “‘ could not be found!”, null, “Folder ” & path & ” was not found!”)

in

Files,

try_Folder_Files= try Folder.TryFiles(“C:\EmptyFolder”) otherwise Folder.TryFiles(“C:\MyExistingFolder”)

in

try_Folder_Files

We need to use Table.Buffer in order to force an evaluation of the expression and see if it returns an error.

If it returns an error, we also return an error so TRY can proceed with the next OTHERWISE expression, else we return the files

hope that helps

-gerhard

Thank you very match. This worked perfectly. My solution was to move everything to share point. But this led to another problem – application got very slow. each refresh takes up to 2 min. it is normal or the problem lies in my query structure?

I would guess this is because files on SharePoint are retrieved in a different way (compared to local files).

also, SharePoint is a web service which is usually more chatty.

It is pretty hard to tell if this is related to your query structure but I agree that 2 minutes is quite long – well, depending on the amount of files that you load

Dear all, Thanks for clarifications. I just want to add that in Power BI errors generated by above bug cannot be removed. I have reported it to Microsoft and they accepted an issue for resolution

Hi Gerhard,

Thanks for the tips. I have a question regarding on the converting issue. My message is as following:

DataFormat.Error: We couldn’t convert to Number.

Details:

1995DC

The value “1995DC” is a Product ID I am working with. My problem is, I don’t want to convert this into numbers, I want it to stay as texts for later merging purpose. In addition, I have already convert all data under this Product ID column from “general” to “text”. When I transform the data in Power Query, it still tries to convert to numbers. Is there any way I can ask the system not to convert the data to number?

Thanks,

William

Hi William,

usually, when you import data with the wizard, it automatically creates a second step for you which changes the type of each column to what PQ guesses the type might be. Can you check, on the right side, where all your single PQ steps are listed, there should be the first step called “Source” followed by a second one called “Change Type”. I would assume that the conversion to number is done there already and then you run into another issue when you try to convert the error back to text

regards,

-gerhard

Hi Gerhard, I have the same problem but my data in the column is already “Whole Numbers” type when imported to PQ. I have filtered the column to remove empty cells, and the problem persists. Do you have any suggestion?

what exactly does your data look like?

can you share a sample?

There are 4 columns of data: column 1 is the attribute, column 2 is the Customer Number, column 3 is the Date, and column 4 is the Qty. I want to Pivot Column using the attribute names from column 1 over the Qty (Type Whole Numbers). I just tried changing Customer Number in column 2 to Type Text and this resolves the issue. I thought the problem was with the Qty column …. well, I learned something new today. Thanks.

Hi Gerhard,

Whenever I use the Left Outer Join type of Merge in my Power Query 2010 Excel add-on, the number of rows in the merged table is MORE than the number of rows of the original “left” table. My data has 40k+ rows.

Do you have any idea what seems to be causing this error?

Thanks, a lot,

Bernadette

Hi Bernadette,

this is probably because the dataset that you join to your existing dataset contains multiple rows for the same value.

regards,

-gerhard

Hi Gerhard,

Many many thanks for your response. ?

Is there any way to fix this? So that the existing data set will pick up only the 1st match?

Regards,

Bernadette

well, you would need to filter the joined data set first to only contain one row for each value

if the whole rows are duplicate, you can simply use “Remove Duplicates”

otherwise you would need to find a more advanced logic which removes your duplicates but thats very much up to you how you design this as I do not know your business requirements

Thanks for sharing this information.

Thank you for this, it is very helpful for a new learner! I am importing files from folder using PowerQuery, and my dataset has over 100 columns. About 30+ have user-entered messy data, so errors can be in any of these columns (next update will be to implement more data validation…). But for now, is there a way to use Try() to scan multiple columns for errors or do I need to create individual “A_cleaned” accessory columns for each potential problem column? My end goal is to create a sheet with the file names and the error values for my team to go back and fix the issues in the source files before I re-run the query.

I would probably write a function that applies the try/otherwise to each column individally and then combines the result in a single column again to tell you which column caused an error for each row

Ideally this is done automatically – basically reading all existing column names, dynamically generating the try/otherwise logic, applying at and removing the old columns again

this may be a good starting point to see how this could be done dynamically:

https://excel.solutions/2019/07/power-query-data-transformation-of-dynamic-columns/

Pingback: [solved] Using PowerQuery how can you ignore a scheduled refresh if the API you’re calling is unavailable? – Flowpoint | Blog