I recently found myself in a situation where I had to optimize a Spark query. Coming from a SQL world originally I knew how valuable a visual representation of an execution plan can be when it comes to performance tuning. Soon I realized that there is no easy-to-use tool or snippet which would allow me to do that. Though, there are tools like DataFlint, the ubiquitous Spark monitoring UI or the Spark explain() function but they are either hard to use or hard to get up running especially as I was looking for something that works in both of my two favorite Spark engines being Databricks and Microsoft Fabric.

During my research I found these two excellent blog posts (1, 2) by Semyon Sinchenko who was already dealing with Spark execution plans and how to extract valuable information from them. I took a lot of inspiration and ideas from there to build my show_plan function.

In the end I wanted to achieve three goals:

- and easy to use function that can be used with any Spark Dataframe (including SparkSQL)

- a lightweight setup that works with all Spark engines

- an interactive, visual representation of the execution plan (still working on the interactive part)

Installation as of now is via sc.addPyFile from my GitHub repository Fabric.Toolbox. For now thats fine I think and if the function gets more popular I will probably create a PIP package for it.

sc.addPyFile("https://raw.githubusercontent.com/gbrueckl/Fabric.Toolbox/main/DataEngineering/Library/VisualizeExecutionPlan.py")

from VisualizeExecutionPlan import show_plan

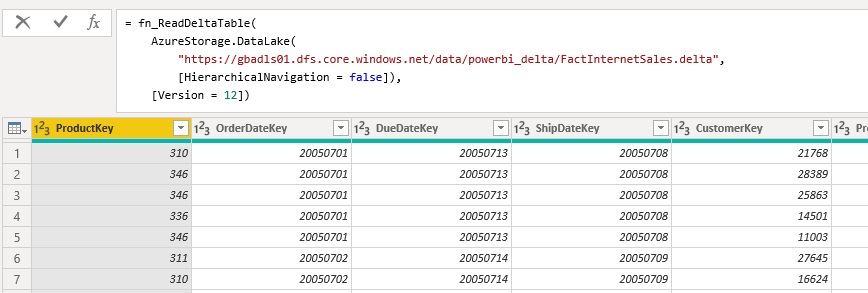

Next would be the definition of your Spark dataframe. As mentioned above, you can use any Spark dataframe regardless of how you created it (PySpark, SQL, …). For simplicity and transparency I used a SQL query in my example:

my_df = spark.sql("""

SELECT fs.*, dc.CurrencyName, ds.StoreName

FROM contoso.factsales_part fs

INNER JOIN contoso.dimcurrency dc

ON fs.CurrencyKey = dc.CurrencyKey

LEFT JOIN contoso.dimstore ds

ON fs.StoreKey = ds.StoreKey

WHERE fs.DateKey >= to_timestamp('2008-06-13', 'yyyy-MM-dd')

""")

display(my_df)

You can now simply pass the variable that represents your dataframe into the show_plan function:

show_plan(my_df)

As you can see, the function is very easy to install and use, its basically just 3 lines of code to give you a visual representation of your execution plan!

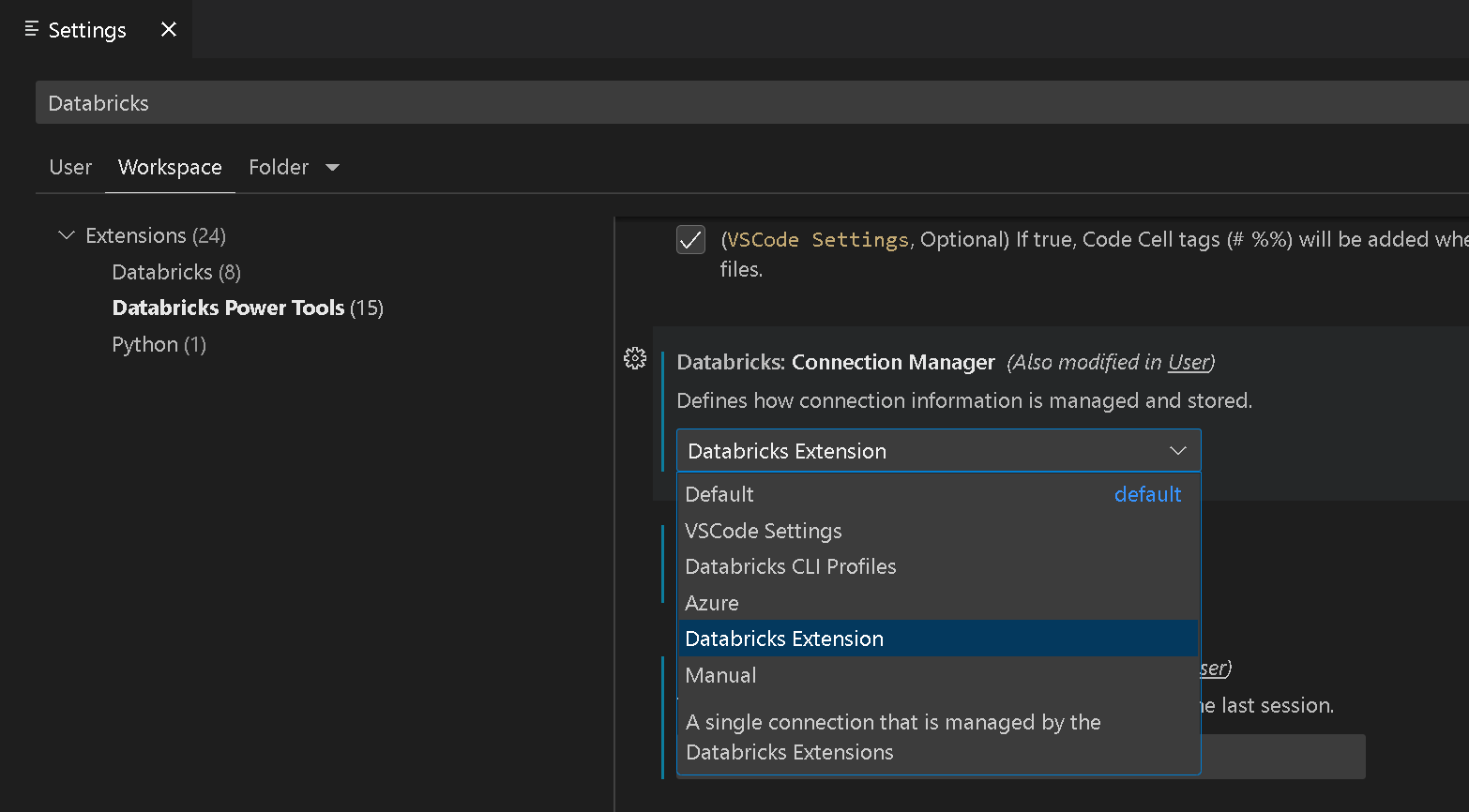

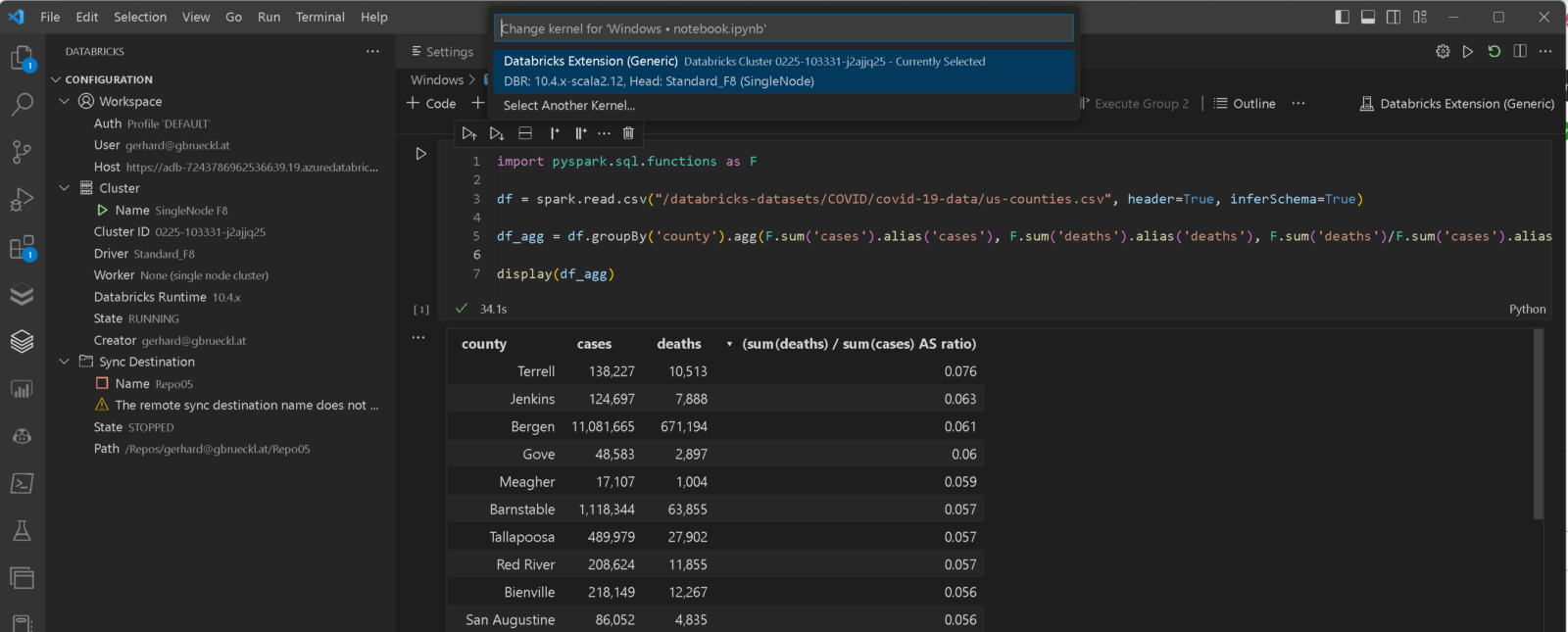

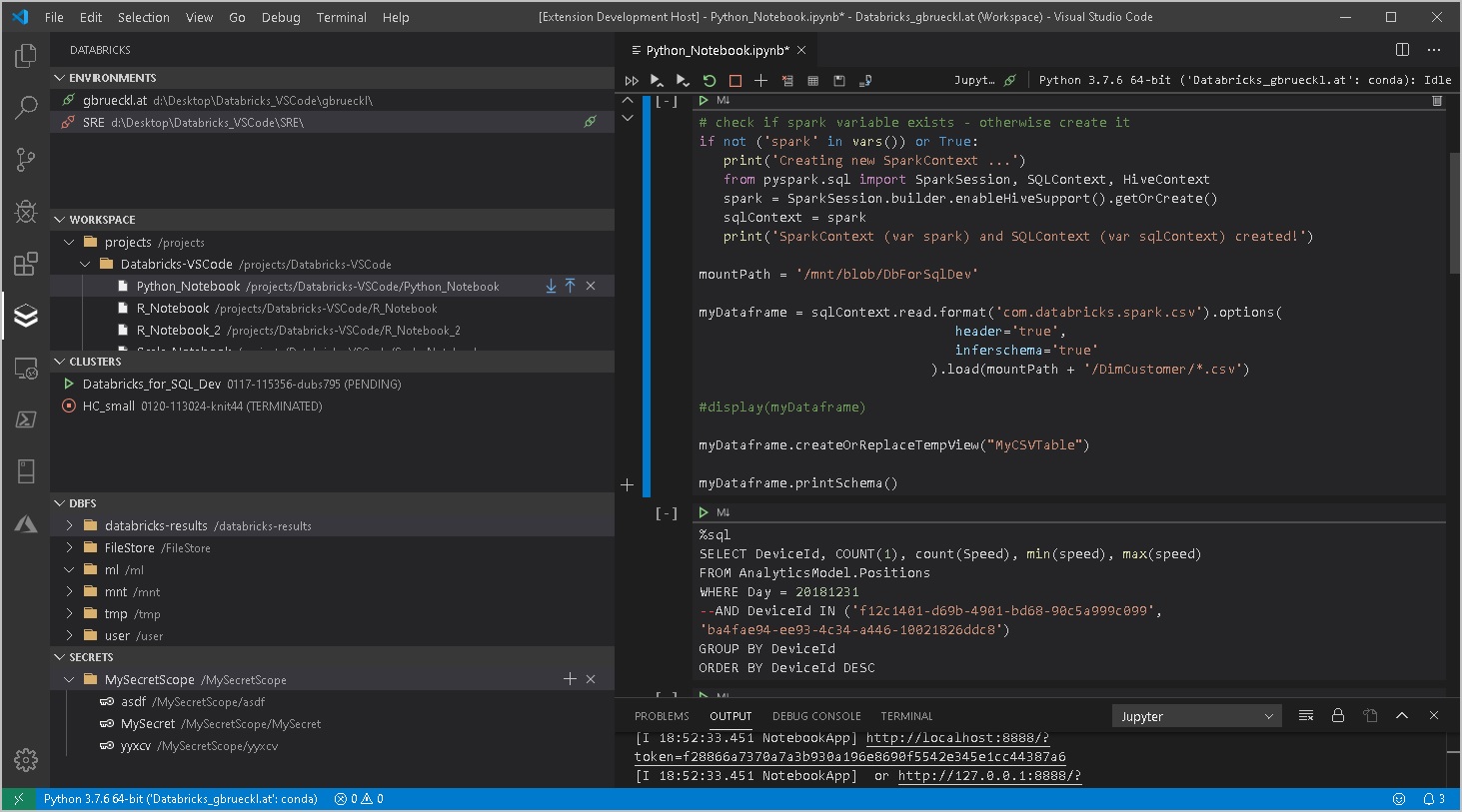

For Databricks, the code is slightly different to missing preinstalled libraries and limited capabilities of display() function. First we need to install graphviz using %sh and %pip. This is also partially documented in the official Databricks documentation.

%sh sudo apt-get install -y python3-dev graphviz libgraphviz-dev pkg-config

Instead of pygraphviz as described in the docs, we install the regular graphviz package:

%pip install graphviz

Adding the library and creating the test dataframe is the same as in Fabric.

sc.addPyFile("https://raw.githubusercontent.com/gbrueckl/Fabric.Toolbox/main/DataEngineering/Library/VisualizeExecutionPlan.py")

from VisualizeExecutionPlan import *

my_df = spark.sql("""

SELECT fs.*, dc.CurrencyName, ds.StoreName

FROM contoso.factsales_part fs

INNER JOIN contoso.dimcurrency dc

ON fs.CurrencyKey = dc.CurrencyKey

LEFT JOIN contoso.dimstore ds

ON fs.StoreKey = ds.StoreKey

WHERE fs.DateKey >= to_timestamp('2008-06-13', 'yyyy-MM-dd')

""")

display(my_df)

Finally we need to pass the displayHTML function as a second parameter to the show_plan function:

show_plan(my_df, displayHTML)

Information for the final output is take from the physical execution plan and is enriched with data from the optimized logical execution plan which for example contains the estimated sizes. Things like the type of join (e.g. BroadcastHasJoin) is taken from the physical plan.

It is worth mentioning that the sizes are based on the table statistics and become unreliable after joins are involved. However, I think they still play in import role in performance tuning so it made sense to me to also include them in the visual representation of the plan.

There is still a lot of room for improvements like installation via PIP, interactive visualization, highlighting of important things like partition filters, etc. and I could not yet test all potential scenarios (I mainly used Delta Lake tables for my tests). So I would really appreciate any feedback to make the show_plan function more robust and user friendly. Feedback is best provided via the underlying GitHub repository Fabric.Toolbox.