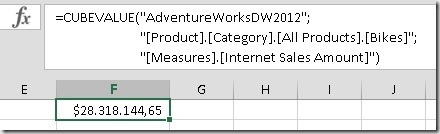

Two weeks ago, at the German SQL Server Conference 2015, I attended Peter Myers’ session on Mastering the CUBE Functions in Excel. (PS: Peter is also speaking at our upcoming SQLSaturday #374 in Vienna next week and at PASS SQLRally in Copenhagen the week after.) After his session we discussed the topic further and shared our experiences of using Excel’s CUBE-functions to build nice dashboards with native Excel functionality that also work with e.g. Excel Services. It’s always great to exchange ideas with people who share the same passion for the same topic!

One thing we both agreed is currently missing is a way to get the MDX UniqueName of something that is selected in a slicer, in a filter or simply in a cell using the CUBEMEMBER-function. I once used a special cube measure, created in the MDX script, which returned the UniqueName of a given member that was selected together with this special measure. For this to work with Excel you need to know how Excel builds the MDX when querying cube values using the CUBEVALUE-function. Here is a little example:

This produces the following MDX query:

    SELECT
    {
        (
            [Measures].[Internet Sales Amount],
            [Product].[Category].&[1]
        )
    } ON 0
    FROM [Adventure Works]
    CELL PROPERTIES VALUE, FORMAT_STRING, LANGUAGE, BACK_COLOR, FORE_COLOR, FONT_FLAGS

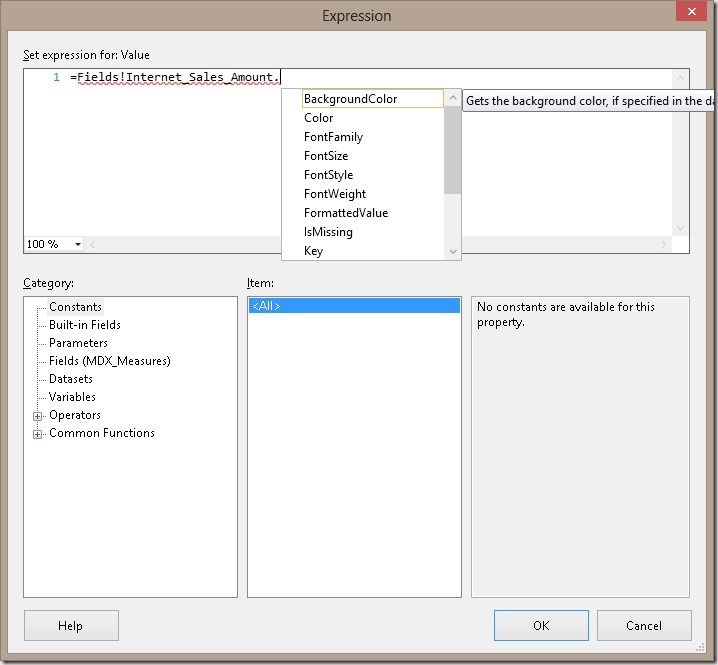

So it basically creates a tuple that contains everything you pass into the CUBEVALUE-function as a parameter. Knowing this, we can create a calculated measure that returns the MDX UniqueName of this tuple using the MDX TupleToStr()- and AXIS()-functions:

    MEMBER [Measures].[Excel TupleToStr] AS (
        TupleToStr(axis(0).item(0))
    )

Replacing the [Measures].[Internet Sales Amount] of our initial CUBEVALUE-function with this new measure would return this to Excel:

    ([Measures].[Internet Sales Amount],[Product].[Category].&[1])

OK, so far so good, but this is not really useful yet: you need to hardcode the member’s UniqueName into the CUBEVALUE-function anyway, so you already know the UniqueName.

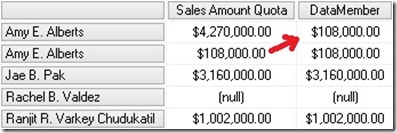

However, this is not the case if you are dealing with Pivot Table Page Filters and/or Slicers! You can simply refer to them within the CUBEVALUE-function, but you never get the UniqueName of the selected item(s). Well, at least not directly! But you can use the approach described above, with a special MDX calculated measure, to achieve this, as I will demonstrate below.

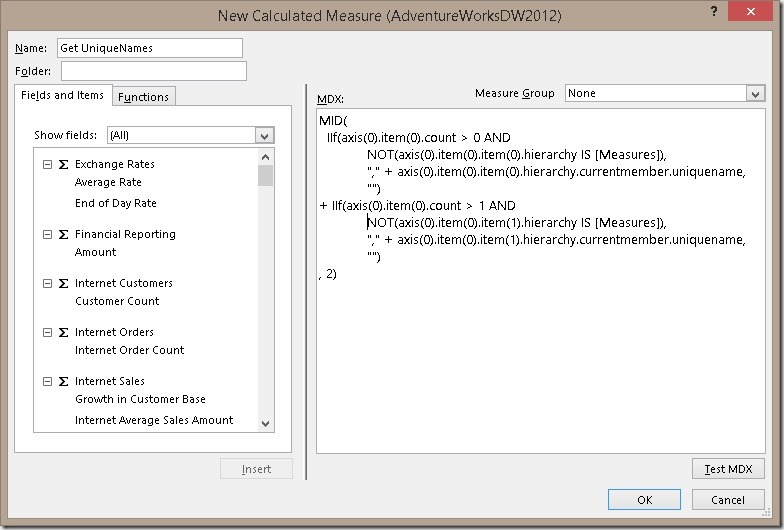

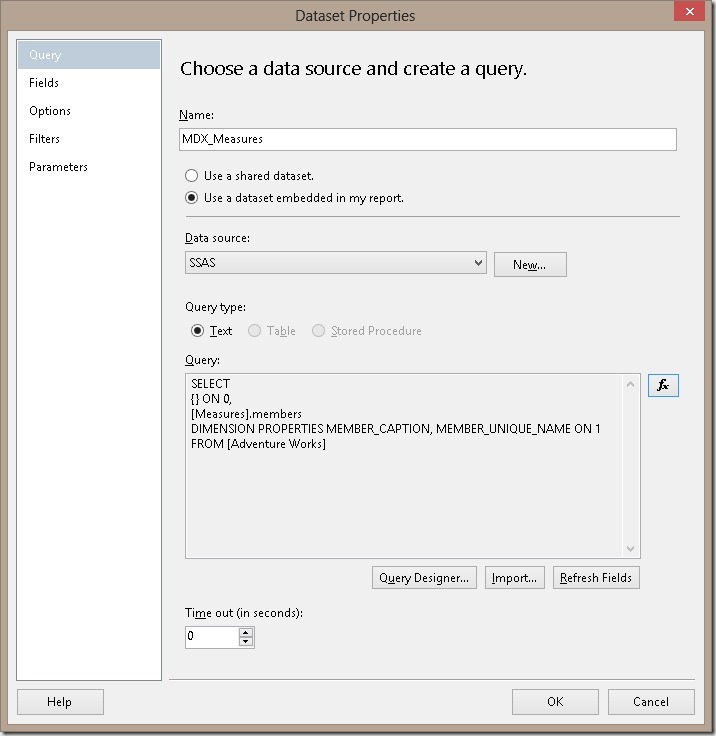

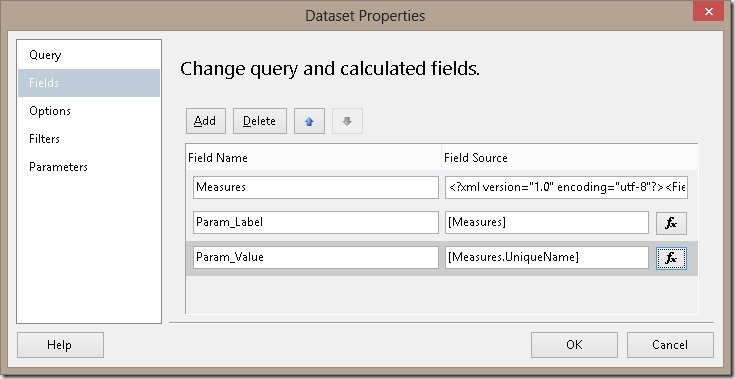

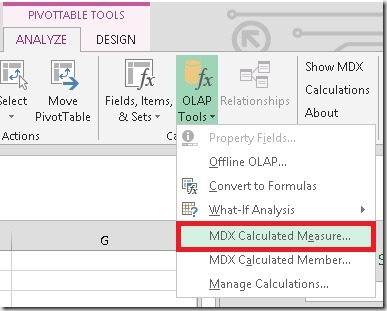

Calculated measures can only be created using the Pivot Table interface, but once created they can also be used in CUBE-functions. So the first thing you need to do is create a Pivot Table and add a new MDX Calculated Measure:

!Caution! some weird MDX coming !Caution!

You may wonder why such complex MDX is necessary and what it actually does. Based on the example MDX query that Excel generates (as shown above), this is a universal MDX expression that returns the MDX UniqueName of any other member that is selected together with our measure using the CUBEVALUE-function. It also removes the UniqueName of the measure itself so the result can be used again with any other measure, e.g. [Internet Sales Amount].
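To make the string handling easier to follow, here is a minimal Python sketch of what the measure computes for a single tuple. It is only an illustration, not part of the Excel solution: the function name `selected_unique_names`, the `slots` parameter and the example member names are my own assumptions, and the real measure reads `hierarchy.currentmember` on the server instead of a Python list.

```python
MEASURES_PREFIX = "[Measures]."  # assumption: measures are recognised by this prefix


def selected_unique_names(tuple_members, slots=3):
    """Join the UniqueNames of all non-measure members of one tuple.

    tuple_members: UniqueNames in the order they were passed to CUBEVALUE.
    slots: how many tuple positions are checked (one IIf per position in the MDX).
    """
    text = ""
    for position in range(slots):
        # Mirrors: IIf(count > position AND NOT(hierarchy IS [Measures]), "," + uniquename, "")
        if (len(tuple_members) > position
                and not tuple_members[position].startswith(MEASURES_PREFIX)):
            text += "," + tuple_members[position]
    # Mirrors MID(text, 2): drop the leading comma (MID is 1-based)
    return text[1:]


# Illustrative member names only
print(selected_unique_names([
    "[Measures].[Excel TupleToStr]",
    "[Product].[Category].&[1]",
    "[Date].[Calendar Year].&[2008]",
]))
# prints: [Product].[Category].&[1],[Date].[Calendar Year].&[2008]
```

Because the measure is skipped rather than removed by position, it does not matter where in the CUBEVALUE-function it is passed.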

The reason why the MDX is rather complex is that Excel may group similar queries and execute them as a batch, i.e. as one query, to avoid too many executions which would slow down the overall performance. So we cannot just reference the first element of our query, as it may belong to any other CUBEVALUE-function. This MDX deals with all these kinds of issues.

The MDX above allows you to specify only two additional filters but it may be extended to any number of filters that you pass in to the CUBEMEMBER-function. This would be the general pattern:

    MID(
      IIf(axis(0).item(0).count > 0 AND
            NOT(axis(0).item(0).item(0).hierarchy IS [Measures]),
          "," + axis(0).item(0).item(0).hierarchy.currentmember.uniquename,
          "")
      + IIf(axis(0).item(0).count > 1 AND
            NOT(axis(0).item(0).item(1).hierarchy IS [Measures]),
          "," + axis(0).item(0).item(1).hierarchy.currentmember.uniquename,
          "")
      + IIf(axis(0).item(0).count > n AND
            NOT(axis(0).item(0).item(n).hierarchy IS [Measures]),
          "," + axis(0).item(0).item(n).hierarchy.currentmember.uniquename,
          "")
    , 2)
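Since every additional filter just adds one more IIf block, the expression can also be generated instead of typed by hand. The following Python helper is a rough sketch of that idea; the function name `build_unique_name_expression` and its `slots` parameter are hypothetical, and the generated text follows the pattern above without having been validated against a cube.

```python
def build_unique_name_expression(slots):
    """Return the MDX expression that checks tuple positions 0 .. slots-1."""
    if slots < 1:
        raise ValueError("slots must be at least 1")
    parts = []
    for i in range(slots):
        # One IIf block per tuple position, skipping the measure itself
        parts.append(
            f"IIf(axis(0).item(0).count > {i} AND\n"
            f"      NOT(axis(0).item(0).item({i}).hierarchy IS [Measures]),\n"
            f'    "," + axis(0).item(0).item({i}).hierarchy.currentmember.uniquename,\n'
            f'    "")'
        )
    body = "\n  + ".join(parts)
    # MID(..., 2) removes the leading comma of the concatenated string
    return f"MID(\n  {body}\n, 2)"


# Measure plus two filters means three tuple positions to check
print(build_unique_name_expression(3))
```

The printed text can then be pasted into the MDX Calculated Measure dialog. Remember that the measure itself occupies one position, so allow for one slot more than the number of filters you want to pass in.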

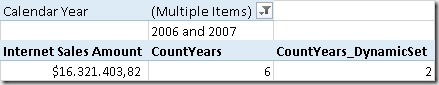

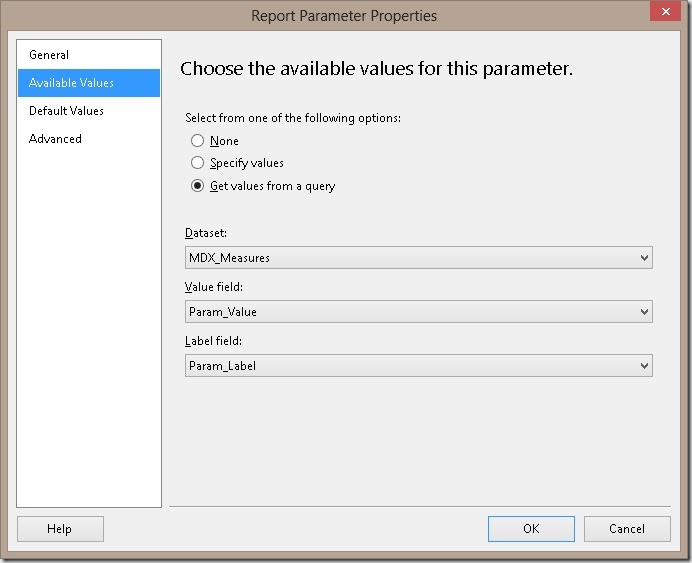

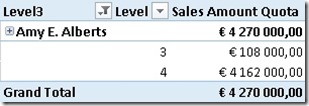

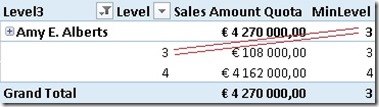

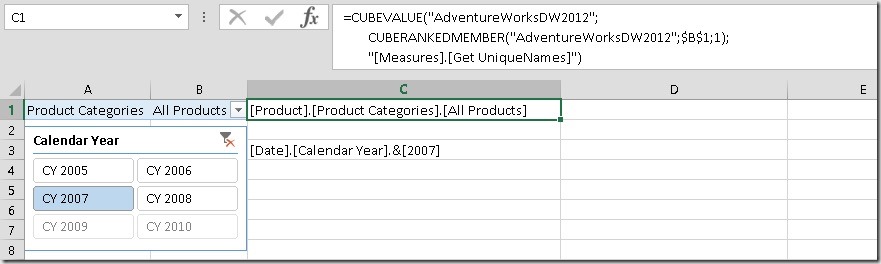

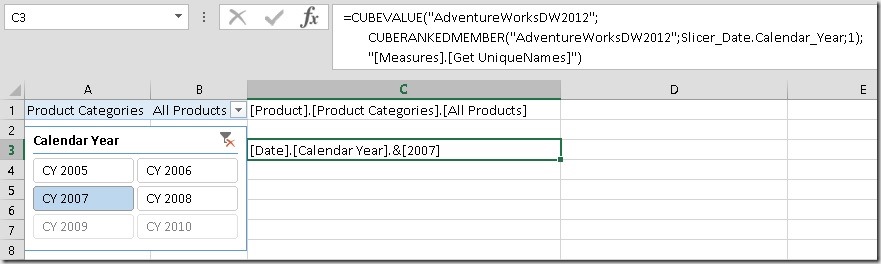

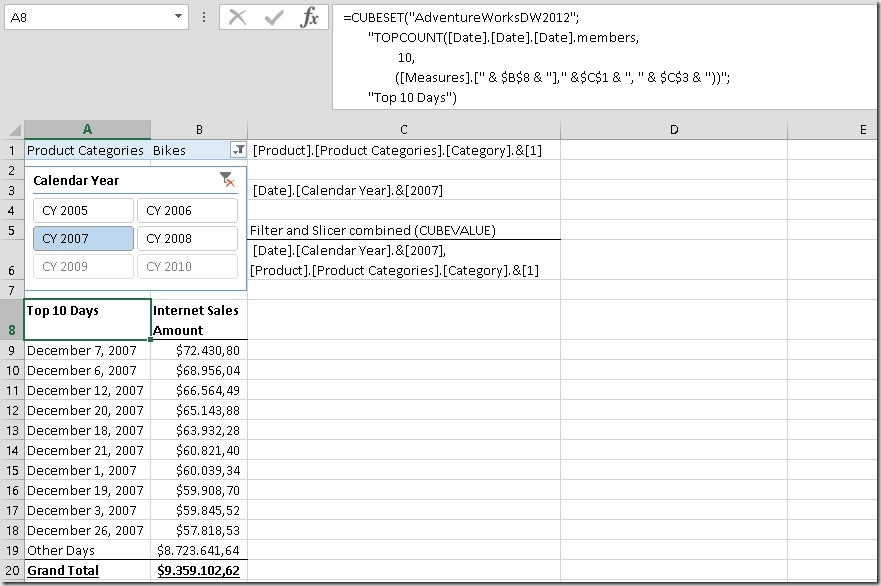

After creating this measure we can now use it in our CUBE-functions in combination with our filters and slicers:

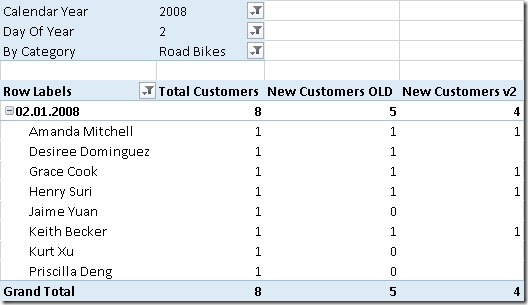

You may have noticed that I had to use CUBERANKEDMEMBER here. This is because filters and slicers always return a set, and if we passed a set to our CUBEVALUE-function, a different MDX query would be generated which would not allow us to extract the individual UniqueNames of the selected items using the approach above (or any other MDX I could think of). So this approach currently only works with single selections! I hope that the Excel team will implement a native function to extract the UniqueName(s) of the selected items in the future to make this workaround obsolete!

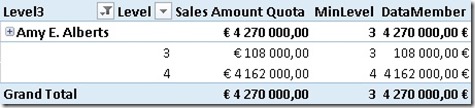

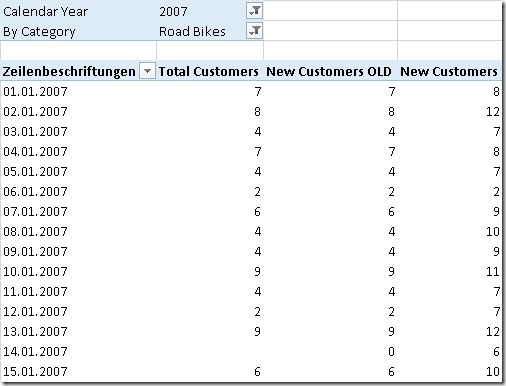

Once we have our UniqueName(s), we can use them in e.g. a CUBESET-function to return the Top 10 days for a given product group (filter) and the selected year (slicer):

And that’s it!

So why is this so cool?

- It works with SSAS (multidimensional and tabular) and Power Pivot as Excel still uses MDX to query all those sources. It may also work with SAP HANA’s ODBO connector but I have not tested this yet!

- It does not require any VBA which would not work in Excel Services – this solution does!

- The calculation is stored within the Excel Workbook so it can be easily shared with other users!

- There is no native Excel functionality which would allow you to create a simple Top 10 report which works with filters and slicers as shown above or any more complex dynamic report/dashboard with any dynamic filtering.

So no more to say here – Have fun creating your interactive Excel web dashboards!

Download sample Workbook: Samples.xlsx

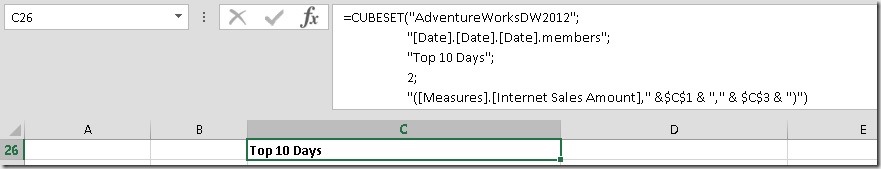

Note: You may also rewrite any TOPCOUNT expression and use the 4th and 5th parameters of the CUBESET-function (sort_order and sort_by) instead. This is more native to Excel and does not require as much MDX knowledge:

However, if you are not familiar with MDX, I highly recommend learning it before you write any advanced calculations as shown above, as otherwise the results might be a bit confusing in the beginning, especially if you filter and use TOPCOUNT on the same dimension!