In one of my recent posts I highlighted how multiple security roles are handled in tabular opposed to multidimensional Analysis Services models. In this post I will go into more detail and focus on how the security is evaluated for both models.

I would like to continue using the same example as in the last post with the following roles:

“Bikes” – is restricted to [ProductCategory] = “Bikes”

“Brakes” – is restricted to [ProductSubCategory] = “Brakes”

“DE” – is restricted to [Country] = “DE”

I used the following MDX query to test both, the tabular and the multidimensional model:

- SELECT

- NON EMPTY {[Geography].[Country Region Name].[Country Region Name].MEMBERS} ON 0,

- NON EMPTY {[Product].[Product Category Name].[Product Category Name].MEMBERS} ON 1

- FROM [Model]

- WHERE (

- [Measures].[Reseller Total Sales]

- )

The last thing I mentioned was how the combination of roles “Bikes” and “DE” could be expressed in SQL:

- SELECT

- [ProductCategory],

- [Country],

- SUM([Reseller Sales])

- FROM <table>

- WHERE [ProductCategory] = ‘Bikes’

- OR [Country] = ‘Germany’

- GROUP BY

- [Product Category],

- [Country]

When running the previous MDX query on our tabular model using roles “Bikes” and “DE” we will see a very similar query being executed against our xVelocity Storage Engine:

(Note: There are also some SE engine queries executed to get the elements for rows and columns but the query below is the main query)

- SELECT

- [Geography_1fa13899-0770-4069-b7cb-5ddf22473324].[EnglishCountryRegionName],

- [Product_11920c93-05ae-4f1c-980e-466dfbcfca2a].[CalculatedColumn1 1],

- SUM([Reseller Sales_fc635e72-28dc-4156-80d5-43b805f8df1c].[SalesAmount])

- FROM [Reseller Sales_fc635e72-28dc-4156-80d5-43b805f8df1c]

- LEFT OUTER JOIN [Reseller_d52b9c6f-8d2d-4e23-ae4c-2fc57c1d968a]

- ON [Reseller Sales_fc635e72-28dc-4156-80d5-43b805f8df1c].[ResellerKey]

- =[Reseller_d52b9c6f-8d2d-4e23-ae4c-2fc57c1d968a].[ResellerKey]

- LEFT OUTER JOIN [Geography_1fa13899-0770-4069-b7cb-5ddf22473324]

- ON [Reseller_d52b9c6f-8d2d-4e23-ae4c-2fc57c1d968a].[GeographyKey]

- =[Geography_1fa13899-0770-4069-b7cb-5ddf22473324].[GeographyKey]

- LEFT OUTER JOIN [Product_11920c93-05ae-4f1c-980e-466dfbcfca2a]

- ON [Reseller Sales_fc635e72-28dc-4156-80d5-43b805f8df1c].[ProductKey]

- =[Product_11920c93-05ae-4f1c-980e-466dfbcfca2a].[ProductKey]

- WHERE

- (COALESCE((PFDATAID( [Product_11920c93-05ae-4f1c-980e-466dfbcfca2a].[CalculatedColumn1 1] ) = 6))

- OR

- COALESCE((PFDATAID( [Geography_1fa13899-0770-4069-b7cb-5ddf22473324].[CountryRegionCode] ) = 5)));

Well, not really readable so lets make it a bit nicer by removing those ugly GUIDs, etc:

- SELECT

- [Geography].[EnglishCountryRegionName],

- [Product].[ProductCategory],

- SUM([Reseller Sales].[SalesAmount])

- FROM [Reseller Sales]

- LEFT OUTER JOIN [Reseller]

- ON [Reseller Sales].[ResellerKey] = [Reseller].[ResellerKey]

- LEFT OUTER JOIN [Geography]

- ON [Reseller].[GeographyKey] = [Geography].[GeographyKey]

- LEFT OUTER JOIN [Product]

- ON [Reseller Sales].[ProductKey] = [Product].[ProductKey]

- WHERE [Product].[ProductCategory] = "Bikes"

- OR [Geography].[CountryRegionCode] = "Germany";

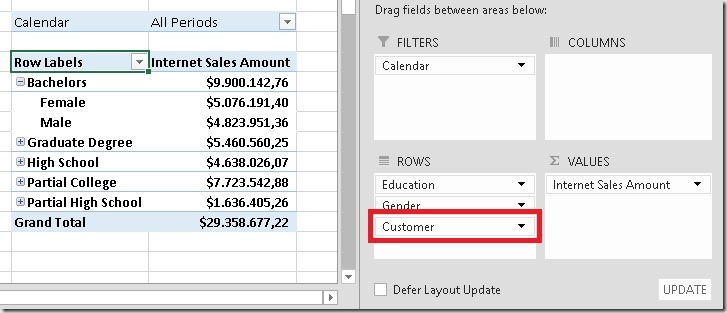

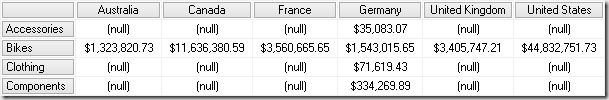

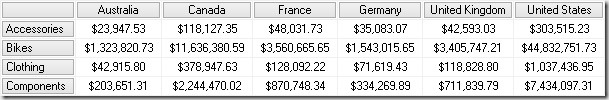

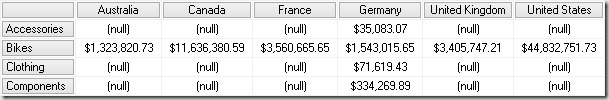

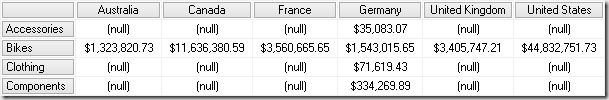

This looks very similar to our SQL query. The special thing about it is the WHERE clause which combines the restrictions of both roles using OR which is then propagated also to our [Reseller Sales] fact table and that’s the reason why we see what we want and expect to see – all sales that were either made with “Bikes” OR made in “Germany”:

Another very important thing to note and remember here is that the security restrictions get propagated into and are evaluated within the query. This is done for each and every query (!) which is usually not a problem but may become crucial if you use dynamic security.

To test this with dynamic security I introduced a new role called “CustData” which is going to replace our “Bikes” for this test. It is restricted on table ‘Product’ as:

- =([Product Category Name] = IF( CUSTOMDATA() = "1", "Bikes", "Clothing"))

So instead of using the connectionstring “…;Roles=Bikes,DE; …” I now used “…;Roles=CustData,DE;CustomData=1; …” which results in the exact same results of course. However, the query now changed to the following (already beautified) xVelocity query:

- SELECT

- [Geography].[EnglishCountryRegionName],

- [Product].[ProductCategory],

- SUM([Reseller Sales].[SalesAmount])

- FROM [Reseller Sales]

- LEFT OUTER JOIN [Reseller]

- ON [Reseller Sales].[ResellerKey] = [Reseller].[ResellerKey]

- LEFT OUTER JOIN [Geography]

- ON [Reseller].[GeographyKey] = [Geography].[GeographyKey]

- LEFT OUTER JOIN [Product]

- ON [Reseller Sales].[ProductKey] = [Product].[ProductKey]

- WHERE [Product].$ROWFILTER IN '0x000000…000000000000000000000fffff00000000000ffffffff'));

- OR [Geography].[CountryRegionCode] = "Germany";

Instead of using a direct filter on [ProductCategory] we now see a filter on $ROWFILTER ?!?

### ASSUMPTIONS START ###

I have to admit that I am currently not entirely sure what this means but I assume the following:

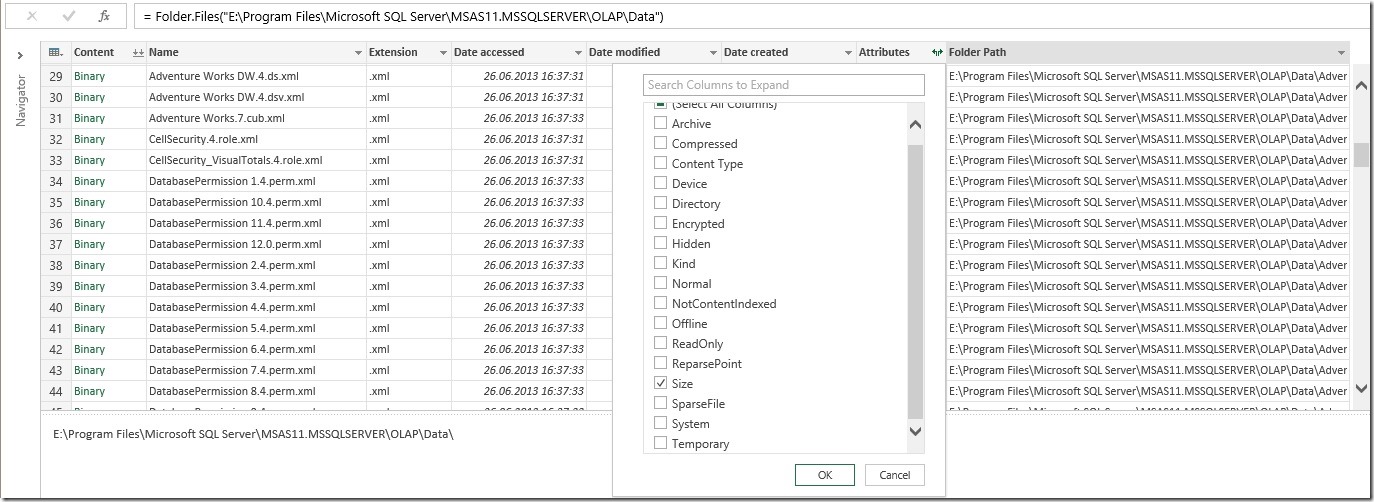

Preceding the main query another xVelocity query is executed which is important for us:

- SELECT

- [Product].[RowNumber],

- [Product].[ProductCategory],

- COUNT()

- FROM [Product];

This query fetches each [RowNumber] and its associated [ProductCategory]. Internally the [RowNumber] column is created for every table. This is related to the columnar storage that xVelocity uses. Elaborating this in detail would go far beyond the context of this blog post. For more details on the RowNumber-column please refer too http://msdn.microsoft.com/en-us/library/hh622558(v=office.15).aspx which describes the Excel data model which is actually Power Pivot and therefore also applies to Tabular. (In general this link contains a lot of in-depth information on the tabular data model and the columnar storage concepts!)

I further assume that our security-expression is then evaluated against this temporary table to create an bitmap index of which rows match the security-expression and which don’t. This result is then applied to our main query which using the WHERE clause [Product].$ROWFILTER IN ‘0x0000….’

For all subsequent queries the above query on [RowNumber] and [ProductCategory] is not executed again so I assume that the bitmap index gets cached internally by Analysis Services. I further assume that if the bitmap index gets cached it is also shared between users belonging to the same role which would be similar to multidimensional models.

### ASSUMPTIONS END ###

So the important take-away for tabular is that the security gets propagated into the query and down to the fact table. Combining multiple roles on this level using OR delivers the expected results.

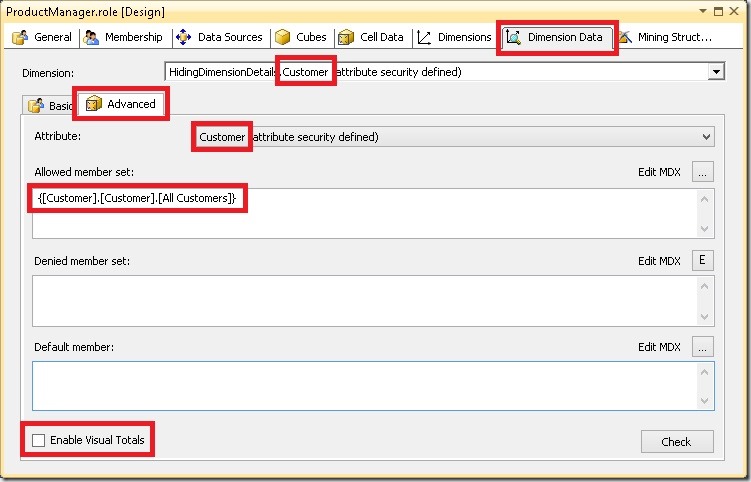

For multidimensional models this is slightly different. You can define security on either the Database Dimension (which gets inherited down to all Cube Dimension) or you can define security on the Cube Dimension directly. Defining security on the Database Dimension already makes it very clear that the security restrictions are independent of any fact table/measure group. A Database Dimension may be used in several cubes so the engine cannot know in advance which measure group to use. Security for multidimensional models is defined on the multidimensional space defined by that dimension. If one role is not restricted on a dimension at all, the user will always see the whole dimension and its associated values, even if a second role would restrict that dimension. And this causes unexpected results as the user may see the whole cube.

In terms of SQL the query could be expressed as:

- SELECT

- [ProductCategory],

- [Country],

- SUM([Reseller Sales])

- FROM <table>

- WHERE ( [ProductCategory] = 'Bikes' OR 1 = 1)

- OR ( 1 = 1 OR [Country] = 'Germany')

- GROUP BY

- [Product Category],

- [Country]

The left side of the inner OR statements represents the role “Bikes” whereas the right part represents the “DE” role. It should be very obvious that due to the combination of both roles you finally see everything without any restriction!

Another important thing to point out is that security for multidimensional models is only evaluated once and not for every query. So even if you have complex dynamic security its evaluation only hits you once for the first user that connects to a role and is cached for all queries of that user and also shared with other users belonging to that role.

I hope this gives some more insights on how tabular and multidimensional handle multiple roles and their fundamental differences!