An Analysis Services cube is usually accessed by a wide variety of people, each with a different role in the business: Product Managers, Sales Representatives, Key Account Managers and so on. Sometimes it is necessary to hide the detailed information of a given dimension or attribute from a certain user or role. In this post I will show you how this can be accomplished.

Let's take a simple example and assume we have the role “ProductManager”, which must not be allowed to see any specific details of a single customer but must be able to see aggregation levels above the customer, like Gender, Education, etc.

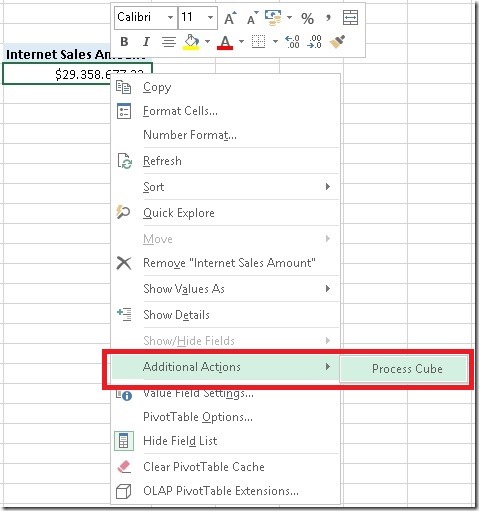

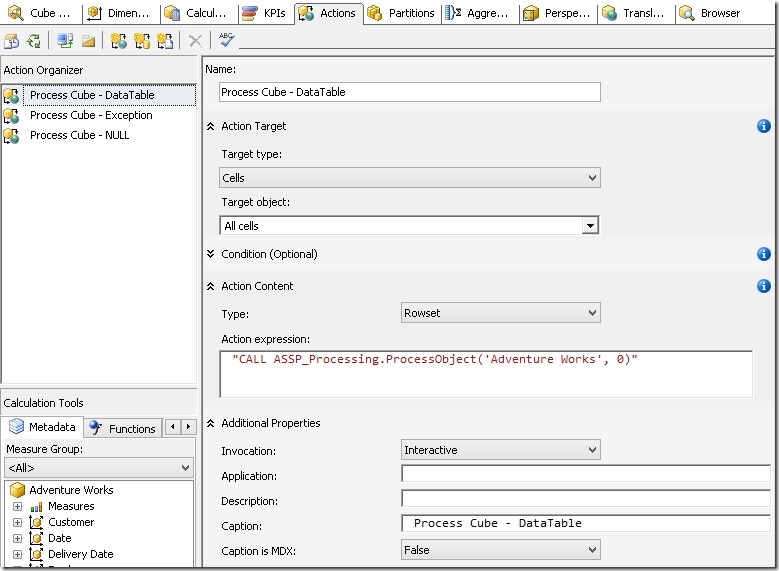

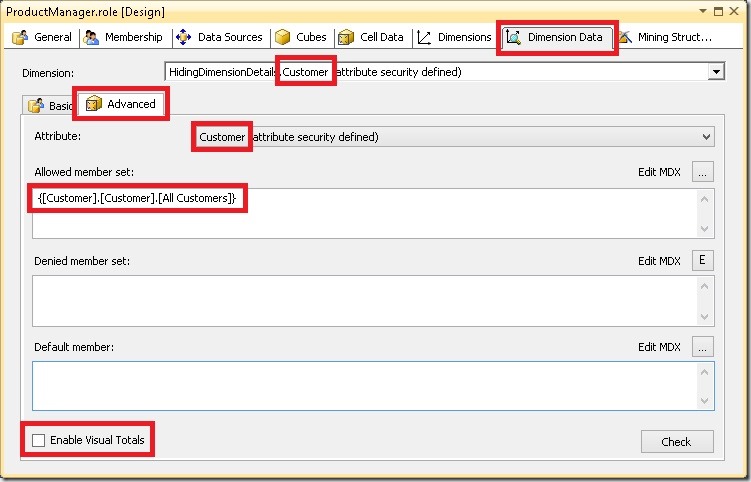

This can be accomplished quite easily by changing the security settings of our Product Manager role. We navigate to “Dimension Data” > Dimension “Customer” > Attribute “Customer”.

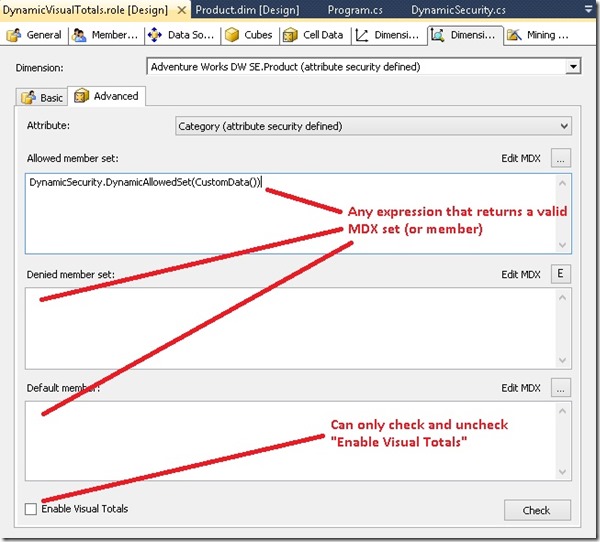

Next go to the “Advanced” tab and manually specify your Allowed Set as:

{[Customer].[Customer].[All Customers]}

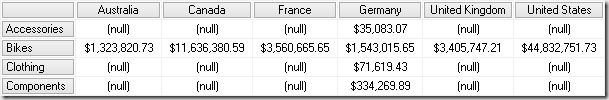

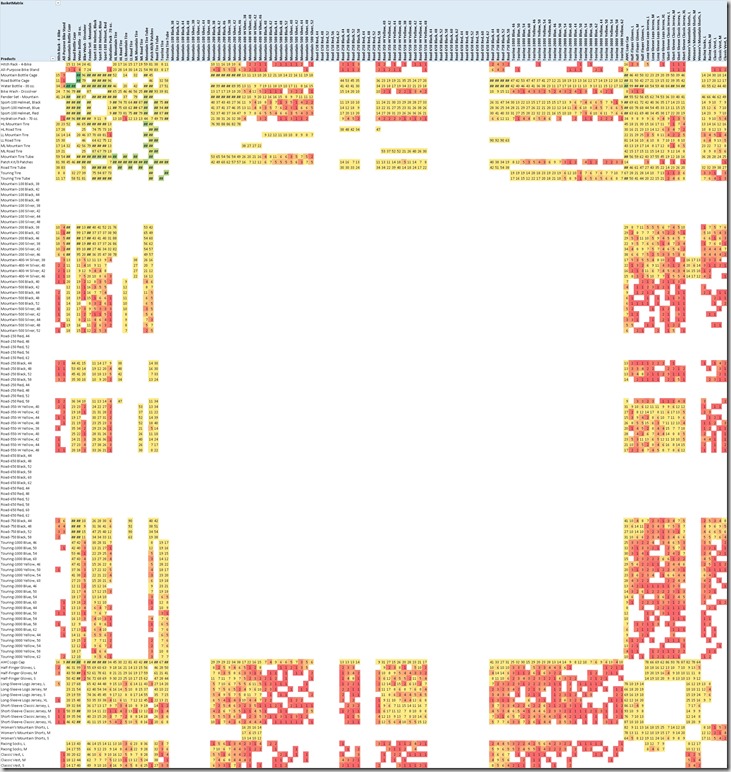

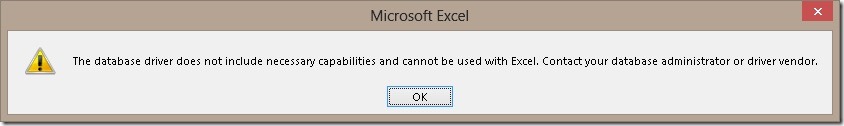

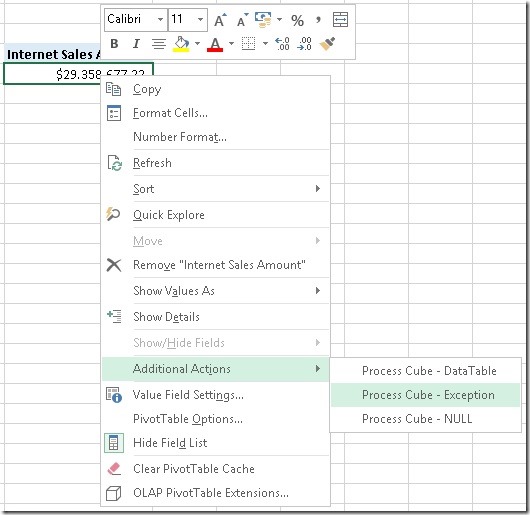

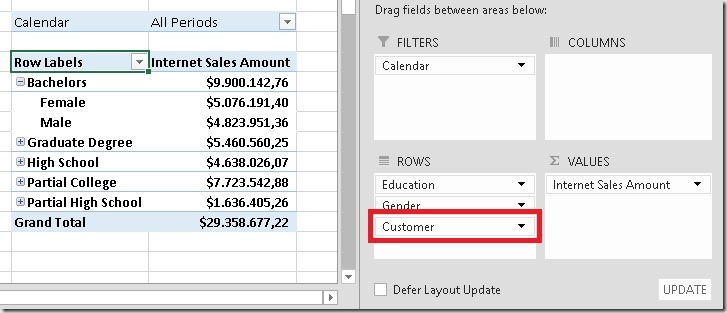

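One important thing here is to uncheck “Enable Visual Totals” as shown above, otherwise you will not see any data at all! With visual totals enabled, aggregates are calculated only from the members the role is allowed to see, and since only the All member is allowed, nothing is left to aggregate. An end user who is a member of the ProductManager role would see the following result in Excel: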

The user sees aggregated values at the Education and Gender level but cannot see details of any single customer.
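To make the effect of the “Enable Visual Totals” checkbox more tangible, here is a minimal Python sketch. It is a toy model and not SSAS code: the fact rows, the customer names and the function `sales_by_education` are all made up for illustration. It assumes that with visual totals enabled only facts of allowed leaf customers contribute to an aggregate, while with visual totals disabled the true totals are kept.

```python
from typing import Dict, Set

# Hypothetical fact rows: (customer, education, sales amount).
FACTS = [
    ("Alice", "Bachelors", 100.0),
    ("Bob", "Bachelors", 250.0),
    ("Carol", "High School", 75.0),
]


def sales_by_education(allowed_customers: Set[str], visual_totals: bool) -> Dict[str, float]:
    """Aggregate sales per Education value as a restricted role would see them."""
    totals: Dict[str, float] = {}
    for customer, education, amount in FACTS:
        # With visual totals on, facts of customers the role may not see are skipped.
        if visual_totals and customer not in allowed_customers:
            continue
        totals[education] = totals.get(education, 0.0) + amount
    return totals


# The role allows only the All member, so no leaf customer is in the allowed set.
no_leaf_customers: Set[str] = set()
with_visual_totals = sales_by_education(no_leaf_customers, visual_totals=True)
without_visual_totals = sales_by_education(no_leaf_customers, visual_totals=False)
```

In this model `with_visual_totals` is empty, which mirrors the "no data at all" situation, while `without_visual_totals` still holds the full totals per Education value.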

A scenario that’s a bit more complex to achieve is to hide not only one attribute but all attributes of a given dimension. Of course, we could use the approach described above and repeat it for each and every attribute in the dimension. This would technically work, but it is time-consuming to implement and also hard to maintain (e.g. if new attributes are added). Ideally we want to define it only once, in a single place, and have it work for all attributes, existing and new ones. We can make use of Auto-Exists within our security role here. Auto-Exists basically propagates the security defined on one attribute to all other attributes in the same dimension. So if you are restricted to [Country]=“Austria” you can only see Cities in Austria, and for Continents you would only see Europe but not America.
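The Austria example can be sketched in a few lines of Python. Again this is only a toy model of the idea behind Auto-Exists, not how SSAS implements it: the `GEOGRAPHY` rows and the helper `visible_members` are hypothetical names chosen for this illustration.

```python
from typing import Dict, List, Set

# Hypothetical dimension rows: one row per leaf member with its attribute values.
GEOGRAPHY: List[Dict[str, str]] = [
    {"City": "Vienna", "Country": "Austria", "Continent": "Europe"},
    {"City": "Graz", "Country": "Austria", "Continent": "Europe"},
    {"City": "Berlin", "Country": "Germany", "Continent": "Europe"},
    {"City": "Boston", "Country": "USA", "Continent": "America"},
]


def visible_members(secured_attribute: str, allowed: Set[str], attribute: str) -> List[str]:
    """Members of `attribute` that exist together with an allowed member of `secured_attribute`."""
    return sorted(
        {row[attribute] for row in GEOGRAPHY if row[secured_attribute] in allowed}
    )


# Security is defined once, on Country; the other attributes follow from it.
cities = visible_members("Country", {"Austria"}, "City")
continents = visible_members("Country", {"Austria"}, "Continent")
```

Here `cities` contains only Graz and Vienna and `continents` contains only Europe, even though no security was defined on City or Continent directly.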

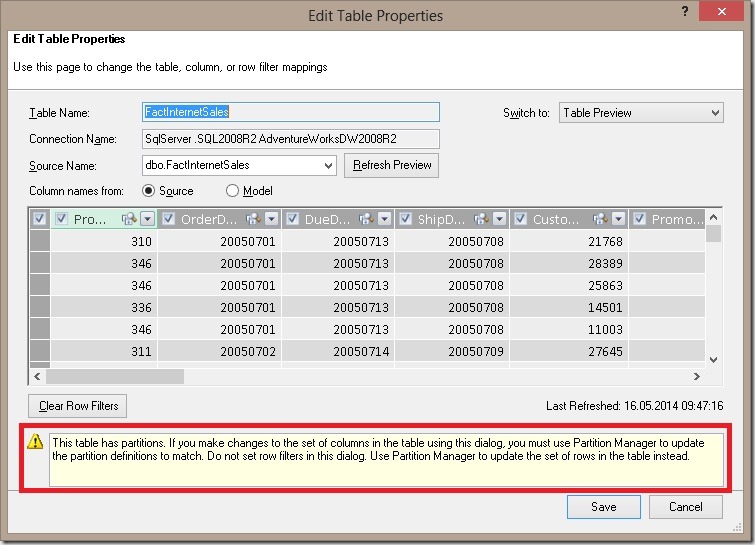

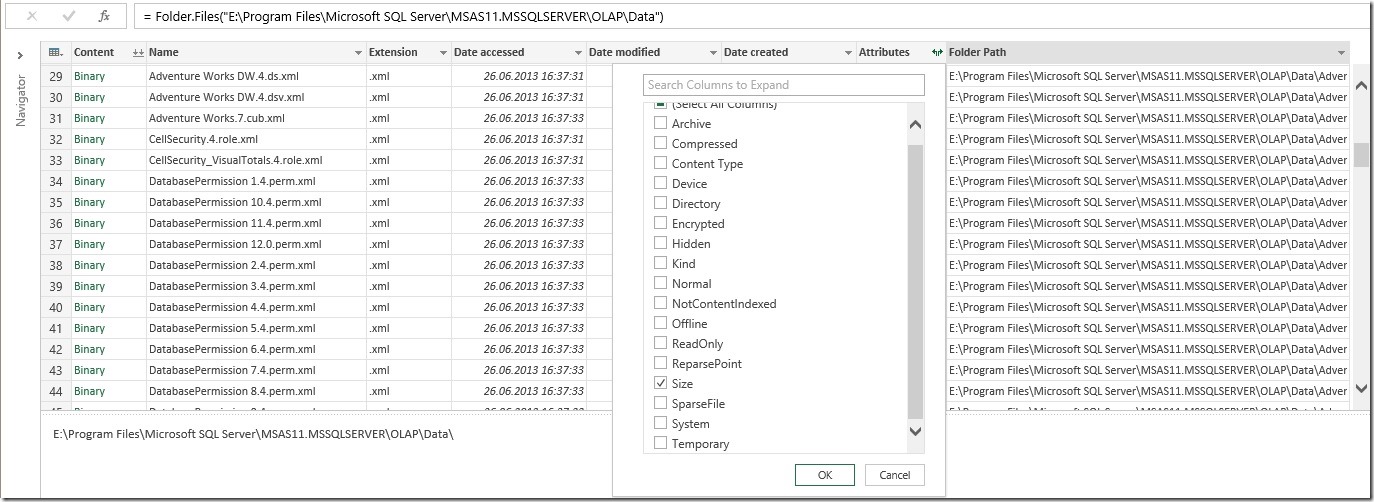

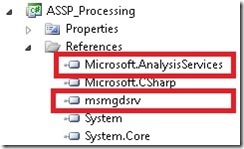

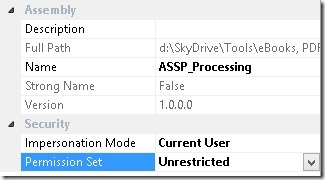

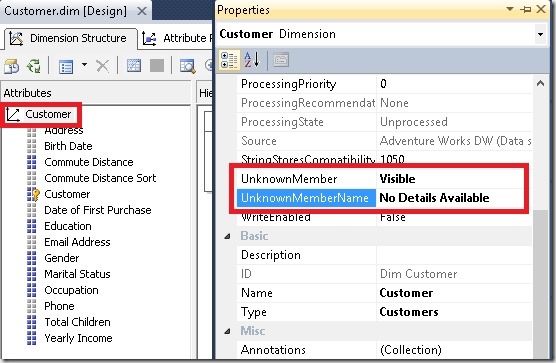

With this in mind, we could create a dummy customer (which has no associated facts) on which we put our security. Instead of creating a dummy customer manually in our relational DB, we can also make use of the special UnknownMember, which can be activated for any dimension. When we do this, SSAS automatically creates a dummy member for us which can be used for our security purposes:

Note that we have set the UnknownMemberName to “No Details Available” to inform the user that there are no details available for him/her.

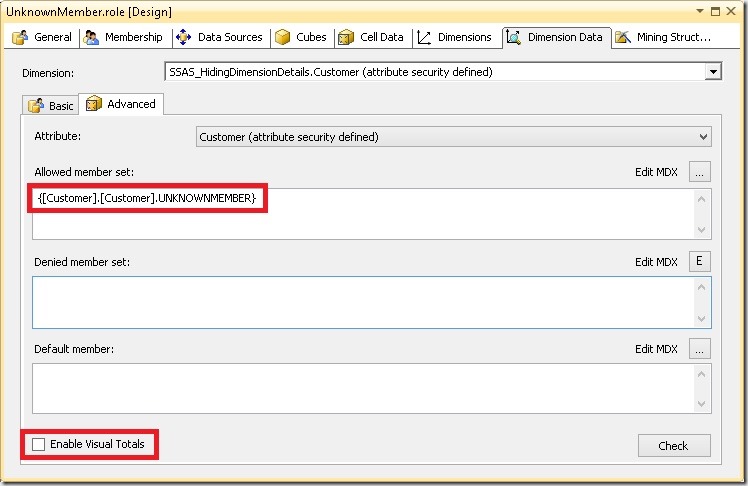

The role itself would then specify the UnknownMember in the Allowed Set as:

{[Customer].[Customer].UNKNOWNMEMBER}

Again, make sure that “Enable Visual Totals” is unchecked!

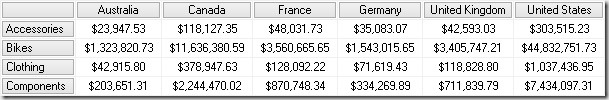

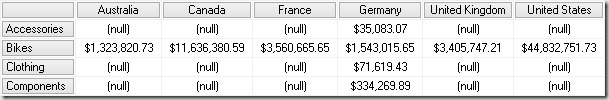

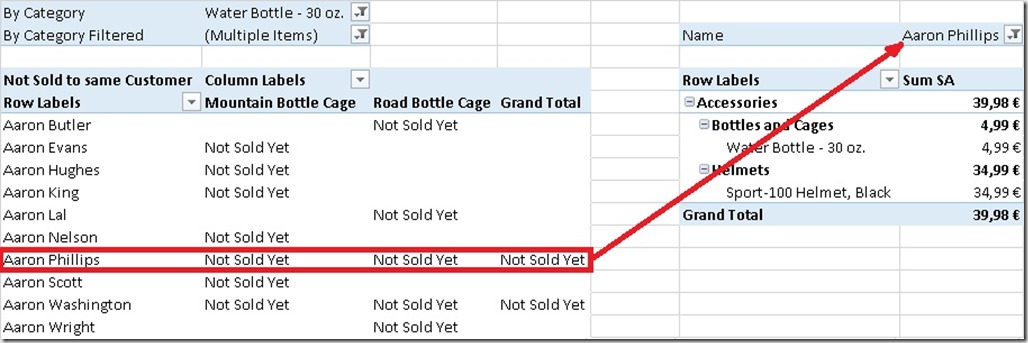

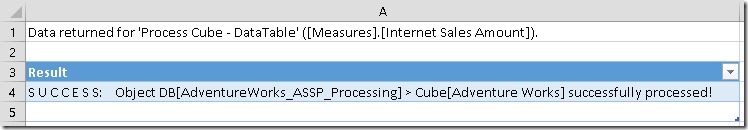

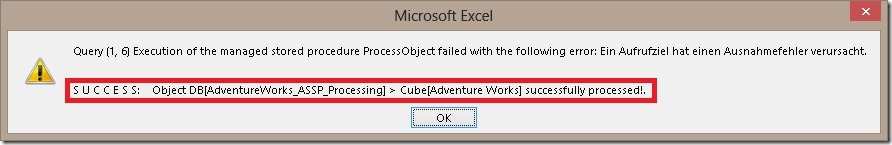

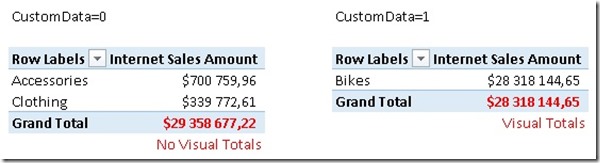

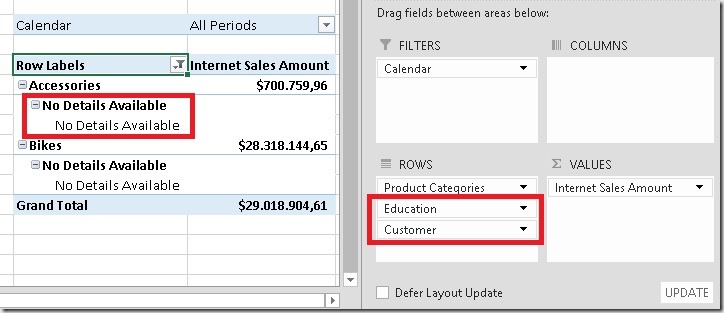

Now our Excel PivotTable looks as follows:

(Note: “Show items with no data on rows” was enabled in PivotTable options here)

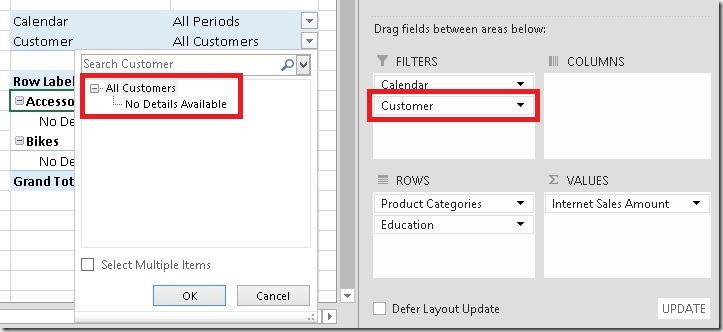

Using any attribute or hierarchy of our secured dimension in a filter would show this result:

One last option you may consider is to set the Visibility of the UnknownMember to “Hidden”, thus also hiding the UnknownMember and revealing only the All members, which was our initial requirement. However, I think using the UnknownMember’s name to provide some further information for the end user makes a lot of sense, e.g. naming it “No Details Available due to Security Restrictions” or “Restricted by Security”.

This is of course not possible if you create your dummy customer manually, which is why I prefer using the UnknownMember.

Note1:

During my tests I came across a bug: if you change the Visibility of the UnknownMember after you have already defined security, you end up seeing all details. Even a redeploy or a ProcessFull does not solve the problem. What you need to do is explicitly change the definition of the role (e.g. by adding a blank to the Allowed Set) and redeploy the role afterwards.

Note2:

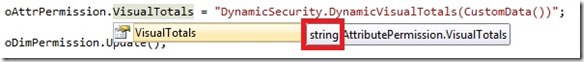

None of the above works for SSAS Tabular, as it does not have the concept of (disabled) Visual Totals, which is essential for this solution to work!