First of all I have to thank Marco Russo for his blog post on Optimizing High Cardinality Columns in Vertipaq and also his great session at SQL PASS Rally Nordic in Stockholm last year which taught me a lot about columnar storage in general. I highly recommend everyone to read the two mentioned resources before continuing here. Most of the ideas presented in this post are based on these concepts and require at least basic knowledge in columnar storage.

When writing one of my recent posts I ended up with a Power Pivot model with roughly 40M rows. It contained internet logs from Wikipedia, how often someone clicked on a given page per month and how many bytes got downloaded. As you can imagine those values can vary very heavily, especially the amount of bytes downloaded. So in the Power Pivot model we end up having a lot of distinct values in our column that we use for our measure. As you know from Marcos posts, the allocated memory and therefore also the performance of columnar storage systems is directly related to the number of distinct values in a column – the more the worse. Marco already described an approach to split up a single column with a lot of distinct values into several columns with less distinct values to optimize storage. These concepts can also be used on columns that contain measures or numeric values in general. Splitting numeric values is quite easy, assuming your values range from 1 to 1,000,000 you can split this column into two by dividing the value by 1000 and using MOD 1000 for the second column. Instead of one column with the value 123,456 you end up with two columns with the values 123 and 456. In terms of storage this means that instead of 1,000,000 distinct values we only need to store 2 x 1,000 distinct values. Nothing new so far.

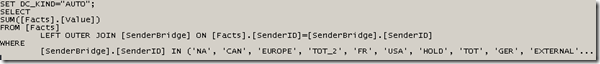

The trick is to combine those columns again at query time to get the original results as before the split. For some aggregations like SUM this is pretty straight forward, others are a bit more tricky. Though, in general the formulas are not really very complex and can be adopted very easily to handle any number of columns:

| Aggregation | DAX Formula |

| Value_SUM1 | =SUMX(‘1M_Rows_splitted’, [Value_1000] * 1000 + [Value_1]) |

| Value_SUM2 | =SUM ( ‘1M_Rows_splitted'[Value_1000] ) * 1000 + SUM ( ‘1M_Rows_splitted'[Value_1] ) |

| Value_MAX | =MAXX(‘1M_Rows_splitted’, [Value_1000] * 1000 + [Value_1]) |

| Value_MIN | =MINX(‘1M_Rows_splitted’, [Value_1000] * 1000 + [Value_1]) |

| Value_COUNT | =COUNTX(‘1M_Rows_splitted’, [Value_1000] * 1000 + [Value_1]) |

| Value_DISTINCTCOUNT | =COUNTROWS ( SUMMARIZE ( ‘1M_Rows_splitted’, ‘1M_Rows_splitted'[Value_1000], ‘1M_Rows_splitted'[Value_1])) |

As you can see you can still mimic most kind of aggregation even if the [Value]-column is split up.

Though, don’t exaggerate splitting your columns – too many may be a bit inconvenient to handle and may neglect the effect resulting in worse performance. Marco already showed that you can get a reduction of up to 90% in size, during my simple tests I came up with about the same numbers. Though, it very much depends on the number of distinct values that you actually have in your column!

I would not recommend to always use this approach for all your measure column – no, definitely not! First check how many distinct values your data/measures contain and decide afterwards. For 1 million distinct values it is probably worth it, for 10,000 you may reconsider using this approach. Most important here is to test this pattern with your own data, data model and queries! Test it in terms of size and of course also in terms of performance. It may be faster to split up columns but it may also be slower and it may be also different for each query that you execute against the tabular model / Power Pivot. Again, test with your own data, data model and queries to get representative results!

Here is a little test that you may run on your own to test this behavior. Simple create the following Power Query using M, load the result into Power Pivot and save the workbook. It basically creates a table with 1 million distinct values (0 to 999,999) and splits this column up into two. You can just copy the workbook, remove the last step “Remove Columns” and save it again to get the “original” workbook and Power Pivot model.

List1 = List.Numbers(0, 1000000),

TableFromList = Table.FromList(List1, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

RenamedColumns = Table.RenameColumns(TableFromList,{{"Column1", "Value"}}),

ChangedType1 = Table.TransformColumnTypes(RenamedColumns,{{"Value", type number}}),

InsertedCustom = Table.AddColumn(ChangedType1, "Value_1000", each Number.RoundDown([Value] / 1000)),

InsertedCustom1 = Table.AddColumn(InsertedCustom, "Value_1", each Number.Mod([Value], 1000)),

ChangedType = Table.TransformColumnTypes(InsertedCustom1,{{"Value_1000", type number}, {"Value_1", type number}}),

RemovedColumns = Table.RemoveColumns(ChangedType,{"Value"})

in

RemovedColumns

This is what I ended up with when you compare those two workbooks:

| # distinct values | Final Size | |

| single column | 1,000,000 | 14.4 MB |

| two columns | 2 x 1,000 | 1.4 MB |

We also get a reduction in size of 90%! Though, this is a special scenario …

In the real world, taking my previous scenario with the Wikipedia data as an example, I ended up with a reduction to 50%, still very good though. But as you can see the reduction factor varies very much.

Downloads:

Sample workbook with Power Query: 1M_Rows_splitted.xlsx