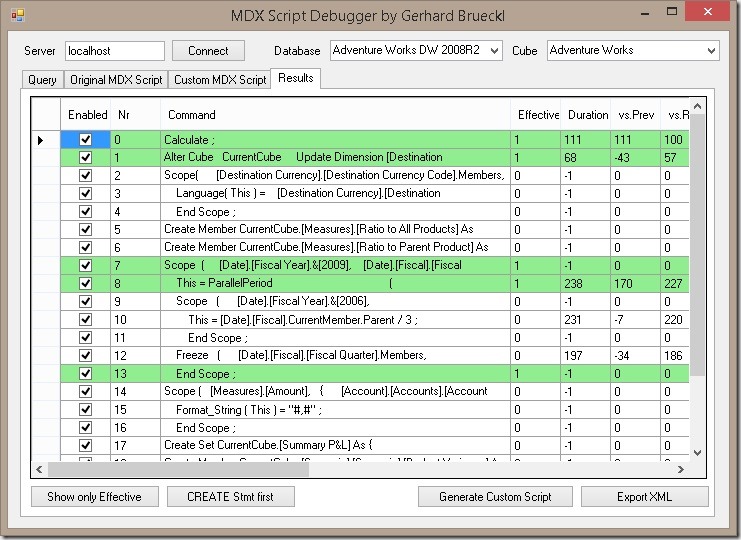

Recently at one of my customers we were doing some performance tuning on a SSAS Multidimensional cube and I came across something I have not seen before yet in my lifetime as a SSAS developer. Even the simplest select queries where running for several seconds, even if executed on warm cache! So first I thought this may be related to some SCOPE assignments overwriting values etc. Using my MdxScriptDebugger I could easily identify that none of the SCOPE assignments had any impact on the actual result of the query. However, what the result of the MdxScriptDebugger trace also revealed was that the query-time increased after a calculated member was created. Important to note here is that this specific member was NOT used in the test-query at all! Investigating into the calculated member turned out that it was using a Dynamic Named Set.

Dynamic Named Sets are usually used if you want a set to be re-evaluated in the current context opposed to Static Named Sets which are only evaluated once during creation. For example you can create a Dynamic Named Set for your Top 10 customers. Changing the Year or Country would cause a re-evaluation of the set and different customers are returned for your filter selections. These type of calculated sets is usually not a problem.

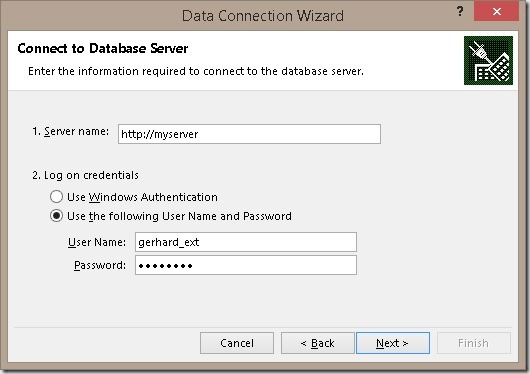

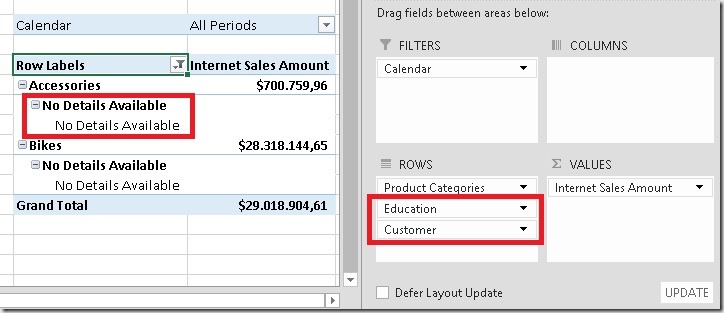

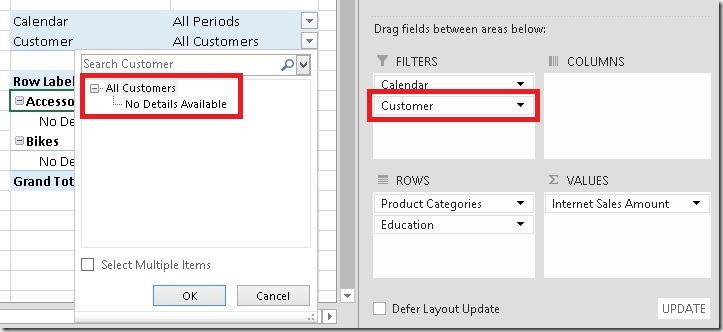

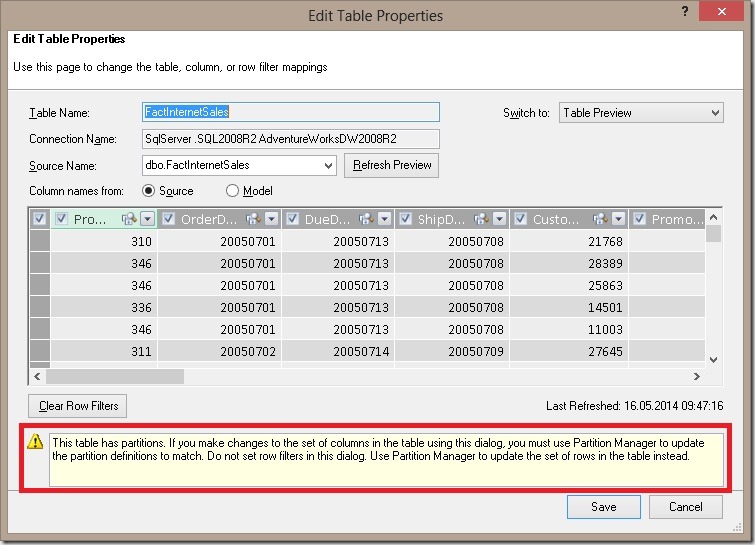

Another reason to used Dynamic Named Sets is to deal with Sub-Selects. Some client tools, foremost Microsoft Excel Pivot Tables, use Sub-Selects to deal with multiple selections on the same attribute. Lets do a little example on how to use Dynamic Named Sets here. Assume you need to calculate the average yearly sales for the selected years. This may sound very trivial at first sight but can be very tricky. In order to calculated the yearly average we first need to calculated how many years are in the currently selected:

- CREATE MEMBER CURRENTCUBE.[Measures].[CountYears_EXISTING] AS (

- COUNT(EXISTING [Date].[Calendar Year].[Calendar Year].members)

- );

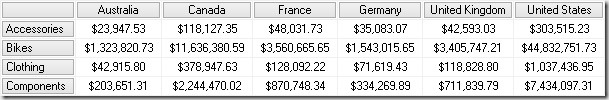

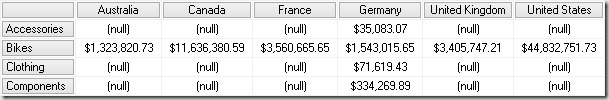

However, this does not work if Sub-Selects are used in the query:

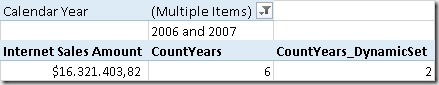

The calculated member returns “6” (the overall number of years) instead of “2” (the actually selected number of years). The issue here is that the calculation is not aware of any Sub-Select or filters within the Sub-Select as it is executed only outside of the Sub-Select.

To work around this issue you can create a Dynamic Name Set and refer to it in your calculated member:

- CREATE DYNAMIC SET [ExistingYears] AS {

- EXISTING [Date].[Calendar Year].[Calendar Year].members

- };

- CREATE MEMBER CURRENTCUBE.[Measures].[CountYears_DynamicSet] AS (

- COUNT([ExistingYears])

- );

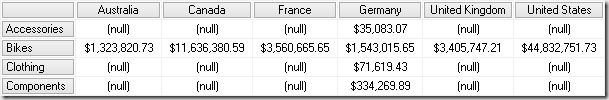

Now we get the correct results for our Count of Years calculation and could simply divide our Sales Amount by this value to get average yearly sales. The reason for this is that Dynamic Named Sets are also evaluated within the Sub-Select and therefore a COUNT() on it returns the correct results here.

So this technique is quite powerful and is also the only feasible workaround to deal with this kind of problem. But as I initially said, this can also cause some performance issues!

To illustrate this issue on Adventure Works simply add these two calculations to your MDX Script:

- CREATE DYNAMIC SET [ExpensiveSet] AS {

- Exists(

- [Product].[Category].[Category].members,

- Filter(

- Generate(

- Head([Date].[Calendar].[Month].MEMBERS, 30),

- CrossJoin(

- {[Date].[Calendar].CURRENTMEMBER},

- Head(

- Order(

- [Customer].[Customer].[Customer].MEMBERS,

- [Measures].[Internet Sales Amount],

- BDESC),

- 10000))),

- [Measures].[Internet Order Quantity] > -1

- ),

- 'Internet Sales'

- )

- };

- CREATE MEMBER CURRENTCUBE.[Measures].[UnusedCalc] AS (

- COUNT([ExpensiveSet])

- );

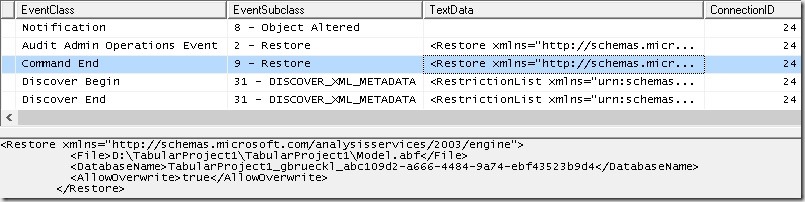

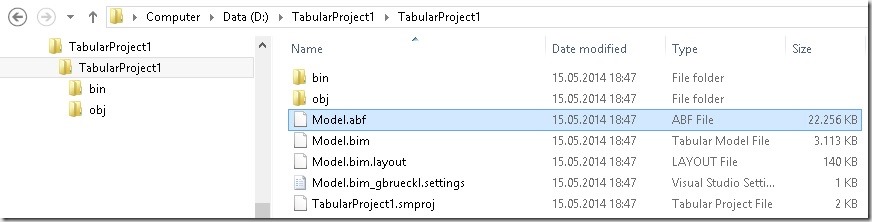

The [ExpensiveSet] is just a Dynamic Named Set which needs some time to be evaluated and the [UnusedCalc] measure does a simple count over the [ExpensiveSet]. Having these calculations in place you can now run any query against your cube and will notice that even the simplest query now takes some seconds to execute even if the new calculated member is not used:

- SELECT

- [Measures].[Internet Sales Amount] ON 0

- FROM [Adventure Works]

I am quite sure that this behavior is related to how Dynamic Named Sets are evaluated and why they also work for Sub-Selects. However, I also think that calculations that are not used in a query should not impact the results and/or performance of other queries!

I just created a bug on Connect in case you want to vote it:

https://connect.microsoft.com/SQLServer/feedback/details/1049569

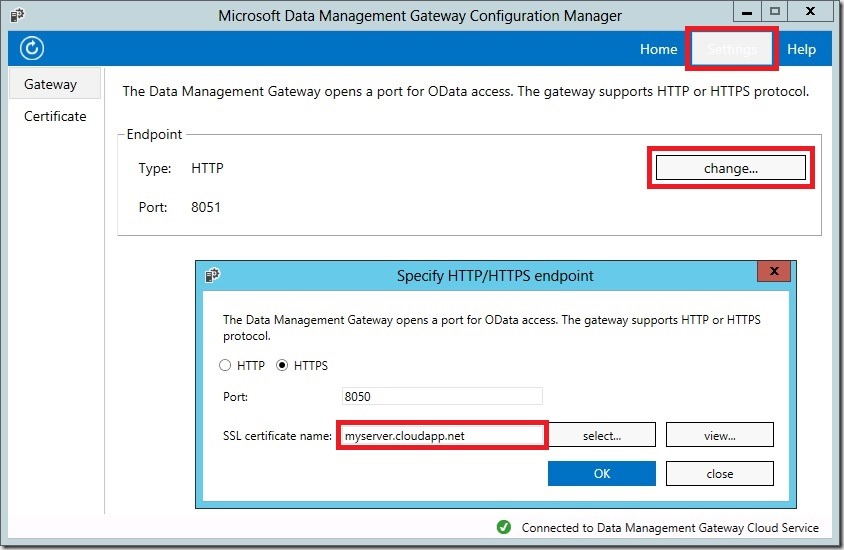

I know that Dynamic Named Sets in combination with calculated members is a not a widely used technique as I guess most developers are not even aware of its power. For those who are, please keep in mind that these kind of calculations get evaluated for every query which can be crucial if your Dynamic Named Set is expensive to calculate! This has also impact on meta-data queries!